The world of AI is rapidly evolving, and while cloud-based AI services offer convenience, they come with limitations—privacy concerns, unpredictable costs, and dependency on third-party providers. For developers who want full control over their AI workflows, self-hosting is an excellent alternative.

Recently, we explored setting up a self-hosted AI environment using n8n (a powerful workflow automation tool) and Pinggy (for secure remote access). Here’s how the experience went, along with key takeaways for fellow developers.

Why Self-Host AI Agents?

Cloud-based AI APIs are great, but they have drawbacks:

- Privacy risks – Sensitive data leaves your infrastructure.

- Cost unpredictability – Usage-based pricing can get expensive.

- Limited flexibility – You’re stuck with the provider’s models and rate limits.

By running AI models locally, we retain full control over data, costs, and customization.

The Setup: n8n + Ollama + Qdrant

The n8n Self-hosted AI Starter Kit bundles everything needed:

- n8n – For workflow automation.

- Ollama – To run local LLMs (such as Llama 3 and Mistral).

- Qdrant – A vector database for embeddings.

- PostgreSQL – Persistent storage for workflows.

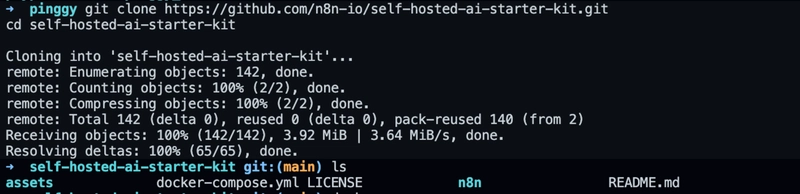

Step 1: Getting Started

After installing Docker and Docker Compose, the setup was straightforward:

```shell
git clone https://github.com/n8n-io/self-hosted-ai-starter-kit.git
cd self-hosted-ai-starter-kit
docker compose --profile cpu up # For CPU-only machines
```

For those with NVIDIA GPUs, using --profile gpu-nvidia significantly speeds up inference.
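If you move between machines, a small helper can choose the profile for you. This is a minimal sketch and not part of the starter kit: pick_profile is a hypothetical name, and it treats the presence of nvidia-smi as a rough hint that an NVIDIA GPU is available. That is only a heuristic, because Docker also needs the NVIDIA Container Toolkit before containers can use the GPU.

```shell
# Hypothetical helper (not part of the starter kit): print the Compose
# profile to use on this machine.
pick_profile() {
  # nvidia-smi ships with the NVIDIA driver, so its presence is treated as a
  # rough hint that the gpu-nvidia profile may work. It does not prove that
  # Docker can reach the GPU (that also requires the NVIDIA Container Toolkit).
  if command -v nvidia-smi >/dev/null 2>&1; then
    echo "gpu-nvidia"
  else
    echo "cpu"
  fi
}
```

From the starter kit directory, this becomes docker compose --profile "$(pick_profile)" up, which falls back to the CPU profile whenever no NVIDIA driver is found.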

Step 2: Accessing n8n

Once the containers were running, n8n was accessible at:

http://localhost:5678/
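The stack takes a little while to come up, so opening the page right after docker compose up can fail. For scripts, a small polling loop helps. This sketch assumes curl is installed on the host; wait_for_url is a hypothetical helper, and its default of 30 attempts two seconds apart is arbitrary.

```shell
# Hypothetical helper: poll a URL until it responds, or give up.
#   $1 = URL, $2 = max attempts (default 30), $3 = seconds between attempts (default 2)
wait_for_url() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=1
  while :; do
    # -s: no progress output; -f: treat HTTP errors (4xx/5xx) as failure;
    # --max-time: do not hang on a stalled connection.
    if curl -sf --max-time 5 -o /dev/null "$url"; then
      return 0
    fi
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
}
```

For example, wait_for_url http://localhost:5678/ && echo "n8n is up" waits up to about a minute before reporting.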

The first login required setting up an admin account. The starter kit includes a pre-built AI workflow, which was a great way to test the setup.

To try it out:

- Click the "Chat" button at the bottom of the workflow canvas.

- On the first run, the system will automatically download the Llama3 model—this might take a few minutes depending on your internet speed.

Once the model is loaded, you can start interacting with the AI directly in the chat interface.

Step 3: Running Local LLMs with Ollama

Behind the scenes, the chat workflow hands each message to Ollama. The first request triggered the Llama3 download, which was the slow part; after that, the model was served locally, so later chats in n8n ran without downloading anything again.
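Ollama can also be queried directly, which is a quick way to confirm a model works before wiring it into a workflow. This sketch assumes Ollama's port 11434 is reachable from the host (check the ports section of the starter kit's docker-compose.yml) and uses Ollama's /api/generate endpoint with streaming turned off, so the reply arrives as one JSON object whose response field holds the text. The function names are hypothetical, and the JSON escaping only covers quotes and backslashes, so keep prompts to a single line.

```shell
# Hypothetical helpers for talking to a local Ollama server over HTTP.

# Build the JSON body for POST /api/generate.
# Only backslashes and double quotes are escaped; multi-line prompts or
# control characters would need a real JSON tool such as jq.
ollama_payload() {
  model=$1
  prompt=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"model":"%s","prompt":"%s","stream":false}\n' "$model" "$prompt"
}

# Send one non-streaming prompt and print Ollama's raw JSON reply.
# Assumes Ollama is published on localhost:11434.
ollama_ask() {
  curl -s http://localhost:11434/api/generate \
    -H 'Content-Type: application/json' \
    -d "$(ollama_payload "$1" "$2")"
}
```

Usage: ollama_ask llama3:8b "Explain vector databases in one sentence."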

Exposing n8n Securely with Pinggy

While running locally is fine for testing, remote access is often needed. Pinggy made this simple with SSH tunneling.

Basic HTTP Tunnel

```shell
ssh -p 443 -R0:localhost:5678 a.pinggy.io
```

This generated a public URL (e.g., https://xyz123.pinggy.link) that forwarded traffic to the local n8n instance.
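The tunnel lives only as long as the SSH session, and free tunnels may be time-limited, so a long-running setup benefits from reconnecting automatically. The sketch below wraps any command in a bounded retry loop. run_with_retries is a hypothetical helper, and the ServerAliveInterval=30 option in the usage line (a standard OpenSSH setting that sends a keep-alive probe every 30 seconds) is our addition, not part of the command above. The public URL may change after a reconnect.

```shell
# Hypothetical helper: run a command up to $1 times, sleeping $2 seconds
# between attempts, and stop as soon as it exits successfully.
run_with_retries() {
  max=$1
  delay=$2
  shift 2
  n=1
  while :; do
    if "$@"; then
      return 0
    fi
    if [ "$n" -ge "$max" ]; then
      return 1
    fi
    echo "attempt $n failed; retrying in ${delay}s" >&2
    n=$((n + 1))
    sleep "$delay"
  done
}
```

Usage: run_with_retries 100 5 ssh -p 443 -o ServerAliveInterval=30 -R0:localhost:5678 a.pinggy.io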

Adding Authentication

For security, basic auth was added:

```shell
ssh -p 443 -R0:localhost:5678 -t a.pinggy.io b:username:password
```

With this in place, anyone opening the public URL has to enter the username and password before their requests reach n8n.
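Typing the password straight into the command leaves it in your shell history. One option, sketched here under our own assumptions, is to build the b:username:password keyword from environment variables. PINGGY_USER, PINGGY_PASS and pinggy_auth_arg are hypothetical names. The sketch rejects values containing a colon, since the keyword itself is colon-separated. The password still shows up in the ssh process's arguments, so this keeps it out of history, not hidden from other local users who can list processes.

```shell
# Hypothetical helper: print Pinggy's basic-auth keyword (b:user:pass)
# built from the PINGGY_USER and PINGGY_PASS environment variables.
pinggy_auth_arg() {
  if [ -z "${PINGGY_USER:-}" ] || [ -z "${PINGGY_PASS:-}" ]; then
    echo "PINGGY_USER and PINGGY_PASS must be set" >&2
    return 1
  fi
  # The keyword is colon-separated, so a colon in either value would be ambiguous.
  case "$PINGGY_USER$PINGGY_PASS" in
    *:*) echo "username and password must not contain ':'" >&2; return 1 ;;
  esac
  printf 'b:%s:%s\n' "$PINGGY_USER" "$PINGGY_PASS"
}
```

Usage: auth=$(pinggy_auth_arg) && ssh -p 443 -R0:localhost:5678 -t a.pinggy.io "$auth". Chaining with && means ssh only starts if the keyword was built, so a missing variable never silently opens an unauthenticated tunnel.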

Building AI-Powered Workflows

With everything running, it was time to experiment with different AI use cases:

1. Chatbot with Memory

- Used Postgres Chat Memory to retain conversation history.

- Connected Ollama for natural responses.

2. Document Summarization

- Processed PDFs with text-splitting nodes.

- Generated embeddings with Ollama and stored them in Qdrant.

- Created summaries using a summarization chain.

3. AI-Enhanced Data Processing

- Fetched data via HTTP requests.

- Used AI Transform nodes to classify and enrich data.

- Sent results via email/webhook.
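The text-splitting step in the summarization workflow breaks long documents into overlapping pieces before embedding them, so each chunk stays small and text near a boundary appears in two neighbouring chunks. n8n's splitter nodes are more sophisticated (the recursive variant prefers to break on separators such as paragraphs); the sketch below is a simplified fixed-size splitter for illustration only. chunk_text is a hypothetical name, sizes are counted in characters as awk sees them, and multi-line input is joined with spaces.

```shell
# Hypothetical helper: split stdin into fixed-size, overlapping chunks.
#   $1 = chunk size, $2 = overlap (both in characters as awk counts them)
# Input lines are joined with single spaces; one chunk is printed per line.
chunk_text() {
  awk -v size="$1" -v overlap="$2" '
    { text = (NR == 1) ? $0 : text " " $0 }
    END {
      step = size - overlap
      if (step <= 0) {
        print "overlap must be smaller than chunk size" > "/dev/stderr"
        exit 1
      }
      n = length(text)
      for (start = 1; start <= n; start += step) {
        print substr(text, start, size)
        # Stop once this chunk already reaches the end of the text.
        if (start + size > n) break
      }
    }'
}
```

For instance, chunk_text 1000 200 < document.txt (a hypothetical plain-text export of a PDF) prints chunks of up to 1000 characters, each starting 800 characters after the previous one.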

Security Considerations

Since the setup was self-hosted, security was a priority:

- Enabled authentication on Pinggy tunnels.

- Restricted access via IP whitelisting (where possible).

- Kept containers updated to avoid vulnerabilities.
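Keeping the containers updated comes down to two Compose commands: pull newer images, then recreate only the containers whose images changed. The helper below is a hypothetical wrapper around them. It assumes it runs from the starter kit directory and that your data lives in Docker volumes declared in the Compose file, so recreating containers keeps workflows and downloaded models. Images tagged latest can also bring breaking changes, so a backup before upgrading is prudent.

```shell
# Hypothetical wrapper: update the starter kit's containers in place.
#   $1 = Compose profile (default: cpu)
# Run from the directory containing the starter kit's docker-compose.yml.
update_stack() {
  profile=${1:-cpu}
  # Fetch newer versions of the images used by services in this profile.
  docker compose --profile "$profile" pull || return 1
  # Recreate only containers whose image or configuration changed, detached.
  docker compose --profile "$profile" up -d
}
```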

Troubleshooting

A few hiccups occurred:

Ollama Model Download Issues

Sometimes, the model failed to download automatically. Manually pulling it fixed the issue:

```shell
docker exec -it ollama ollama pull llama3:8b
```
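To avoid waiting on a download that silently failed, a script can check whether the model is present and pull it only if it is missing. This sketch assumes the Ollama container is named ollama, as in the command above, and reads the NAME column of ollama list. It drops the -it flags, which are only needed for an interactive terminal. has_model and ensure_model are hypothetical names, and the tag must match exactly: a model pulled as llama3 is listed as llama3:latest, not llama3:8b.

```shell
# Hypothetical helpers around the Ollama CLI inside the "ollama" container.

# Succeed if the model name in $1 appears in the NAME column of
# `ollama list` output read from stdin (the header line is skipped).
has_model() {
  awk -v m="$1" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}

# Pull the model only if it is not already present.
ensure_model() {
  if docker exec ollama ollama list | has_model "$1"; then
    echo "$1 already present"
  else
    docker exec ollama ollama pull "$1"
  fi
}
```

Usage: ensure_model llama3:8b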

n8n-Ollama Connection Errors

If n8n couldn’t reach Ollama, checking the Ollama credentials in n8n fixed it. The base URL had to match where Ollama was running:

- For Docker (Ollama running in the starter kit's container): http://ollama:11434/

- For a local Mac (Ollama installed natively, n8n still in Docker): http://host.docker.internal:11434/
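When it is unclear which address applies, the quickest check is to try both from inside the n8n container, since that is where the request originates. This sketch assumes the n8n container is named n8n and that its image includes BusyBox wget, as Alpine-based images typically do; find_ollama_url is a hypothetical helper. It requests Ollama's /api/tags endpoint, which lists the locally available models, so any successful response means Ollama is reachable at that address.

```shell
# Hypothetical helper: from inside the n8n container, try each candidate
# Ollama base URL and print the first one that answers.
find_ollama_url() {
  for url in http://ollama:11434/ http://host.docker.internal:11434/; do
    # -q: quiet, -T 5: five-second timeout, -O /dev/null: discard the body.
    if docker exec n8n wget -q -T 5 -O /dev/null "${url}api/tags"; then
      echo "$url"
      return 0
    fi
  done
  echo "Ollama is not reachable from the n8n container" >&2
  return 1
}
```

The printed URL is the value to paste into the base URL field of the Ollama credentials in n8n.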

Conclusion

Self-hosting AI agents with n8n and Pinggy proved to be a powerful, flexible, and cost-effective approach. The ability to run local LLMs, automate workflows, and securely expose services makes this setup ideal for developers who prioritize privacy and control.

For anyone looking to experiment with AI automation without relying on cloud APIs, this is a fantastic starting point. The n8n starter kit simplifies the process, and Pinggy ensures secure remote access with minimal effort.
