One of the challenges with Power Automate is the lack of robust exception handling.

Currently you have 2 options:

- Handle exceptions in each flow - for more check out Exception Handling in Power Automate and Everything you didn't know you needed to know about Power Automate Errors

- Use alerts in Application Insights Power Automate - Flow Logging in App Insights

The latter is the best as it can be done centrally, but it requires additional Azure resources and misses additional logic I need.

So as always, I built my own within the Power Platform.

- Requirements

- Design

- Building the Flow

- End Solution

1. Requirements

The Key Requirements I needed:

- Multiple escalation routes depending on what dev team

- Only report process fail once per day (not 1000 fails = 1000 tickets

- In platform (avoid dependencies)

So in my solution you can either send exception email, add to SharePoint list, add to Dataverse list, or call an API.

The reason I chose this mix is because each team has their own escalation process, SharePoint and Emails cover everyone (and allow additional flows to be built on top of), adding in Dataverse (for the Platform Admins) and a API (for ticketing systems like SNOW) covers the more IT side.

Callout, in my demo its a simple HTTP, with no auth, so probably not right, but you can swap it out for other connectors.

2. Design

The good news is now our flow runs are not just in Azure, they are now duplicated in an elastic table in Dataverse called Flow Runs.

Elastic tables are document (no sql) databases, they are designed for transactions with no relationships. But in nearly every way we treat them same as a normal sql table.

Unfortunately there is a problem, our normal Dataverse trigger on item created does not work on the Flow Run table (I've had it work on other Elastic tables so I'm guessing it's to stop infinite loops, flow triggers log triggers flow etc).

Even automated Low-code plug-ins don't work

So we need to change our approach and do a scheduled 'List Items'. The table is at an environment level, so we need to loop over every environment and grab every run.

Our next issue is the first requirement, how do we enable every dev team to have their own escalation process. We have SharePoint, Outlook, and API, but how de we link the flow to the escalation process. Here we are going to use one of the most underutilized features in Power Automate, Publishers.

Publishers don't just have name and prefix, they have multiple other fields that we can use.

The plan is then, developers setup publisher with following optional fields:

- Email: Outlook escalation email

- Website: HTTP endpoint

- Street 1: SharePoint Site url

- Street 2: SharePoint List Id

If the flow finds any of the above values then it will trigger that escalation process based on the values.

Next piece is linking the flow to the publisher, so we need to do some hops from a few Dataverse tables:

The final issue is to only report once per timespan (in my case per day). So if a flow failed 1000 times there would only be 1 escalation per day. To do this im going to use a Dataverse table, this also has the added benefit of tracking any exception where the developer hasn't filled in the publisher.

The table has the following fields (which are the same for SharePoint).

- Flow: Flow Name

- Error: JSON error message

- RunId: Runid (used to create run link)

- RunLink: Url to open run log

- Solution: Solution Name

- Publisher: Publisher Name

- FlowId: Used to create run link and to check for duplicate escalations

- Create On: Duplicate escalation date range

3. Building the Flow

There will be 2 flows, the scheduler and the logger. The logger will be the childflow and have all of the complexity.

Logger

We don't want to get duplicate escalations, so first we need to do is check the exception table for any current exceptions today.

We only need the workflowid, and that's because the plan is to use the workflowid in the get failed runs.

_workflow_value ne {workflowid}

The challenge is how do we create that query for ever flow already escalated.

After getting workflowid's we then use a select to convert the response to a one dimensional array

[{workflowid},{workflowid},{workflowid},{workflowid}]

Unfortunately there is no concatenation() expression in Power Automate, but there is in Power FX, so we can use a Low-Code Plug-in/Dataverse Function (for more info check out my 3 blog series starting here Functions in Dataverse & Low-Code Plug-ins 101)

The plug-in only accepts strings, so we need to convert the array to a string, and to make it easier remove the [ and ]. We do that using the string() and substring() (removes first and last character, which is the [ and ]) expression. The last thing we want to do is remove any duplicates (incase there was multiple failures in the time window), that's done with the union() expression.

substring(

string(union(body('Select_Flow_Name'),body('Select_Flow_Name')))

,

1

,

sub(length( string(union(body('Select_Flow_Name'),body('Select_Flow_Name')))),2)

)

The Power FX code in the Low-Code plug-in then splits the string by a comma, and then concatenate it back together with the query filter:

"and _workflow_value ne" & {workflowid}

With({

filter:Concat(Split(flowIds,",")," and _workflow_value ne "&Value,"")

},

If(filter=" and _workflow_value ne ",

{query:""}

,

{query:filter}

)

)

The plug-in then returns the filter query which we can pass to the get failed flow runs (along with the time window condition and failed status).

Next we are going to loop over every failed run, but what if there has been multiple failures, we don't want to log everyone. So we use a variable and condition, every workflowid gets appended to the variable, and we check to see if the variable contains the next workflowid.

We filter the solutioncomponents table to find the solution from the workflowid (named objectid in the table). As the workflow can also be in the Default and Common Dataservices solution we need to filter them out.

objectid eq '@{items('Apply_to_each')?['workflowid']}' and solutionid/friendlyname ne 'Common Data Services Default Solution' and solutionid/friendlyname ne 'Default Solution'

Instead of doing 3 List Items, we can use the $expand Odata parameter. This brings all the fields of a lookup column, and what's cool is you can do nested (so we can expand a expand, in this case publisher in solution).

So we use the solution component table to get everything but the flow name (as the objectid is not a lookup field).

Expand Query

solutionid($expand=publisherid($select=friendlyname,publisherid,address1_line1,address1_line2,emailaddress))

To get the flow name we just use the 'Get a row by ID from selected environment' action, as the workflowid is the row id.

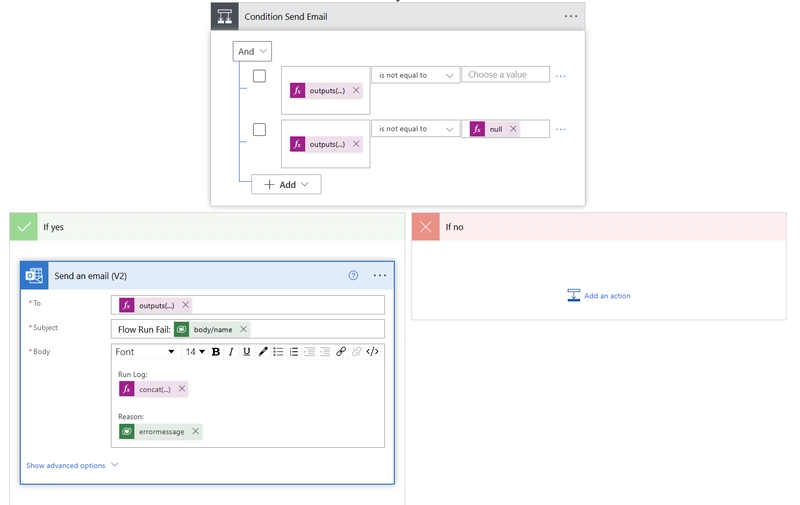

We then check to see if the publisher fields are null or blank, if not we can follow the escalation process:

SharePoint

The final step is to create the entry in the Dataverse Exception table and append to the variable to stop duplicate escalations.

4. End Solution

And that's it, the scheduler flow loops over all environments (I set up the account to only have access to prod, or you could add a filter), which passes the Dataverse Url, environment Id, and the time window to the logger flow. In my case I pass 15 minutes, so the scheduler runs every 15 minutes, and the logger gets all failed runs in the last 15 minutes.

Quick call out, the daily API's can stack up quickly, depending on the number of environments and average failure rate. I did a rough estimate based on 10 environments and 2 failed runs per 15 mins and it showed 14400 daily API calls, so the owner will most likely need a premium license (40k per day), or in big orgs flow only license (250k per day).

As always the export can be found in my GitHub here: New Exceptions - https://github.com/wyattdave/Power-Platform

If you would like to get notified every new blog (I also do a few in the Power Platform Community), subscribe below

Top comments (1)

this is super cool.. thanks for building and sharing the toolkit. .. Had an issue to build the monitoring / a circular dependency – basically if the flow table was tracked then input into the table would cause the trigger which in turn updates the flow table which triggers it again. Will have to explore the toolkit.. but looks amazing !!!