The promise of quantum machine learning (QML) lies in its potential to revolutionize how we process data and solve complex problems, possibly offering computational advantages on certain tasks that are beyond the reach of classical computers. Realizing this potential today, however, is complicated by the limitations of current quantum hardware, which belongs to what is often called the Noisy Intermediate-Scale Quantum (NISQ) era. Navigating this noise is paramount for developing robust QML applications.

Understanding the NISQ Era

The term NISQ, coined by physicist John Preskill in 2018, describes quantum computers that have a limited number of qubits (roughly 50 to a few hundred) and are not yet able to perform full quantum error correction. These devices are characterized by several key limitations:

- Limited Qubit Count: The relatively small number of available qubits restricts the size and complexity of the algorithms that can be run. A classical bit is either 0 or 1, whereas a qubit can exist in a superposition of both states, and the state of n qubits is described by 2^n complex amplitudes, so the state space doubles with each added qubit. This exponential state space does not automatically translate into exponential speedups, and current qubit counts are far too small for fault-tolerant quantum computation, which needs many physical qubits to encode each error-corrected logical qubit.

- Short Coherence Times: Qubits are fragile and susceptible to environmental interference, which causes them to lose their quantum properties (decoherence) very quickly. This short coherence time means that quantum computations must be executed rapidly, limiting the depth and complexity of circuits.

- Inherent Noise: NISQ devices are inherently noisy. Operations on qubits, such as applying quantum gates or performing measurements, are not perfectly accurate. These errors accumulate, leading to inaccurate results and making it difficult to discern the true quantum signal from the noise.
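A rough calculation shows why these limits bite. Assuming each gate fails independently with probability p, a circuit of G gates runs error-free with probability (1 - p)^G, and a coherence time T with gate duration t leaves room for only about T / t sequential gates. The sketch below uses illustrative numbers (0.1% gate error, 100 µs coherence time, 50 ns gates); they are assumptions, not the specifications of any particular device.

```python
# Back-of-the-envelope NISQ budget. The device numbers are illustrative
# assumptions, not specifications of any real machine.


def circuit_success_probability(error_per_gate: float, num_gates: int) -> float:
    """Probability that none of the gates fails, assuming independent errors."""
    return (1.0 - error_per_gate) ** num_gates


def max_sequential_gates(coherence_time_ns: int, gate_time_ns: int) -> int:
    """Number of gates that fit back-to-back inside one coherence time."""
    return coherence_time_ns // gate_time_ns


if __name__ == "__main__":
    # Hypothetical device: 0.1% error per gate, 100 us coherence time, 50 ns gates.
    for gates in (100, 1_000, 10_000):
        p = circuit_success_probability(0.001, gates)
        print(f"{gates:>6} gates -> P(no error) = {p:.3f}")
    print("sequential gate budget:", max_sequential_gates(100_000, 50))
```

With these assumed values the error-free probability falls from about 0.90 at 100 gates to about 0.37 at 1,000 gates and to essentially zero at 10,000, while the coherence budget allows roughly 2,000 sequential gates. Real error models are more structured than independent failures, so treat this as order-of-magnitude intuition only.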

These limitations profoundly impact QML algorithm performance. Algorithms designed for ideal, error-free quantum computers often fail or produce unreliable results when run on NISQ devices. The challenge is to design QML approaches that can deliver meaningful results despite these imperfections.

The Impact of Noise on QML

Noise in quantum computers manifests in various forms, each contributing to the degradation of QML algorithm performance:

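- Decoherence: As mentioned, this is the loss of quantum coherence due to interaction with the environment. Excited qubits relax toward |0⟩ (energy relaxation, characterized by the time T1), and the relative phases of superpositions are scrambled (dephasing, characterized by T2). Together these erode the superposition and entanglement that give quantum computers their power.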

- Gate Errors: When quantum gates (the building blocks of quantum circuits) are applied, they may not perform the intended operation perfectly. These errors can accumulate across multiple gates, leading to significant deviations from the desired quantum state.

- Measurement Errors: Errors can also occur when the state of a qubit is measured. A qubit that was supposed to be in a |0⟩ state might be measured as |1⟩, and vice-versa, introducing inaccuracies into the final results.
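To see how these noise sources distort what is actually measured, the sketch tracks a single number: the probability of reading 1 from a qubit prepared in |1⟩. It assumes three textbook single-qubit models: a depolarizing error on each gate, T1 relaxation during an idle period, and an asymmetric readout flip. It also assumes a circuit whose ideal gates leave |1⟩ unchanged, so any drop below 1 comes from noise. All parameter values are hypothetical.

```python
import math


def depolarizing(p1: float, p: float) -> float:
    """Gate error: with probability p the qubit is replaced by the maximally
    mixed state, which pulls P(1) toward 1/2."""
    return (1 - p) * p1 + p / 2


def relaxation(p1: float, idle_ns: float, t1_ns: float) -> float:
    """Decoherence (T1 energy relaxation): excited population decays toward |0>."""
    return p1 * math.exp(-idle_ns / t1_ns)


def readout(p1: float, p01: float, p10: float) -> float:
    """Measurement error: p01 = P(read 1 | state 0), p10 = P(read 0 | state 1)."""
    return p1 * (1 - p10) + (1 - p1) * p01


def observed_p1(num_gates: int, idle_ns: float, t1_ns: float = 100_000,
                gate_error: float = 0.001, p01: float = 0.02, p10: float = 0.05) -> float:
    """P(read 1) for a qubit prepared in |1>, after `num_gates` gates that ideally
    act as the identity on it, followed by an idle period of `idle_ns`."""
    p1 = 1.0
    for _ in range(num_gates):
        p1 = depolarizing(p1, gate_error)
    p1 = relaxation(p1, idle_ns, t1_ns)
    return readout(p1, p01, p10)


if __name__ == "__main__":
    for gates, idle in ((0, 0), (50, 0), (50, 20_000)):
        value = observed_p1(gates, idle)
        print(f"gates={gates:>3}, idle={idle:>6} ns -> P(read 1) = {value:.3f} (ideal 1.000)")
```

Each channel pushes the observed value away from the ideal 1.0, and the effects compound. A cost function built from such measurements is therefore biased too, which is one way noise hinders optimization.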

These noise sources can lead to inaccurate predictions, hinder the convergence of optimization algorithms, and ultimately prevent QML algorithms from demonstrating a "quantum advantage" over their classical counterparts. Without effective strategies to counteract noise, the potential of QML remains largely theoretical.

Hybrid Quantum-Classical Algorithms as a Solution

One of the most promising strategies for building robust QML applications in the NISQ era is the adoption of hybrid quantum-classical algorithms. These approaches cleverly divide the computational workload, leveraging the strengths of both quantum and classical processors while mitigating the weaknesses of NISQ devices.

In a hybrid framework, the quantum computer performs specific, computationally intensive tasks that benefit from quantum properties, such as preparing quantum states, encoding data into high-dimensional quantum feature spaces, or estimating quantum kernels. Crucially, these quantum computations are kept relatively shallow to minimize the impact of noise. The classical computer, on the other hand, handles tasks like optimization, parameter updates, and overall algorithm control, which are well-suited to classical processing and are less susceptible to quantum noise.

Prime examples of this paradigm are the Variational Quantum Eigensolver (VQE) and the Quantum Support Vector Machine (QSVM). In VQE and related variational models, a parameterized quantum circuit (often called an "ansatz") is executed on the quantum hardware. The measurement results are fed back to a classical optimizer, which adjusts the circuit parameters iteratively to minimize a cost function, effectively "training" the quantum model. This iterative feedback loop lets the classical processor steer the quantum computation towards a good solution, even in the presence of noise. A QSVM divides the work differently: the quantum device estimates kernel values (similarities between data points in the quantum feature space), and a classical SVM solver performs the training.
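As a concrete picture of the kernel-estimation role, the sketch below computes a fidelity kernel k(x, y) = |⟨φ(x)|φ(y)⟩|² for a simple angle-encoding feature map in which each feature x_i rotates its own qubit with RY(x_i). This feature map is an assumption, chosen because its states are easy to simulate classically. On hardware the overlap would instead be estimated from measurement statistics, for example by running the encoding circuit for x followed by the inverse of the one for y and recording how often all qubits return to 0.

```python
import math
from typing import List, Sequence


def feature_state(x: Sequence[float]) -> List[List[float]]:
    """Angle encoding (assumed): feature x_i prepares one qubit in RY(x_i)|0>.
    The result is a product state, stored qubit by qubit as real amplitudes."""
    return [[math.cos(xi / 2), math.sin(xi / 2)] for xi in x]


def fidelity_kernel(x: Sequence[float], y: Sequence[float]) -> float:
    """k(x, y) = |<phi(x)|phi(y)>|^2; for product states the overlap factorizes."""
    overlap = 1.0
    for (a0, a1), (b0, b1) in zip(feature_state(x), feature_state(y)):
        overlap *= a0 * b0 + a1 * b1  # single-qubit inner product
    return overlap ** 2


def kernel_matrix(data: Sequence[Sequence[float]]) -> List[List[float]]:
    """Gram matrix that a classical SVM would consume."""
    return [[fidelity_kernel(x, y) for y in data] for x in data]


if __name__ == "__main__":
    for row in kernel_matrix([[0.1, 0.5], [1.0, 2.0], [2.5, 0.3]]):
        print(" ".join(f"{v:.3f}" for v in row))
```

The resulting Gram matrix is symmetric with ones on the diagonal and can be handed to a classical SVM that accepts precomputed kernels, such as scikit-learn's `SVC(kernel="precomputed")`. This product-state encoding has no entanglement and is easy to simulate, so it illustrates the mechanics rather than any quantum advantage.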

Here's a conceptual Python-like pseudo-code snippet demonstrating a hybrid QML approach:

```python
# Conceptual Python-like pseudo-code for a hybrid QML approach.
# `qml_library`, `initialize_random_parameters`, and `calculate_cost`
# are placeholders, not a real package or real helpers.
from qml_library import QuantumCircuit, ClassicalOptimizer


def hybrid_qml_model(data, labels, num_epochs=100):
    # 1. Quantum feature map (executed on quantum hardware)
    quantum_features = QuantumCircuit.encode_data(data)

    # 2. Classical optimization loop
    optimizer = ClassicalOptimizer()
    parameters = initialize_random_parameters()

    for epoch in range(num_epochs):
        # 3. Quantum circuit execution (on quantum hardware, with noise)
        predictions = QuantumCircuit.run_model(quantum_features, parameters)

        # 4. Classical cost calculation
        cost = calculate_cost(predictions, labels)

        # 5. Classical parameter update
        parameters = optimizer.update_parameters(parameters, cost)

    # The trained model is the circuit together with its optimized parameters
    return parameters
```
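For a version that actually runs, the sketch below replaces the placeholder library with a one-qubit simulation in plain Python. It assumes the simplest possible VQE-style problem: the ansatz RY(θ)|0⟩, the cost ⟨Z⟩ (minimized at θ = π), gradients from the parameter-shift rule (two extra circuit evaluations per parameter), and plain gradient descent as the classical optimizer. Passing `shots` imitates hardware by estimating each expectation value from a finite number of simulated measurements.

```python
import math
import random
from typing import List, Optional, Tuple


def expectation_z(theta: float, shots: Optional[int] = None,
                  rng: Optional[random.Random] = None) -> float:
    """<Z> for the one-qubit ansatz RY(theta)|0>.

    The exact value is cos(theta). If `shots` is given, the value is instead
    estimated from simulated single-shot measurements, mimicking sampling noise.
    """
    p0 = math.cos(theta / 2) ** 2  # probability of measuring 0
    if shots is None:
        return 2 * p0 - 1
    rng = rng if rng is not None else random.Random()
    zeros = sum(1 for _ in range(shots) if rng.random() < p0)
    return (2 * zeros - shots) / shots


def parameter_shift_grad(theta: float, shots: Optional[int] = None,
                         rng: Optional[random.Random] = None) -> float:
    """d<Z>/dtheta from two shifted circuit runs (parameter-shift rule for RY)."""
    plus = expectation_z(theta + math.pi / 2, shots, rng)
    minus = expectation_z(theta - math.pi / 2, shots, rng)
    return 0.5 * (plus - minus)


def train(theta0: float = 0.1, learning_rate: float = 0.4, epochs: int = 50,
          shots: Optional[int] = None, seed: int = 0) -> Tuple[float, List[float]]:
    """Hybrid loop: the 'quantum' side evaluates costs and gradients,
    the classical side performs plain gradient descent."""
    rng = random.Random(seed)
    theta, history = theta0, []
    for _ in range(epochs):
        history.append(expectation_z(theta, shots, rng))                   # quantum: cost
        theta -= learning_rate * parameter_shift_grad(theta, shots, rng)  # classical: update
    return theta, history


if __name__ == "__main__":
    for shots in (None, 1000):
        theta, history = train(shots=shots)
        print(f"shots={shots}: theta = {theta:.3f} (target pi = {math.pi:.3f}), "
              f"last sampled cost = {history[-1]:.3f}")
```

With exact expectation values θ converges to π. With 1,000 shots per evaluation it still settles close to π, but the sampled cost fluctuates from epoch to epoch, which is the kind of noise the classical optimizer has to tolerate on real devices.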

This hybrid approach limits the impact of noise by keeping the quantum circuits shallow and offloading the iterative, noise-sensitive optimization steps to classical processors, while the quantum hardware focuses on tasks where its unique capabilities may offer an advantage. This strategy appears throughout the research literature, including a comprehensive review of integrating AI with quantum computing that explores these hybrid frameworks in depth.

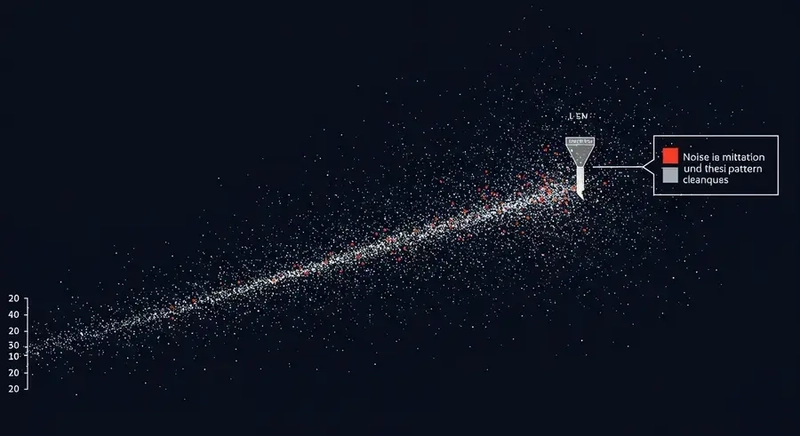

Noise Mitigation Techniques

Beyond hybrid algorithms, several specific techniques are being developed to reduce the impact of noise on NISQ devices without requiring full fault-tolerant quantum error correction, which is still a distant goal. These "error mitigation" techniques aim to extract more accurate results from noisy quantum computations.

- Measurement Error Mitigation: Errors often occur during the final measurement of qubits. Techniques like "measurement error calibration" involve running calibration circuits to characterize the measurement errors of each qubit and then using these characterizations to statistically correct the results of the main computation.

- Zero-Noise Extrapolation (ZNE): This technique involves running the same quantum circuit at several different, deliberately increased noise levels. By observing how the results degrade with increasing noise, one can extrapolate back to predict what the result would have been in the hypothetical "zero-noise" limit.

- Dynamic Decoupling: This involves applying carefully timed sequences of short, high-fidelity pulses to qubits while they sit idle during a computation. Each pulse "flips" the qubit's state, so phase errors accumulated from slowly varying environmental noise before the flip are undone after it, preserving coherence for longer periods. Noise that fluctuates faster than the pulse spacing is not canceled.
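The extrapolation step of zero-noise extrapolation can be sketched with a toy model. Purely for illustration, the code assumes that each gate multiplies an ideal expectation value of 1.0 by (1 - 0.01), and that a ten-gate circuit is run at noise scale factors 1, 3, and 5, as gate folding (replacing a gate G with G G† G) would produce. It then applies Richardson extrapolation, meaning the polynomial through the measured points evaluated at zero noise, to estimate the noiseless value.

```python
from typing import Sequence

IDEAL_VALUE = 1.0  # hypothetical noiseless expectation value (e.g. <ZZ> of a Bell state)


def noisy_expectation(scale: float, error_per_gate: float = 0.01, num_gates: int = 10) -> float:
    """Toy noise model (an assumption): every gate shrinks the expectation value by
    (1 - error_per_gate); a noise scale factor multiplies the effective gate count."""
    return IDEAL_VALUE * (1 - error_per_gate) ** (scale * num_gates)


def richardson_extrapolate(scales: Sequence[float], values: Sequence[float]) -> float:
    """Evaluate the interpolating polynomial through (scale, value) points at scale = 0
    using Lagrange weights prod_{j != i} s_j / (s_j - s_i)."""
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)
        estimate += weight * v_i
    return estimate


scales = [1, 3, 5]  # noise amplification factors, e.g. from gate folding
values = [noisy_expectation(s) for s in scales]
mitigated = richardson_extrapolate(scales, values)
print(f"raw = {values[0]:.3f}, zero-noise estimate = {mitigated:.3f}, ideal = {IDEAL_VALUE}")
```

In this toy model the raw value is about 0.904, while the extrapolated estimate is about 0.998. Real devices add shot noise, and their noise does not always scale cleanly under folding, so extrapolated estimates are less accurate and have higher variance than this example suggests.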

These techniques, while not eliminating noise entirely, significantly improve the quality of results obtained from NISQ hardware. For a deeper dive into these and other concepts, exploring resources like demystifying-quantum-ml.pages.dev can provide further clarity on the intricacies of quantum machine learning.
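The core idea behind dynamic decoupling is easiest to see in its simplest form, the Hahn spin echo. The sketch assumes that the environment shifts the qubit's frequency by a random detuning δ that stays constant during one run but differs between runs (quasi-static noise). A qubit prepared in |+⟩ picks up a phase δt during free evolution, and averaging over runs washes out ⟨X⟩. Inserting a single X pulse halfway reverses the phase accumulated afterward, so the two halves cancel. Time and detuning are in arbitrary consistent units.

```python
import cmath
import math
import random
from typing import Tuple

State = Tuple[complex, complex]  # amplitudes of |0> and |1>


def free_evolve(state: State, delta: float, t: float) -> State:
    """Evolve under H = (delta / 2) Z for time t: a relative phase delta * t builds up."""
    a0, a1 = state
    return a0 * cmath.exp(-1j * delta * t / 2), a1 * cmath.exp(1j * delta * t / 2)


def x_pulse(state: State) -> State:
    """Ideal pi pulse about X: swaps the |0> and |1> amplitudes."""
    a0, a1 = state
    return a1, a0


def expectation_x(state: State) -> float:
    a0, a1 = state
    return 2 * (a0.conjugate() * a1).real


def coherence(total_time: float, sigma: float, echo: bool,
              shots: int = 2000, seed: int = 1) -> float:
    """Average <X> of a qubit started in |+>, over runs with random static detuning."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(shots):
        delta = rng.gauss(0.0, sigma)  # assumed constant within a single run
        state: State = (complex(1 / math.sqrt(2)), complex(1 / math.sqrt(2)))  # |+>
        if echo:
            state = free_evolve(state, delta, total_time / 2)
            state = x_pulse(state)  # the refocusing flip
            state = free_evolve(state, delta, total_time / 2)
        else:
            state = free_evolve(state, delta, total_time)
        total += expectation_x(state)
    return total / shots


if __name__ == "__main__":
    print("free evolution:", round(coherence(3.0, 1.0, echo=False), 3))
    print("with echo:     ", round(coherence(3.0, 1.0, echo=True), 3))
```

Under this quasi-static assumption the echo restores ⟨X⟩ to 1, while free evolution decays toward exp(-(σt)²/2). Real noise drifts during a run, which is why practical sequences such as CPMG or XY4 repeat the flip many times.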

Here's a conceptual Python-like pseudo-code snippet illustrating noise simulation and mitigation:

```python
# Conceptual Python-like pseudo-code for noise simulation and mitigation.
# `qml_library` is a placeholder, not a real package.
from qml_library import QuantumCircuit, NoiseModel, apply_error_mitigation

# Define a simple quantum circuit that prepares the Bell state (|00> + |11>) / sqrt(2)
qc = QuantumCircuit(num_qubits=2)
qc.h(0)      # Hadamard puts qubit 0 into superposition
qc.cx(0, 1)  # CNOT entangles qubit 1 with qubit 0

# Simulate with a basic noise model
noisy_results = qc.run_with_noise(NoiseModel.realistic_nisq())

# Apply a measurement error mitigation technique
mitigated_results = apply_error_mitigation(noisy_results)

print(f"Noisy results: {noisy_results}")
print(f"Mitigated results: {mitigated_results}")
```
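Here is a runnable counterpart to the measurement-error-mitigation step, in plain Python instead of the placeholder library. For each qubit, a 2x2 confusion matrix A with A[i][j] = P(read i | prepared j) is assumed to have been estimated from calibration circuits (prepare |0⟩ and |1⟩, then count the outcomes). Assuming readout errors are independent across qubits, the two-qubit matrix is the Kronecker product of the single-qubit ones, and inverting it undoes the distortion. The error rates are hypothetical, and the noisy distribution is computed exactly rather than sampled.

```python
from typing import List

Matrix = List[List[float]]


def confusion_matrix(p01: float, p10: float) -> Matrix:
    """A[i][j] = P(read i | prepared j) for one qubit.
    p01 = P(read 1 | prepared 0), p10 = P(read 0 | prepared 1)."""
    return [[1 - p01, p10],
            [p01, 1 - p10]]


def inverse_2x2(a: Matrix) -> Matrix:
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det],
            [-a[1][0] / det, a[0][0] / det]]


def kron_2x2(a: Matrix, b: Matrix) -> Matrix:
    """Kronecker product a (x) b; basis index = 2 * bit_of_a + bit_of_b."""
    return [[a[i][j] * b[k][m] for j in range(2) for m in range(2)]
            for i in range(2) for k in range(2)]


def mat_vec(mat: Matrix, vec: List[float]) -> List[float]:
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, vec)) for row in mat]


# Hypothetical calibration results for qubit 1 (left bit) and qubit 0 (right bit)
a_q1 = confusion_matrix(p01=0.03, p10=0.08)
a_q0 = confusion_matrix(p01=0.02, p10=0.05)

ideal = [0.5, 0.0, 0.0, 0.5]                 # Bell state: P(00) = P(11) = 1/2
noisy = mat_vec(kron_2x2(a_q1, a_q0), ideal)  # what the device would report

# For independent errors, the inverse of a Kronecker product is the product of inverses
correction = kron_2x2(inverse_2x2(a_q1), inverse_2x2(a_q0))
mitigated = mat_vec(correction, noisy)

for label, p_noisy, p_mit in zip(["00", "01", "10", "11"], noisy, mitigated):
    print(f"{label}: noisy = {p_noisy:.4f}, mitigated = {p_mit:.4f}")
```

In this idealized setting inversion recovers the Bell-state distribution exactly. With finite shots the inverted vector can contain small negative entries, so in practice it is common to clip and renormalize or to solve a constrained least-squares problem instead. Correlated readout errors require calibrating the full 2^n x 2^n matrix.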

Practical Frameworks and Tools

The development of QML has been greatly aided by the emergence of open-source quantum computing frameworks that provide tools for building, simulating, and running QML algorithms on real quantum hardware or simulators. Popular examples include:

- Qiskit (IBM): A comprehensive framework for quantum computing, offering modules for circuit composition, simulation, and execution on IBM Quantum systems. It includes features for noise modeling and error mitigation. IBM Research actively contributes to the field of quantum machine learning, focusing on foundational research and practical applications.

- PennyLane (Xanadu): A software library for quantum machine learning, quantum chemistry, and quantum computing. It integrates with various quantum hardware and simulators and is particularly strong in automatic differentiation for optimizing quantum circuits.

- Cirq (Google): Google's open-source framework for creating, editing, and invoking quantum circuits. It's designed for NISQ hardware and allows for fine-grained control over quantum operations.

These frameworks empower researchers and developers to experiment with QML algorithms, simulate the effects of noise, and implement various noise mitigation techniques, accelerating progress in the field.

Real-World Applications and Current Limitations

Despite the challenges of the NISQ era, noise-robust QML is being explored across a range of promising application areas. In materials science, QML could accelerate the discovery of new materials with desired properties by simulating molecular structures. Drug discovery could benefit from QML's ability to model complex molecular interactions, potentially leading to new therapies. Financial modeling might see improvements in optimization and risk assessment, while image classification and pattern recognition could leverage quantum-enhanced feature spaces for better performance on complex datasets.

However, it's crucial to acknowledge that these applications are currently limited by the scale of NISQ devices. While quantum machine learning shows promise, especially for complex datasets with non-linear relationships, the small number of available qubits and the lack of full error correction restrict its application to smaller, more constrained problems. The computational resources required for simulating quantum algorithms on classical hardware are also substantial, as noted in a review of quantum machine learning, which observed that simulated QSVMs required significant memory and training time compared to classical SVMs. This underscores that the "quantum advantage" is still largely theoretical for many practical, large-scale problems.
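Two quick calculations make the simulation-cost point concrete. They assume a dense state-vector simulator that stores each amplitude as a 16-byte complex number, and a fidelity-kernel QSVM whose N x N Gram matrix is symmetric with a unit diagonal, so only the off-diagonal upper triangle needs circuit evaluations. These figures show scaling only; they do not reproduce the review's measurements.

```python
BYTES_PER_AMPLITUDE = 16  # one double-precision complex amplitude (assumed simulator format)


def statevector_bytes(num_qubits: int) -> int:
    """Memory for a dense state vector of 2**num_qubits amplitudes."""
    return BYTES_PER_AMPLITUDE * 2 ** num_qubits


def kernel_circuit_count(num_train: int) -> int:
    """Distinct off-diagonal entries of a symmetric Gram matrix: N(N - 1) / 2.
    The diagonal of a fidelity kernel is 1 and needs no circuit."""
    return num_train * (num_train - 1) // 2


if __name__ == "__main__":
    for n in (20, 30, 40):
        print(f"{n} qubits -> {statevector_bytes(n) / 2**30:,.3f} GiB")
    print("kernel circuits for 1,000 training points:", kernel_circuit_count(1_000))
```

Each added qubit doubles the memory (about 16 GiB at 30 qubits and about 16 TiB at 40), and kernel training needs a number of circuit evaluations that grows quadratically with the training-set size, before accounting for repeated shots per entry.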

The Road Ahead

The NISQ era is a critical stepping stone towards fault-tolerant quantum computing. Current efforts in noise mitigation and the development of hybrid quantum-classical algorithms are not just temporary fixes; they are crucial for understanding the fundamental challenges of quantum computation and for laying the groundwork for future, more powerful quantum systems. As quantum hardware continues to improve, with increasing qubit counts, longer coherence times, and lower error rates, the capabilities of QML are expected to expand substantially.

The future of QML will likely involve a gradual transition from noise-mitigated NISQ applications to truly fault-tolerant quantum algorithms. This evolution could unlock much more of quantum computing's potential, enabling transformative breakthroughs across science and industry. The ongoing research in quantum machine learning, as detailed in various surveys, emphasizes the need for scalable, noise-resistant hardware and more efficient training algorithms to bridge the gap between theoretical promises and practical applications.
