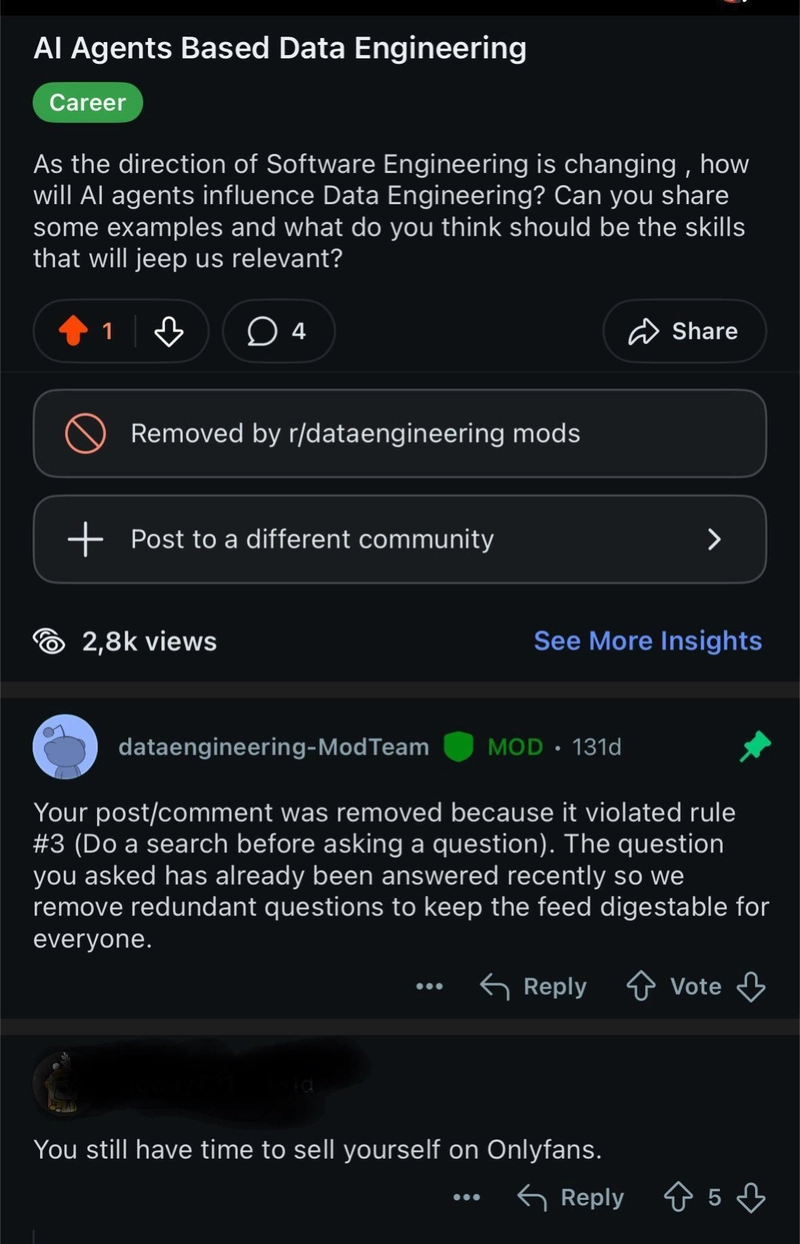

The Origin Story: When Reddit Roasts Spark Innovation

Picture this: You're a data engineer scrolling through Reddit, genuinely asking about emerging AI trends to stay ahead of the curve. You post a thoughtful question about what new technologies you should learn, expecting insights about MLOps, vector databases, or maybe the latest streaming frameworks.

Instead, you get: "You still have time to sell yourself on OnlyFans."

Most people would roll their eyes and move on. But sometimes, the most ridiculous comments spark the most interesting ideas. What if we took that sarcastic comment and turned it into a legitimate technical challenge? What if we built a sophisticated real-time data processing system that could handle the scale and complexity of a content platform, complete with an AI-powered deployment interface?

That's exactly what happened here, and the result is a fascinating exploration of modern data engineering architecture that combines LLM-powered DevOps automation with Apache Flink stream processing.

The Technical Vision: Beyond the Meme

What started as a Reddit joke evolved into a comprehensive demonstration of cutting-edge data engineering patterns:

- Natural Language DevOps: Using OpenAI GPT-4 to parse deployment commands and automatically provision Apache Flink jobs

- Real-Time Stream Processing: Apache Flink jobs processing events with sub-second latency

- Modern Data Lakehouse: Apache Iceberg tables providing ACID transactions and schema evolution

- Event-Driven Architecture: Kafka-based event streaming with automatic scaling

- Infrastructure as Code: Complete Docker Compose orchestration for reproducible deployments

The system architecture demonstrates enterprise-grade patterns while maintaining the flexibility to experiment with emerging technologies.

System Architecture: Three Pillars of Modern Data Processing

1. Event Publisher: The Data Generator

    ┌─────────────────┐    ┌──────────────┐    ┌─────────────────┐
    │  Go Publisher   │───▶│   Redpanda   │───▶│  Event Topics   │
    │                 │    │   (Kafka)    │    │                 │
    │ • GPU Temp Sim  │    │              │    │ • content       │
    │ • Configurable  │    │ • Multi-node │    │ • creator       │
    │ • Docker Ready  │    │ • Web UI     │    │ • temperature   │
    └─────────────────┘    └──────────────┘    └─────────────────┘

The first component simulates realistic event streams. While themed around content platforms, it's actually generating GPU temperature data - a perfect proxy for any time-series monitoring system. The publisher includes:

Smart Simulation Features:

- Configurable anomaly injection (5% abnormal readings by default)

- Multiple device simulation (scalable from 1 to N devices)

- Adjustable publishing intervals (milliseconds to minutes)

- Built-in Docker orchestration

Production-Ready Architecture:

    type TemperatureReading struct {
        DeviceID    string    `json:"device_id"`
        Temperature float64   `json:"temperature"`
        IsAbnormal  bool      `json:"is_abnormal"`
        Timestamp   time.Time `json:"timestamp"`
    }

The publisher demonstrates real-world patterns for event generation, including proper error handling, graceful shutdowns, and configurable parameters through environment variables.

2. LLM-Powered Deployment Service: The AI Operations Layer

This is where things get interesting. Instead of traditional deployment scripts or complex CI/CD pipelines, the system uses OpenAI GPT-4 to interpret natural language commands and automatically deploy Apache Flink jobs.

    ┌─────────────────┐    ┌──────────────┐    ┌─────────────────┐
    │   Chat Input    │───▶│  OpenAI GPT  │───▶│   Flink Jobs    │
    │                 │    │              │    │                 │
    │ "deploy content │    │ • Parse NL   │    │ • Auto Deploy   │
    │ event"          │    │ • Validate   │    │ • Docker/CLI    │
    │                 │    │ • Generate   │    │ • Monitoring    │
    └─────────────────┘    └──────────────┘    └─────────────────┘

Natural Language Processing Examples:

- "deploy content event processor" → Launches content stream processing job

- "I need creator analytics running" → Deploys creator event processor

- "start processing video events" → Spins up video content pipeline
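One way to turn free-form requests like these into something a deployer can act on is to ask the model for a small JSON object and decode it strictly. The sketch below assumes the model has been prompted to reply with "action" and "event_type" fields; that reply shape, the DeployCommand type, and the parseModelReply helper are all hypothetical and not taken from the project. The OpenAI call itself is left out.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// DeployCommand is a hypothetical shape for the model's structured reply.
type DeployCommand struct {
	Action    string `json:"action"`
	EventType string `json:"event_type"`
}

// parseModelReply decodes the raw reply text, rejects unknown fields, and
// normalizes case and whitespace so later checks can compare exact strings.
func parseModelReply(reply string) (DeployCommand, error) {
	var cmd DeployCommand
	dec := json.NewDecoder(strings.NewReader(reply))
	dec.DisallowUnknownFields()
	if err := dec.Decode(&cmd); err != nil {
		return DeployCommand{}, fmt.Errorf("reply is not the expected JSON: %w", err)
	}
	cmd.Action = strings.ToLower(strings.TrimSpace(cmd.Action))
	cmd.EventType = strings.ToLower(strings.TrimSpace(cmd.EventType))
	if cmd.Action == "" || cmd.EventType == "" {
		return DeployCommand{}, errors.New("reply is missing action or event_type")
	}
	return cmd, nil
}

func main() {
	cmd, err := parseModelReply(`{"action": "deploy", "event_type": "Creator"}`)
	if err != nil {
		fmt.Println("could not parse:", err)
		return
	}
	fmt.Printf("%s %s\n", cmd.Action, cmd.EventType) // prints "deploy creator"
}
```

Treating anything that fails to decode as an error, rather than guessing at intent, keeps a chatty or off-topic model reply from ever reaching the deployment step.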

Dual Deployment Strategies:

Docker-Based Deployment:

    func (d *DockerClient) deployFlinkJob(eventType string) (*JobInfo, error) {
        containerName := fmt.Sprintf("flink-%s-processor-%s",
            eventType, time.Now().Format("20060102-150405"))

        // Create container with automatic port assignment
        config := &container.Config{
            Image:        "flink-event-processor:latest",
            ExposedPorts: nat.PortSet{"8081/tcp": struct{}{}},
        }

        return d.createAndStartContainer(containerName, config)
    }

CLI-Based Deployment:

    func (f *FlinkClient) submitJob(eventType string) (*JobInfo, error) {
        cmd := exec.Command("flink", "run",
            "--jobmanager", f.config.JobManagerAddress,
            "--class", "com.eventprocessor.FlinkStreamingJob",
            f.config.JarPath,
            "--event-type", eventType)

        return f.executeWithMonitoring(cmd)
    }

The service provides intelligent error handling, automatic retry logic, and comprehensive monitoring integration.

3. Flink Event Processor: The Stream Processing Engine

The heart of the system is a sophisticated Apache Flink application that processes multiple event types in real-time. This isn't a toy example - it's a production-ready streaming application with proper error handling, exactly-once processing guarantees, and multiple sink strategies.

    ┌─────────────────┐    ┌──────────────┐    ┌─────────────────┐
    │  Kafka Source   │───▶│ Flink Stream │───▶│ Iceberg Tables  │
    │                 │    │              │    │                 │
    │ • Content Events│    │ • Transform  │    │ • ACID Trans    │
    │ • Creator Events│    │ • Validate   │    │ • Time Travel   │
    │ • Temp Events   │    │ • Enrich     │    │ • Schema Evolve │
    └─────────────────┘    └──────────────┘    └─────────────────┘

Event Processing Architecture:

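    import org.apache.flink.api.java.utils.ParameterTool;
    import org.apache.flink.streaming.api.CheckpointingMode;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    public class FlinkStreamingJob {
        public static void main(String[] args) throws Exception {
            // The deployment service passes --event-type on the command line
            String eventType = ParameterTool.fromArgs(args).getRequired("event-type");

            StreamExecutionEnvironment env = StreamExecutionEnvironment
                .getExecutionEnvironment();

            // Configure for production
            env.enableCheckpointing(30000); // 30-second checkpoints
            env.getCheckpointConfig()
                .setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

            // Create event-specific processors
            EventProcessorFactory factory = new EventProcessorFactory();
            BaseEventProcessor processor = factory.createProcessor(eventType);

            // Execute streaming pipeline
            processor.buildPipeline(env).execute();
        }
    }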

Multi-Event Support:

The system processes different event types with specialized handling:

    // Content Events
    public class ContentEvent {
        private String id;
        private String creatorId;
        private String title;
        private String contentType;
        private BigDecimal price;
        private Long viewCount;
        private Boolean isLocked;
        private List<String> tags;
        // ... additional fields
    }

    // Creator Events
    public class CreatorEvent {
        private String id;
        private String username;
        private String displayName;
        private Boolean isVerified;
        private Long subscriberCount;
        private BigDecimal monthlyPrice;
        private String category;
        // ... additional fields
    }

Advanced Storage Integration:

The system supports multiple storage strategies, from simple file sinks to full Apache Iceberg integration:

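    public class IcebergTableManager {
        // Both fields are assumed to be initialized elsewhere (e.g. in a constructor)
        private Catalog catalog;
        private TableLoader tableLoader;

        public Table createTable(String tableName, Schema schema) {
            // Catalog.buildTable takes the schema up front and returns a builder
            return catalog.buildTable(TableIdentifier.of("default", tableName), schema)
                .withPartitionSpec(PartitionSpec.builderFor(schema)
                    .day("created_at")
                    .build())
                .withProperty(TableProperties.FORMAT_VERSION, "2")
                .create();
        }

        public DataStreamSink<Void> createIcebergSink(DataStream<Row> stream, TableSchema tableSchema) {
            // FlinkSink (org.apache.iceberg.flink.sink) attaches the Iceberg writer to the stream
            return FlinkSink.forRow(stream, tableSchema)
                .tableLoader(tableLoader)
                .append();
        }
    }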

Key Technical Innovations

1. LLM-Powered Infrastructure Automation

The most fascinating aspect of this system is the natural language interface for infrastructure deployment. Instead of remembering complex CLI commands or navigating web UIs, operators can use conversational language:

    User: "deploy creator event"
    System: 🤖 Processing: deploy creator event
    📋 Parsed command: deploy creator event
    🚀 Submitting creator processing job...
    ✅ Successfully submitted creator processor job: flink-creator-processor-20240529-143022
    🌐 Monitor at: http://localhost:8081

This demonstrates a powerful pattern for the future of DevOps: using LLMs to abstract away the complexity of infrastructure management while maintaining full control and visibility.

2. Hybrid Deployment Architecture

The system supports both containerized and traditional CLI-based deployments, providing flexibility for different operational environments:

- Docker Deployment: Perfect for development, testing, and containerized production environments

- CLI Deployment: Integrates with existing Flink clusters and traditional operational workflows

3. Modern Data Lakehouse Patterns

The Apache Iceberg integration showcases modern data lakehouse architecture:

- ACID Transactions: Ensuring data consistency even with concurrent writers

- Schema Evolution: Adding new fields without breaking existing queries

- Time Travel: Querying historical states of data

- Partition Management: Automatic daily partitioning for optimal query performance

Performance and Scalability Considerations

The system is designed with production scalability in mind:

Flink Configuration:

    // Optimized for throughput
    env.setParallelism(4);
    env.getConfig().setLatencyTrackingInterval(1000);

    // Memory management
    Configuration config = new Configuration();
    config.setString("taskmanager.memory.process.size", "2g");
    config.setString("jobmanager.memory.process.size", "1g");

Kafka Integration:

    // High-throughput consumer configuration
    Properties properties = new Properties();
    properties.setProperty("fetch.min.bytes", "1048576"); // 1MB
    properties.setProperty("fetch.max.wait.ms", "500");
    properties.setProperty("max.partition.fetch.bytes", "10485760"); // 10MB

Monitoring and Observability:

The system includes comprehensive monitoring:

- Flink Web UI for job monitoring and metrics

- Structured logging with configurable levels

- Docker container health checks

- Kafka consumer lag monitoring
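Consumer lag is the least self-explanatory item on that list, so here is the underlying arithmetic: for each partition, lag is the log end offset minus the offset the consumer group has committed. The sketch assumes those offsets have already been fetched from the broker; the PartitionOffsets type, the totalLag helper, and the hard-coded numbers are illustrative only.

```go
package main

import "fmt"

// PartitionOffsets holds, for one partition, the newest offset in the log and
// the offset the consumer group has committed. In a real monitor these values
// would come from the broker's admin API.
type PartitionOffsets struct {
	LogEnd    int64
	Committed int64
}

// totalLag sums per-partition lag. Negative differences (which can appear
// when the two offsets are sampled at slightly different times) count as zero.
func totalLag(parts map[int32]PartitionOffsets) int64 {
	var lag int64
	for _, p := range parts {
		if d := p.LogEnd - p.Committed; d > 0 {
			lag += d
		}
	}
	return lag
}

func main() {
	parts := map[int32]PartitionOffsets{
		0: {LogEnd: 1200, Committed: 1150},
		1: {LogEnd: 980, Committed: 980},
		2: {LogEnd: 400, Committed: 310},
	}
	fmt.Println("total lag:", totalLag(parts)) // prints "total lag: 140"
}
```

A steadily growing total is the signal to watch: it means the Flink job is consuming more slowly than the publisher is producing.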

Real-World Applications

While the "OnlyFans" theming is obviously humorous, the underlying architecture patterns are applicable to numerous real-world scenarios:

Content Platforms:

- Video streaming analytics

- User engagement tracking

- Content recommendation engines

- Creator monetization systems

IoT and Monitoring:

- Sensor data processing (the GPU temperature simulation)

- Infrastructure monitoring

- Anomaly detection systems

- Predictive maintenance

Financial Services:

- Transaction processing

- Risk assessment

- Fraud detection

- Regulatory reporting

E-commerce:

- User behavior analytics

- Inventory management

- Price optimization

- Recommendation systems

Lessons Learned and Technical Insights

1. LLM Integration Complexity

Integrating LLMs into operational systems requires careful consideration of:

- Error Handling: What happens when the LLM misinterprets a command?

- Cost Management: OpenAI API costs can accumulate quickly in production

- Latency: Each LLM call adds 1-3 seconds to deployment workflows

- Security: Ensuring the LLM can't be tricked into executing malicious commands
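On the security point, one defensive pattern is to never let model output reach a command line unchecked: compare the parsed event type against a fixed allowlist, and build the command as an argument list rather than a shell string. The sketch below assumes the allowlist matches the three topics from the publisher diagram; buildSubmitCommand and the jar file name are hypothetical, and the JobManager and class flags are omitted for brevity.

```go
package main

import (
	"fmt"
	"os/exec"
)

// allowedEventTypes is the fixed set of values the service will deploy.
// Anything else, however the model phrased it, is refused.
var allowedEventTypes = map[string]bool{
	"content":     true,
	"creator":     true,
	"temperature": true,
}

// buildSubmitCommand validates eventType and returns the command to run.
// exec.Command passes each argument directly to the program without a shell,
// so characters like ";" or "|" are never interpreted.
func buildSubmitCommand(jarPath, eventType string) (*exec.Cmd, error) {
	if !allowedEventTypes[eventType] {
		return nil, fmt.Errorf("event type %q is not on the allowlist", eventType)
	}
	return exec.Command("flink", "run", jarPath, "--event-type", eventType), nil
}

func main() {
	for _, eventType := range []string{"creator", "creator; rm -rf /"} {
		cmd, err := buildSubmitCommand("event-processor.jar", eventType)
		if err != nil {
			fmt.Println("rejected:", err)
			continue
		}
		// The command is only printed here, not executed.
		fmt.Println("would run:", cmd.Args)
	}
}
```

The same check also covers the misinterpretation case: if the model hallucinates an event type that doesn't exist, the request fails fast with a clear message instead of launching a broken job.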

2. Multi-Language Microservices

The combination of Go (for the LLM service) and Java (for Flink processing) demonstrates the power of polyglot architectures:

- Go: Excellent for HTTP services, concurrent operations, and simple deployment

- Java: Rich ecosystem for data processing, mature Flink integration, robust type system

3. Stream Processing Design Patterns

The Flink application showcases several important patterns:

- Factory Pattern: For creating event-specific processors

- Strategy Pattern: For different sink implementations (files vs. Iceberg)

- Builder Pattern: For configuring complex streaming pipelines

Future Enhancements and Roadmap

The system provides a solid foundation for several interesting extensions:

1. Advanced LLM Capabilities

- Multi-step Deployments: "Deploy a content processing pipeline with anomaly detection"

- Resource Optimization: LLM-driven resource allocation based on workload patterns

- Troubleshooting Assistant: AI-powered diagnosis of failing jobs

2. Enhanced Stream Processing

- Machine Learning Integration: Real-time feature engineering and model serving

- Complex Event Processing: Pattern detection across multiple event streams

- Auto-scaling: Dynamic parallelism adjustment based on throughput

3. Operational Excellence

- GitOps Integration: Version control for deployment configurations

- Multi-tenancy: Support for multiple teams and environments

- Advanced Monitoring: Custom metrics and alerting integrations

Conclusion: From Meme to Modern Architecture

What started as a sarcastic Reddit comment evolved into a legitimate exploration of cutting-edge data engineering patterns. The system demonstrates several important trends in modern data infrastructure:

- AI-Powered Operations: Using LLMs to simplify complex operational tasks

- Event-Driven Architecture: Building resilient, scalable systems around event streams

- Modern Data Lakehouse: Combining the flexibility of data lakes with the reliability of data warehouses

- Polyglot Microservices: Choosing the right tool for each specific task

The technical implementation showcases production-ready patterns while maintaining the experimental spirit needed to explore emerging technologies. It proves that sometimes the best innovations come from the most unexpected inspirations.

Whether you're building content platforms, IoT systems, or financial services, the architectural patterns demonstrated here provide a solid foundation for modern real-time data processing systems. And if nothing else, it's a reminder that great engineering can emerge from the most unlikely sources - even Reddit trolls.

Technologies Used:

- Apache Flink 1.17.1 (Stream Processing)

- Apache Iceberg 1.3.1 (Data Lakehouse)

- Apache Kafka / Redpanda (Event Streaming)

- OpenAI GPT-4 (Natural Language Processing)

- Go 1.21+ (LLM Service)

- Java 11+ (Stream Processing)

- Docker & Docker Compose (Orchestration)

Repository Links:

Built with ❤️ and a healthy sense of humor about Reddit comments.
