For many years, improving CPU performance meant increasing clock speed → allowing more cycles per second. But today, we’ve reached practical limits in how fast we can push frequency due to power, heat, and physical constraints.

As a result, modern CPU design focuses less on running faster and more on doing more per cycle. To achieve this, processors use three key architectural techniques:

- Superscalar execution

- SIMD (Single Instruction, Multiple Data)

- Multicore parallelism

Together, these allow a CPU to complete multiple operations in a single clock cycle → making better use of each tick without increasing the clock rate itself.

Before diving into these techniques, it’s important to understand CPU pipelining, the foundation of all modern CPU execution. It is covered in a separate article, "CPU Pipelining: How Modern Processors Execute Instructions Faster."

Superscalar: Executing Multiple Instructions Per Cycle

A superscalar processor can issue and execute multiple instructions within a single clock cycle. It does this by replicating execution units (such as ALUs, FPUs, and load/store units), as illustrated in the diagram above, and by adding scheduling logic that performs several key functions:

- Analyzing Dependencies Between Instructions

- Scheduling Independent Instructions Across Execution Units

- Register Renaming to Eliminate False Dependencies

- Reordering Instructions to Hide Stalls

This approach exploits instruction-level parallelism (ILP) → the presence of independent instructions within a single thread that can be executed simultaneously.

Superscalar Scheduling in Action

Consider the following simple code snippet:

```c
int a = x + y;  // Instruction 1
int b = m * n;  // Instruction 2
a = p + q;      // Instruction 3 (reuses 'a')
```

Here’s how a 2-way superscalar CPU handles this:

- Instruction 1 and Instruction 2 are independent and can be issued in parallel, assuming two ALUs are available.

- Instruction 3 writes to `a` again. Although it doesn’t depend on Instruction 1, reusing the name `a` creates a false write-after-write (WAW) dependency: without renaming, the two writes would have to complete in program order so that `a` ends up holding `p + q`.

  - To resolve this, the CPU uses register renaming to map each version of `a` to a different physical register:

    - `a` (from `x + y`) → register P1
    - `b` → register P2
    - `a` (from `p + q`) → register P3

  - This allows Instruction 1 and Instruction 3 to be issued out of order or in parallel, without waiting on one another.

- If, for example, `x + y` stalls (e.g., because loading `x` or `y` misses in the cache), the CPU can reorder execution and run Instruction 2 or Instruction 3 first → keeping the pipeline active.

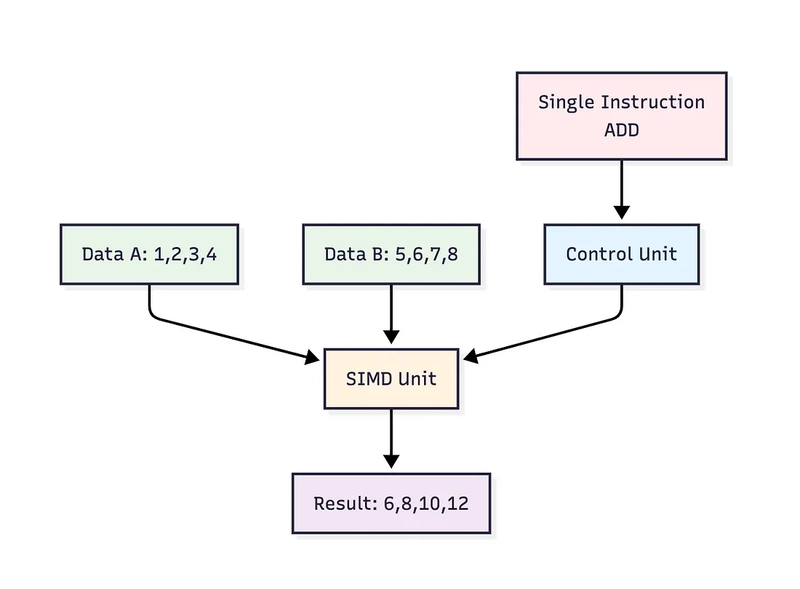

SIMD: Applying One Instruction to Multiple Data Elements

SIMD (Single Instruction, Multiple Data) allows a single instruction to operate on multiple values at once. This is ideal for vector math, graphics, or matrix processing, where the same operation repeats across arrays. This exploits data-level parallelism (DLP) → applying the same instruction to many data points.

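```c
// Element-wise addition: the same operation repeated on every element.
// Written as a scalar loop; a vectorizing compiler (or hand-written SIMD
// code) can perform all four additions with one vector instruction.
float a[4] = {1.0f, 2.0f, 3.0f, 4.0f};
float b[4] = {10.0f, 20.0f, 30.0f, 40.0f};
float c[4];

for (int i = 0; i < 4; i++) {
    c[i] = a[i] + b[i];
}
```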

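With 128-bit SIMD registers, which hold four 32-bit floats each, a single vector add instruction can perform all four additions at once instead of four separate scalar adds.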

Multicore: Running Multiple Threads in Parallel

A multicore processor has multiple independent cores, each capable of executing its own thread. Threads may come from the same program (multithreading) or different programs (multiprocessing). This exploits thread-level parallelism (TLP) → running independent streams of instructions in parallel.

All Three Combined: Parallelism at Every Level

Modern CPUs combine superscalar, SIMD, and multicore techniques to maximize throughput per cycle. This allows multiple threads to run across cores, with each core executing multiple instructions per cycle, and each instruction operating on multiple data values.

Example:

A CPU with:

- 4 cores (multicore),

- each capable of issuing 4 instructions per cycle (superscalar),

- and supporting 256-bit SIMD (processing 8 floats at once)

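can, in theory, perform up to: 4 cores × 4 instructions × 8 data elements = 128 operations per cycle.

This is a peak figure: it assumes every instruction issued in every cycle is an 8-wide SIMD operation. Real cores usually have fewer SIMD execution units than issue slots, and real code also needs loads, stores, and branches, so sustained throughput is lower.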

If you have any feedback on the content, suggestions for improving the organization, or topics you’d like to see covered next, feel free to share → I’d love to hear your thoughts!
