This can then be converted into an integer between 0 and 15 in the obvious way. Do this for all the pixels, and the result can then be interpreted as a greyscale image, with 16 levels. Ok, but this conversion necessarily depends on the order in which the neighboring pixels (above, left, below, right) are taken. So why does the documentation say that the "uniform" setting is rotation invariant?

What you're describing is the way that method='default' works. The method='default' approach assigns a weight of 2 ** i to each point, so it matters what position the point is in. You're correct that this is not invariant to order. However, method='uniform' does something different.
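To make the order dependence of method='default' concrete, here is a minimal sketch. The helper name `default_code` and the example pattern are made up for illustration; only the 2 ** i weighting comes from the description above.

```python
def default_code(bits):
    """Weight bit i by 2 ** i, following the description of method='default'."""
    return sum(bit * 2 ** i for i, bit in enumerate(bits))


# Hypothetical neighbourhood: exactly one neighbour is >= the centre pixel.
pattern = [1, 0, 0, 0]
# The same neighbourhood with the sampling order rotated by one step.
rotated = pattern[1:] + pattern[:1]

code_a = default_code(pattern)
code_b = default_code(rotated)
print(code_a, code_b)  # the two codes differ although the neighbourhood is the same
```

The two codes differ only because the single set bit landed in a different position, which is exactly the order dependence raised in the question.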

Here is what method='uniform' does:

- First, compare each sampled point to the center point. For each sampled point, record a value in the signed_texture array: 1 if the point is greater than or equal to the center point, and 0 otherwise.

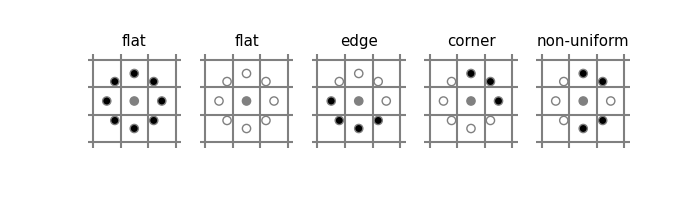

- Check whether the point is uniform. A point is uniform if there are fewer than 3 changes (that is, at most 2) between 0 and 1 while iterating over the signed_texture array.

- If the point is uniform, output the sum of the signed_texture array. This will be a number between 0 and P inclusive.

- Otherwise, if the point is non-uniform, output P + 1.

Since the number of transitions between 0 and 1 is rotation invariant, and the count of how many points are greater than the center point is also rotation invariant, the result is rotation invariant.
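As a quick sanity check of this argument, the following sketch applies the uniform rule to every rotation of every 4-bit pattern and confirms that the output never changes. The names `uniform_code` and `rotations` are illustrative, not part of skimage. The transition count scans adjacent entries without wrapping around, which is an assumption chosen to match the simplified program in this answer.

```python
from itertools import product


def uniform_code(bits):
    """Sum of the bits if there are at most 2 transitions, else P + 1."""
    P = len(bits)
    # Count 0/1 changes between adjacent entries (no wrap-around).
    changes = sum(bits[i] != bits[i + 1] for i in range(P - 1))
    return sum(bits) if changes <= 2 else P + 1


def rotations(bits):
    """All cyclic rotations of a list of bits."""
    return [bits[k:] + bits[:k] for k in range(len(bits))]


# Two hand-picked examples: one uniform pattern, one non-uniform pattern.
uniform_codes = {uniform_code(r) for r in rotations([1, 1, 0, 0])}
non_uniform_codes = {uniform_code(r) for r in rotations([1, 0, 1, 0])}

# Exhaustive check over all 16 patterns for P = 4.
all_invariant = all(
    len({uniform_code(r) for r in rotations(list(bits))}) == 1
    for bits in product([0, 1], repeat=4)
)
print(uniform_codes, non_uniform_codes, all_invariant)
```

Each set contains a single value, meaning every rotation of the pattern maps to the same output.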

Below is a program that computes LBP using a simplified, pure-Python algorithm.

    from skimage import data
    from skimage.feature import local_binary_pattern
    import numpy as np
    import matplotlib.pyplot as plt

    plt.gray()
    image = data.brick()


    def get_texture_values(image, r, c, rows, cols, offsets):
        """Given an image and a central point, sample points around that point."""
        texture = []
        for offset in offsets:
            offset_r, offset_c = offset
            offset_r_abs = offset_r + r
            offset_c_abs = offset_c + c
            if not (0 <= offset_r_abs < rows):
                texture.append(0)
            elif not (0 <= offset_c_abs < cols):
                texture.append(0)
            else:
                texture.append(image[offset_r_abs, offset_c_abs])
        return texture


    def uniform(signed_texture, P):
        # This gets run once per pixel
        changes = 0
        for i in range(P - 1):
            changes += (signed_texture[i] - signed_texture[i + 1]) != 0
        result = 0
        if changes <= 2:
            for i in range(P):
                result += signed_texture[i]
        else:
            result = P + 1
        return result


    def default(signed_texture, P):
        # This gets run once per pixel
        result = 0
        for i in range(P):
            result += signed_texture[i] * 2 ** i
        return result


    def lbp_simplified(image, method='uniform'):
        rows, cols = image.shape
        P = 4  # Note: if you change this you must change offsets as well
        # Note: the actual local_binary_pattern allows fractional offsets
        # by doing bilinear interpolation. For simplicity, this has been
        # ignored.
        offsets = [
            [0, 1],
            [-1, 0],
            [0, -1],
            [1, 0],
        ]
        ret = np.zeros_like(image, dtype='float64')
        method_func = {
            'uniform': uniform,
            'default': default,
        }[method]
        for r in range(rows):
            for c in range(cols):
                value_here = image[r, c]
                texture = get_texture_values(image, r, c, rows, cols, offsets)
                signed_texture = [int(texture_value >= value_here) for texture_value in texture]
                ret[r, c] = method_func(signed_texture, P)
        return ret


    # Check if lbp_simplified(image, method='uniform') is correct
    reference_output = local_binary_pattern(image, P=4, R=1, method='uniform')
    assert np.allclose(lbp_simplified(image, method='uniform'), reference_output)

    # Plot uniform
    plt.title("LBP uniform")
    plt.imshow(lbp_simplified(image, method='uniform'))
    plt.colorbar()
    plt.show()

    # Check if lbp_simplified(image, method='default') is correct
    reference_output = local_binary_pattern(image, P=4, R=1, method='default')
    assert np.allclose(lbp_simplified(image, method='default'), reference_output)

    # Plot default
    plt.title("LBP default")
    plt.imshow(lbp_simplified(image, method='default'))
    plt.colorbar()
    plt.show()

The full details of how this works can be found here.

So why does local binary pattern return an image?

Essentially, this program evaluates default() or uniform() on each individual pixel, which yields one number per pixel. Collected together, these numbers form an array of the same size as the original image, and that array can be interpreted as an image.
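The same idea can be seen on a tiny example. This is only a sketch: the 3x3 values, the helper names, and the stand-in per-pixel function (a count of 4-neighbours that are >= the centre, treating out-of-bounds samples as 0) are chosen for illustration and are not the skimage implementation.

```python
def count_ge_neighbours(image, r, c):
    """Stand-in per-pixel code: how many 4-neighbours are >= the centre pixel."""
    rows, cols = len(image), len(image[0])
    centre = image[r][c]
    count = 0
    for dr, dc in [(0, 1), (-1, 0), (0, -1), (1, 0)]:
        rr, cc = r + dr, c + dc
        # Out-of-bounds samples are treated as 0 (an assumption for this sketch).
        value = image[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0
        count += int(value >= centre)
    return count


def per_pixel(image, func):
    """Evaluate func at every pixel and collect the results in the same layout."""
    return [[func(image, r, c) for c in range(len(image[0]))]
            for r in range(len(image))]


# Hypothetical 3x3 greyscale image.
tiny_image = [
    [5, 9, 1],
    [4, 6, 7],
    [2, 3, 8],
]
codes = per_pixel(tiny_image, count_ge_neighbours)
print(codes)  # one number per pixel, laid out like the input
```

Because one number is produced for each pixel, the output has the same number of rows and columns as the input and can be displayed like any other greyscale image.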