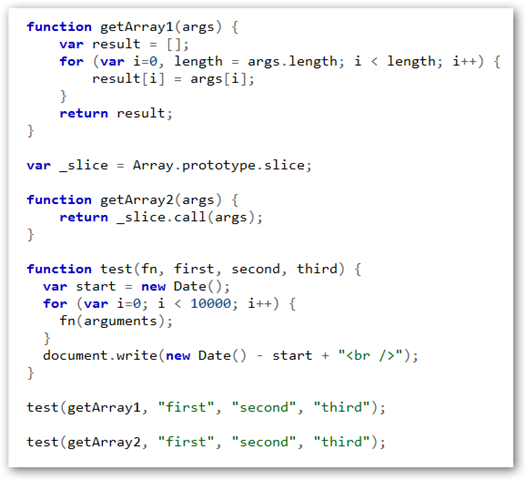

I was redirected here from another question about shallow cloning Arrays, and after finding out that most of the links are either dead, outdated, or broken, figured I'd post a solution that you can just run in your own environment.

The following code should semi-accurately measure how long it takes to clone an array with each approach. You can run it directly in your browser's developer console or in Node.js. The latest version of it can always be found here.

    function benchTime(cycles, timeLimit, fnSetup, ...fnProcess) {
        // Calls fn repeatedly until timeLimit (in ms) has elapsed, then reports the throughput.
        function measureCycle(timeLimit, fn, args) {
            let tmp = null;
            let end, start = performance.now();
            let iterations = 0;

            // Run until we exceed the time limit for one cycle.
            do {
                tmp = fn.apply(null, args);
                end = performance.now();
                ++iterations;
            } while ((end - start) <= timeLimit);
            tmp = undefined;

            // Build a result object and return it.
            return {
                "iterations": iterations,
                "start": start,
                "end": end,
                "duration": end - start,
                "opsPerSec": (iterations / (end - start)) * 1000.0,
            };
        }

        console.log(`Measuring ${fnProcess.length} functions...`);
        let params = fnSetup();
        //console.log("Setup function returned:", params);

        // Perform this for every function passed.
        for (let fn of fnProcess) {
            let results = [];
            console.groupCollapsed(`${fn.name}: Running for ${cycles} cycles...`);

            // Perform this N times.
            for (let cycle = 0; cycle < cycles; cycle++) {
                let result = {
                    "iterations": Number.NaN,
                    "start": Number.NaN,
                    "end": Number.NaN,
                    "duration": Number.NaN,
                    "opsPerSec": Number.NaN,
                };
                try {
                    result = measureCycle(timeLimit, fn, params);
                    results.push(result);
                } catch (ex) {
                    console.error(`${fn.name}:`, ex);
                    break;
                }
                console.log(`Cycle ${cycle + 1}/${cycles}: ${result.iterations} iterations, ${result.duration} ms, ${result.opsPerSec} ops/s`);
            }

            // If we have more than 3 repeats, drop slowest and fastest as outliers.
            if (results.length > 3) {
                console.log("Dropping slowest and fastest result.");
                // The comparator must return a number, not a boolean, for the sort to be reliable.
                results = results
                    .sort((a, b) => (a.end - a.start) - (b.end - b.start))
                    .slice(1, -1);
            }
            console.groupEnd();

            // Merge all results for the final average.
            let iterations = 0;
            let totalTime = 0;
            let opsPerSecMin = +Infinity;
            let opsPerSecMax = -Infinity;
            let opsPerSec = 0;
            for (let result of results) {
                iterations += result.iterations;
                totalTime += result.duration;
                opsPerSec += result.opsPerSec;
                if (opsPerSecMin > result.opsPerSec) {
                    opsPerSecMin = result.opsPerSec;
                }
                if (opsPerSecMax < result.opsPerSec) {
                    opsPerSecMax = result.opsPerSec;
                }
            }
            let operations = opsPerSec / results.length; //iterations / totalTime;
            // Note: this is the range (max - min) of the kept cycles, not a statistical variance.
            let operationVariance = opsPerSecMax - opsPerSecMin;
            console.log(`${fn.name}: ${(operations).toFixed(2)}±${(operationVariance).toFixed(2)} ops/s, ${iterations} iterations over ${totalTime} ms.`);
        }
        console.log("Done.");
    }

    // The candidates: each takes an array and returns a clone of it.
    function spread(arr) { return [...arr]; }
    function spreadNew(arr) { return new Array(...arr); }
    function arraySlice(arr) { return arr.slice(); }
    function arraySlice0(arr) { return arr.slice(0); }
    function arrayConcat(arr) { return [].concat(arr); }
    function arrayMap(arr) { return arr.map(i => i); }
    function objectValues(arr) { return Object.values(arr); }
    function objectAssign(arr) { return Object.assign([], arr); }
    function json(arr) { return JSON.parse(JSON.stringify(arr)); }
    function loop(arr) { const a = []; for (let val of arr) { a.push(val); } return a; }

    // 10 cycles of 1000 ms each per function, on an array of 16384 random numbers.
    benchTime(
        10, 1000,
        () => {
            let arr = new Array(16384);
            for (let a = 0; a < arr.length; a++) { arr[a] = Math.random(); }
            return [arr];
        },
        spread,
        spreadNew,
        arraySlice,
        arraySlice0,
        arrayConcat,
        arrayMap,
        objectValues,
        objectAssign,
        json,
        loop
    );
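Before trusting the timings, it can help to confirm that each candidate really returns a shallow copy. The following is a minimal sketch and not part of the benchmark above: `isShallowCloneOf` is a hypothetical helper name, and three of the candidates are repeated inline so that the snippet runs on its own.

```javascript
// Hypothetical helper: true if `copy` is a different array holding the very same elements.
function isShallowCloneOf(copy, original) {
    return Array.isArray(copy)
        && copy !== original
        && copy.length === original.length
        && copy.every((value, index) => Object.is(value, original[index]));
}

const inner = { id: 1 };
const source = [inner, 2, "three"];

// A few of the candidates, repeated here only to keep the snippet self-contained.
const candidates = {
    slice: (arr) => arr.slice(),
    spread: (arr) => [...arr],
    json: (arr) => JSON.parse(JSON.stringify(arr)),
};

const checks = {};
for (const [name, clone] of Object.entries(candidates)) {
    checks[name] = isShallowCloneOf(clone(source), source);
    console.log(`${name}: ${checks[name] ? "shallow clone" : "not a shallow clone"}`);
}
```

The JSON round trip does not pass this check because it rebuilds nested objects, so it is really a deep copy (and only works for JSON-serializable data). Its timing is therefore not directly comparable with the others.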

I've run this for a few sizes, but here's a copy of the 16384 element data:

| Test | NodeJS v20.12.2 | Firefox v127.0b2 | Edge 124.0.2478.97 |
|---|---|---|---|
| spread | 13.180±1.022 ops/ms | 7.436±1.110 ops/ms | 3.321±0.239 ops/ms |
| spreadNew | 4.727±0.532 ops/ms | 1.010±0.022 ops/ms | 21.045±2.982 ops/ms |
| arraySlice | 12.912±2.127 ops/ms | 11046.737±237.575 ops/ms | 494.359±32.726 ops/ms |
| arraySlice0 | 13.192±0.477 ops/ms | 10665.299±500.553 ops/ms | 492.209±66.837 ops/ms |
| arrayConcat | 16.590±0.656 ops/ms | 7923.657±224.637 ops/ms | 476.975±112.053 ops/ms |
| arrayMap | 6.542±0.301 ops/ms | 32.960±3.743 ops/ms | 52.127±3.472 ops/ms |
| objectValues | 4.339±0.111 ops/ms | 10840.392±619.567 ops/ms | 115.369±3.217 ops/ms |
| objectAssign | 0.270±0.013 ops/ms | 10471.860±202.291 ops/ms | 83.135±3.439 ops/ms |
| json | 0.205±0.039 ops/ms | 4.014±1.679 ops/ms | 7.730±0.319 ops/ms |
| loop | 6.138±0.287 ops/ms | 6.727±1.296 ops/ms | 27.691±1.217 ops/ms |

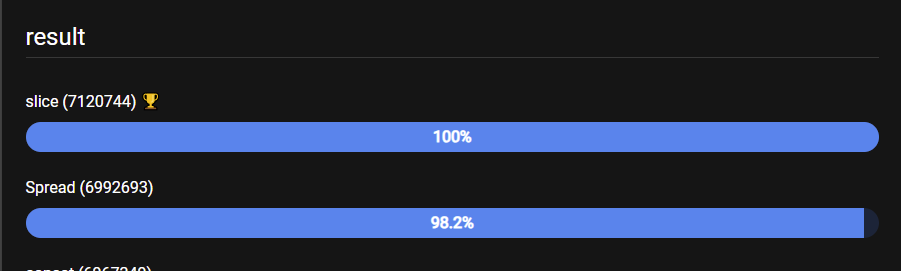

Overall, the following appears to be true:

- `array.slice()` (arraySlice), `array.slice(0)` (arraySlice0) and `[].concat(array)` (arrayConcat) are equivalently fast. arraySlice appears to be minimally faster.
- In Chromium-based browsers only, `Object.assign([], array)` (objectAssign) is faster with massive arrays, but it has the downside of skipping empty slots (holes), so a sparse array can come out shorter than the original.
- The worst ways overall to shallow-clone arrays are `new Array(...array)` (spreadNew), `array.map(i => i)` (arrayMap), `Object.values(array)` (objectValues), `Object.assign([], array)` (objectAssign), `JSON.parse(JSON.stringify(array))` (json) and a manual loop (loop): `let arr = new Array(); for (let e of array) { arr.push(e); } return arr;`.
- Firefox appears to be optimizing in a way that outright looks like cheating. I have not found a way to make Firefox's numbers look normal.
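The caveat about missing entries can be checked directly. The sketch below assumes that the entries in question are empty slots (holes) rather than stored `undefined` values. It compares how some of the approaches treat a sparse array; `describe` and `report` are names made up for this illustration.

```javascript
// Four slots: index 0 holds 1, index 2 holds an explicit undefined,
// indices 1 and 3 are empty slots (holes).
const sparse = new Array(4);
sparse[0] = 1;
sparse[2] = undefined;

// Hypothetical helper: summarize a clone by its length and how many indices actually exist.
const describe = (arr) => ({
    length: arr.length,
    ownIndices: Object.keys(arr).length,
});

const report = {
    slice: describe(sparse.slice()),                      // holes stay holes
    spread: describe([...sparse]),                        // holes become undefined
    objectAssign: describe(Object.assign([], sparse)),    // trailing hole is lost
    objectValues: describe(Object.values(sparse)),        // all holes are dropped
    json: describe(JSON.parse(JSON.stringify(sparse))),   // holes and undefined become null
};

console.table(report);
```

None of this matters for the dense array of random numbers used in the benchmark, but it does mean the approaches are not interchangeable for arbitrary input.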

Disclaimer: These results are from my personal system, and I reserve the right to have made an error in my code.
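One thing that may be worth ruling out, offered as an assumption rather than a diagnosis: the benchmark discards every clone, so an engine could in principle skip or defer part of the work. Below is a minimal sketch of a measuring loop that writes to and reads from each clone so that the result stays observable. `measureObserved` is a hypothetical name, and I have not verified that this changes the numbers in any browser.

```javascript
// Hypothetical variant of the measuring loop: each clone is modified and read.
function measureObserved(timeLimit, fn, arr) {
    let checksum = 0;
    let iterations = 0;
    const start = performance.now();
    let end;
    do {
        const copy = fn(arr);
        copy[0] = iterations;               // a write, so the copy cannot simply alias the source
        checksum += copy[copy.length - 1];  // a read, so the result is actually used
        end = performance.now();
        ++iterations;
    } while ((end - start) <= timeLimit);
    return { iterations, checksum, opsPerMs: iterations / (end - start) };
}

// Usage: 50 ms of slice() on 16384 random numbers.
const data = Array.from({ length: 16384 }, () => Math.random());
const observed = measureObserved(50, (arr) => arr.slice(), data);
console.log(`${observed.opsPerMs.toFixed(3)} ops/ms over ${observed.iterations} iterations`);
```

The extra write and read add a small constant cost per iteration, so the absolute numbers are not comparable with the table above. Only the relative ordering between approaches would be of interest.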

    const getInitialArray = () => { return [[1, 2], [3, 4]]; };