AI chatbots have taken the world by storm, and leading the charge is OpenAI’s ChatGPT. But as powerful as it is, ChatGPT comes with limitations: it runs in the cloud, raises privacy concerns, and isn’t open source.

Whether you're a developer looking to run AI locally, a researcher experimenting with models, or someone just tired of hitting usage limits, you're in luck. The open-source AI world has been booming with local alternatives that give you full control over your chatbot experience—offline, private, and hackable.

In this article, we’ll explore 10 of the best open-source ChatGPT alternatives you can run 100% locally on your own machine or server.

Why Run AI Locally?

So… why go through the trouble of running a ChatGPT-style model on your own machine when cloud services already work out of the box?

Here are a few good reasons developers and AI enthusiasts are making the switch to local:

- Full Data Privacy: When you run locally, your inputs, outputs, and prompts stay entirely on your device. That means no accidental data leaks, no third-party analytics, and no vague “we may use your input to improve our models” clauses.

- Offline Access: No internet? No problem. Local tools let you generate responses, code, or content even if you’re on a plane, off-grid, or working in a secure environment.

- Open Source and Hackability: Most of the tools on this list are completely open source. That means you can read the code, fork the repo, make changes, and even contribute back.

- Faster Iteration for Developers: If you’re building something on top of a language model, working locally can tighten your development loop, since there are no rate limits, API keys, or per-request charges between you and the model while you test.

- Cost Savings Over Time: Local models may require some upfront setup or hardware resources, but if you're frequently using LLMs, running them locally can save serious money in the long run—especially compared to high-usage tiers on commercial platforms.

Open-Source ChatGPT Alternatives

1. Gaia by AMD

URL: https://github.com/amd/gaia

Gaia is a brand-new, open-source project from AMD that lets you run LLMs entirely on your Windows PC, with or without specialized hardware like Ryzen AI chips. It stands out for its straightforward setup and built-in RAG (Retrieval-Augmented Generation) capabilities—ideal if you want models that can reason over your local data.

Key Features:

- Runs fully locally using the open-source Lemonade SDK (from the ONNX TurnkeyML project), with performance optimizations for Ryzen AI processors.

- Includes four built-in agents:

  - Simple Prompt Completion, for basic interactions

  - Chaty, a standard chat agent

  - Clip, for YouTube Q&A

  - Joker, for lighthearted fun

- RAG support via a local vector database, enabling grounded, context-aware responses

- Two installer options:

  - Mainstream installer, for any Windows PC

  - Hybrid installer, optimized for Ryzen AI hardware

- Because nothing leaves your machine, it offers stronger data privacy, low latency, and true offline operation.

Ideal for: Windows users who want a capable, offline LLM assistant, especially those with Ryzen AI hardware, though it also runs on any modern Windows PC.

2. Ollama + LLaMA / Mistral / Gemma

URL: https://ollama.com

Ollama is a sleek local runtime for large language models (LLMs) such as Meta’s LLaMA, Mistral, and Google’s Gemma. It hides much of the complexity of running large models behind a Docker-like CLI: `ollama pull` downloads a model, and `ollama run` starts an interactive chat with it.

Why it's great:

- Simple CLI and desktop interface

- Supports many open models (LLaMA, Mistral, Gemma, Code Llama, and more)

- Fast local inference, even on MacBooks with Apple Silicon

Ideal for: Anyone wanting a no-fuss way to run LLMs locally
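Beyond the CLI, Ollama also serves a local REST API that your own scripts can call. Here is a minimal sketch using only Python’s standard library, assuming Ollama is listening on its default address (`http://localhost:11434`) and a model has already been pulled. The model name `llama3` and the helper names are placeholders for illustration, not anything this article prescribes.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if Ollama runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Build a non-streaming request body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }


def chat(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send a single-turn chat request and return the assistant's reply text."""
    body = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    # Non-streaming /api/chat responses carry the answer under message.content.
    return reply["message"]["content"]
```

For example, once `ollama pull llama3` has finished, `chat("llama3", "Explain RAG in one sentence.")` returns the model’s answer as a plain string. Turning streaming off keeps the sketch short; interactive apps usually leave it on so text appears token by token.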

3. LM Studio

URL: https://lmstudio.ai

License: Proprietary (free to use, but not open source)

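LM Studio is a local GUI application for chatting with LLMs. It can search for and download GGUF models from Hugging Face (including popular community quantizations such as TheBloke’s) and, once a model is downloaded, runs inference entirely locally with no internet connection required.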

Why it's great:

- Beautiful and intuitive desktop UI

- Easy to import models via drag-and-drop

- Local history and multi-model switching

Ideal for: Non-technical users, developers who want a GUI without the terminal

4. LocalAI

URL: https://github.com/mudler/LocalAI

LocalAI is like OpenAI’s API, but fully local. It provides a drop-in, OpenAI-compatible REST API, so you can run your own GPT-like model and use it in apps built against the OpenAI API, typically by pointing them at a different base URL.

Why it's great:

- OpenAI-compatible API, so existing OpenAI client code works with little or no change

- Easily deployable with Docker

- Runs GGUF and ONNX models

Ideal for: Developers looking to integrate LLMs into their apps with full control
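Because LocalAI speaks the OpenAI wire format, a client written for OpenAI’s Chat Completions endpoint only needs a different base URL. The sketch below assumes LocalAI is listening on `http://localhost:8080` (its usual default) with no API key configured, and that a model has been set up under the hypothetical name `my-local-model`.

```python
import json
import urllib.request

# Assumption: LocalAI on its default port; the OpenAI-style API lives under /v1.
BASE_URL = "http://localhost:8080/v1"


def completion_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style Chat Completions request for any compatible server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "my-local-model", base_url: str = BASE_URL) -> str:
    """Send the request and return the first choice's message text."""
    # "my-local-model" is a hypothetical name; use whatever model you configured.
    with urllib.request.urlopen(completion_request(base_url, model, prompt)) as response:
        data = json.load(response)
    # OpenAI-compatible responses put the reply in choices[0].message.content.
    return data["choices"][0]["message"]["content"]
```

Nothing in `completion_request` is LocalAI-specific. Pointing the same code at a hosted provider is mostly a matter of changing `base_url` and adding an `Authorization` bearer-token header, which is what makes the drop-in claim useful in practice.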

5. Text Generation Web UI (oobabooga)

URL: https://github.com/oobabooga/text-generation-webui

This tool is a Swiss Army knife for running local LLMs with a full web interface, plugin support, chat history, and more. It supports models like Vicuna, Mistral, Falcon, and others in multiple formats.

Why it's great:

- Feature-rich with chat, instruction, and roleplay modes

- Plugin system for extensions like voice-to-text, memory, and embeddings

- Community-driven and highly customizable

Ideal for: Advanced users and tinkerers

6. PrivateGPT

URL: https://github.com/zylon-ai/private-gpt

PrivateGPT is built for anyone who wants a fully offline AI chatbot that can even answer questions about their own documents without an internet connection. It combines local LLMs with RAG (Retrieval-Augmented Generation): relevant passages are retrieved from your files and passed to the model as context.

Why it's great:

- Totally private, with no API calls

- Drop in your PDFs or DOCs and ask questions

- Great for legal, academic, and corporate users

Ideal for: Data-sensitive users, legal teams, researchers
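The RAG idea behind PrivateGPT boils down to three steps: split documents into chunks, find the chunks most similar to the question, and paste them into the prompt. The toy sketch below illustrates only that retrieval-and-prompting step. It uses bag-of-words cosine similarity as a stand-in for the learned embeddings and vector database a real tool would use, and all function names are hypothetical; it is not PrivateGPT’s implementation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts: a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda chunk: cosine(q, tokenize(chunk)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Ground the model by placing the retrieved context ahead of the question."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )
```

With the chunks `["The invoice is due on March 3.", "Our office closes at 6 pm."]`, asking "When is the invoice due?" ranks the invoice sentence first, so that is the context the local model sees. Swapping `tokenize` for a real embedding model and the sorted list for a vector index is what turns this toy into something closer to a production RAG pipeline.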

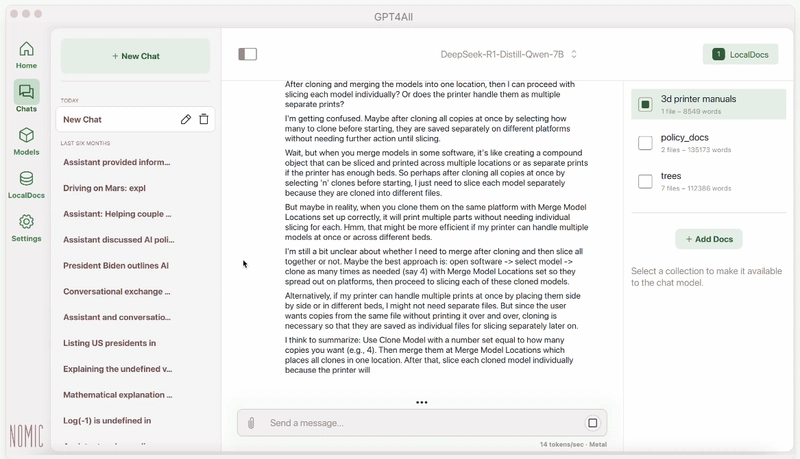

7. GPT4All

URL: https://gpt4all.io

GPT4All by Nomic AI offers a simple GUI to interact with multiple open-source LLMs on your laptop or desktop. It focuses on smaller, performant models that run well on consumer hardware.

Why it's great:

- Easy one-click installation

- Wide range of supported models (LLaMA, Falcon, etc.)

- Works on Windows, macOS, and Linux

Ideal for: Newbies or developers who want a plug-and-play local LLM

8. Jan

URL: https://github.com/janhq/jan

Jan is an open-source AI assistant designed to run locally, with a clean desktop UI for Windows, macOS, and Linux. It supports multiple LLM backends and also provides code assistance.

Why it's great:

- Sleek and responsive UI

- Focused on usability and offline privacy

- Works with Ollama and Hugging Face models

Ideal for: Mac users, designers, and privacy-conscious coders

9. Hermes / KoboldAI Horde

URL: https://github.com/KoboldAI/KoboldAI-Client

Originally built for AI storytelling, KoboldAI supports many open models and works great for dialogue generation, story creation, and roleplay. It can also be used like ChatGPT with the right settings.

Why it's great:

- Tailored for storytelling and dialogue

- Works offline with GGUF and GPT-J based models

- Supports collaborative model usage via the Horde network (unlike local use, this requires an internet connection)

Ideal for: Writers, fiction creators, hobbyists

10. Chatbot UI + Ollama Backend

URL: https://github.com/mckaywrigley/chatbot-ui

If you love the ChatGPT interface, this is for you. Chatbot UI is a sleek frontend that mimics ChatGPT but can be hooked up to your local Ollama, LocalAI, or LM Studio server.

Why it's great:

- Beautiful ChatGPT-style UI

- Local deployment with backend flexibility

- Self-hosted and configurable

Ideal for: Devs who want a private ChatGPT clone at home
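The “backend flexibility” works because Ollama, LocalAI, and LM Studio can all serve an OpenAI-style `/v1` API, so a frontend mostly needs to know which base URL to call. The sketch below is hypothetical (it is not Chatbot UI’s actual configuration code) and assumes each server runs on its usual default port: 11434 for Ollama, 8080 for LocalAI, and 1234 for LM Studio.

```python
from typing import Optional
from urllib.parse import urlsplit

# Assumed default addresses of each server's OpenAI-compatible API.
DEFAULT_BACKENDS = {
    "ollama": "http://localhost:11434/v1",
    "localai": "http://localhost:8080/v1",
    "lmstudio": "http://localhost:1234/v1",
}


def chat_endpoint(backend: str, host: Optional[str] = None) -> str:
    """Resolve the Chat Completions URL for a named backend.

    `host` optionally swaps in another machine (e.g. a home server)
    while keeping the backend's default port and path.
    """
    try:
        base = DEFAULT_BACKENDS[backend.lower()]
    except KeyError:
        raise ValueError(f"unknown backend: {backend!r}") from None
    if host:
        parts = urlsplit(base)
        base = f"{parts.scheme}://{host}:{parts.port}{parts.path}"
    return base + "/chat/completions"
```

For instance, `chat_endpoint("localai", host="192.168.1.20")` yields `http://192.168.1.20:8080/v1/chat/completions`. Keeping the request format fixed and varying only the URL is what lets one ChatGPT-style frontend sit in front of several local servers.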

Final Thoughts

AI doesn’t have to live in the cloud. With the rise of open source tools and local-first development, it’s easier than ever to bring ChatGPT-style experiences to your own device—without giving up control or privacy.

Whether you’re a developer experimenting with LLMs, a researcher wanting reproducibility, or someone who just prefers their AI without the surveillance, there’s a local tool out there for you. From lightweight desktop apps to fully customizable self-hosted setups, the options are growing fast—and getting more powerful every month.

Open source gives you freedom: to tweak, to learn, to contribute, and to build something that works exactly the way you want it to. And honestly, that’s what makes this space so exciting.

If you’ve been on the fence about ditching cloud AI, maybe now’s the time to try one of these local alternatives. You might be surprised how far open source has come.

Top comments (29)

That's a good one, Emmanuel! Nice to know local-run, privacy-first LLM chatbots!

You’re welcome. Glad you like the list.

Ollama is my favourite! I use it with Open WebUI

Oh yeah. Ollama is really a good one

Another cool list, thx Emmanuel!

You’re welcome.

Awesome list! I learned a lot of tools from your articles. Thank you.

Absolutely loving how many solid local LLM options have popped up lately - offline control is a game changer for privacy. Which of these tools has been the most stable for you in daily use?

I would go for Ollama any day.

If you're looking to build an internal, chat-based knowledge base with AI agents on top of your own data, I can also recommend Enthusiast.

It says it's for e-commerce, but it's suitable for any knowledge-heavy use case where you need private, RAG-powered automation.

Curious to hear if anyone else here is building with RAG + agents. Happy to exchange ideas.

If you want something solid but not too complicated, GPT-NeoX is a great pick. It's powerful, open-source, and gets you a lot of what ChatGPT offers, all running right on your machine. But if you’re after something a little lighter, GPT-J is a nice alternative. It’s also open-source and doesn’t require a super beefy setup to work well.

At the end of the day, it really comes down to what your system can handle and what you need. These models each have their sweet spots, so it’s all about finding the one that fits your project.

Msty deserves a shout (msty.app). Great UI, lots of features. Can run models locally or use a remote model provider
