ChatGPT has become a popular tool for generating human-like responses to a wide range of prompts, but every interaction with it involves considerable backend processing. AI may be conveniently at our fingertips, but it's worth taking a moment to understand the resource costs behind each query. Here's a breakdown of what goes into answering a single prompt:

⚡ Electricity Consumption

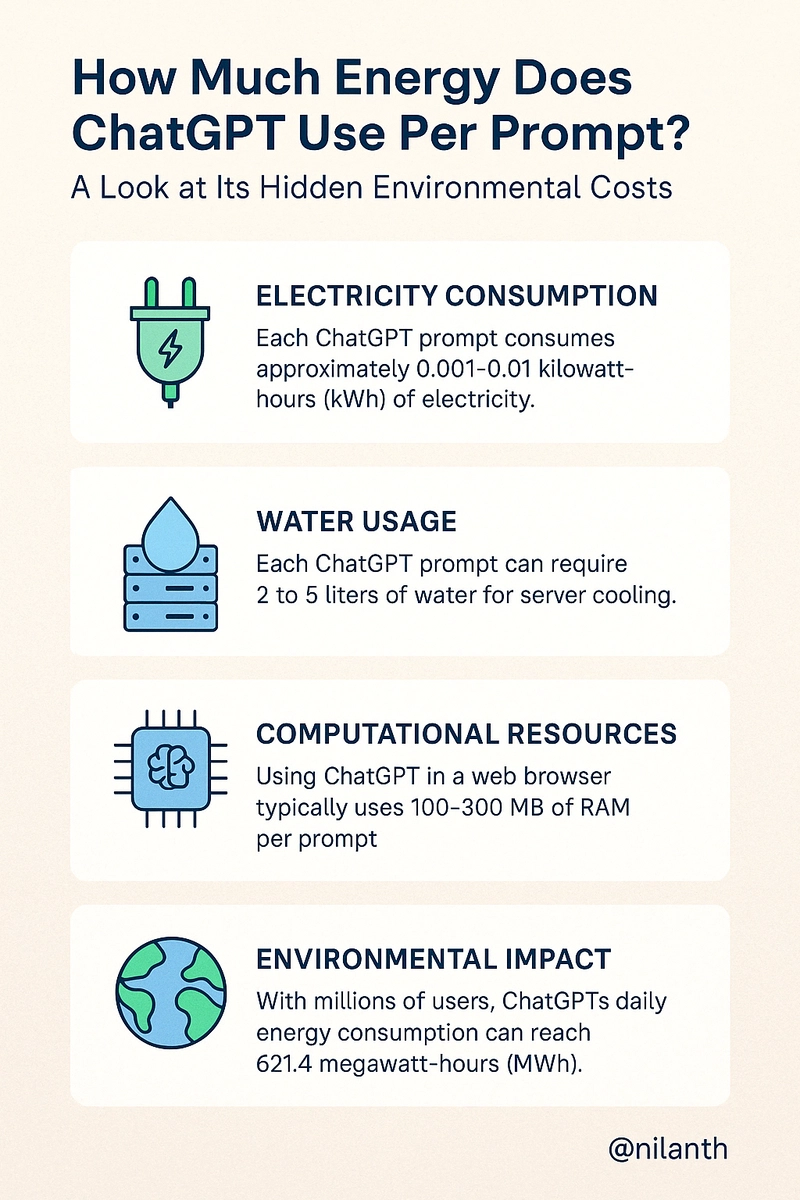

Each query to ChatGPT consumes approximately 0.001 to 0.01 kilowatt-hours (kWh) of electricity, or 1 to 10 watt-hours (Wh). The exact number varies depending on the model size, prompt complexity, and server efficiency.

To put this into perspective, this is roughly 10 to 15 times the energy consumed by a standard Google search.
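The two figures above can be cross-checked with a little arithmetic. The sketch below uses only the numbers quoted in this post (the per-query range and the "10 to 15 times" ratio) to work out what they would imply for a single search. The constant and function names are made up for illustration, and the result is only as reliable as the estimates it starts from.

```python
# Per-query energy range quoted in this post, in kilowatt-hours.
QUERY_KWH_LOW = 0.001
QUERY_KWH_HIGH = 0.01

# "10 to 15 times a Google search", as quoted in this post.
RATIO_LOW = 10
RATIO_HIGH = 15


def kwh_to_wh(kwh: float) -> float:
    """Convert kilowatt-hours to watt-hours."""
    return kwh * 1000.0


def implied_search_wh() -> tuple[float, float]:
    """Return the (smallest, largest) per-search energy in Wh implied by the figures above."""
    # Smallest: the cheapest query divided by the largest ratio.
    smallest = kwh_to_wh(QUERY_KWH_LOW) / RATIO_HIGH
    # Largest: the most expensive query divided by the smallest ratio.
    largest = kwh_to_wh(QUERY_KWH_HIGH) / RATIO_LOW
    return smallest, largest


if __name__ == "__main__":
    low, high = implied_search_wh()
    print(f"Per query: {kwh_to_wh(QUERY_KWH_LOW):.0f} to {kwh_to_wh(QUERY_KWH_HIGH):.0f} Wh")
    print(f"Implied per search: {low:.3f} to {high:.3f} Wh")
```

Taken together, the quoted figures imply somewhere between roughly 0.07 and 1 Wh per search. That spread is wide, which is a reminder that all of these numbers are rough estimates rather than measurements.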

💧 Water Usage

Data centers need cooling systems, and many of these systems rely on water. It's estimated that each ChatGPT prompt consumes around 2 to 5 liters of water due to the cooling requirements of the servers processing the queries.

🧠 Computational Resources on the User Side

On your personal device, interacting with ChatGPT through a browser typically uses 100–300 MB of RAM, depending on your session length, tab usage, and input complexity.

🌍 Environmental Impact at Scale

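Given the popularity of ChatGPT, these seemingly small resource costs add up quickly. With around 200 million queries daily, total energy consumption could reach approximately 621.4 megawatt-hours (MWh) per day. That figure works out to about 3.1 Wh (0.0031 kWh) per query, which sits inside the per-query range given above.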
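For anyone who wants to check the scale math, here is a minimal sketch that multiplies the per-prompt estimates from this post by the 200 million daily queries. All inputs are this post's own figures. The function names are hypothetical, and the output is a back-of-the-envelope estimate, not a measurement.

```python
# Figures quoted in this post; every one of them is an estimate.
QUERIES_PER_DAY = 200_000_000
QUERY_KWH_LOW, QUERY_KWH_HIGH = 0.001, 0.01   # electricity per query (kWh)
WATER_L_LOW, WATER_L_HIGH = 2.0, 5.0          # water per query (liters)
QUOTED_DAILY_MWH = 621.4                      # daily total quoted in this post


def daily_energy_mwh(kwh_per_query: float, queries: int = QUERIES_PER_DAY) -> float:
    """Daily energy in MWh for a given per-query cost in kWh (1 MWh = 1,000 kWh)."""
    return kwh_per_query * queries / 1000.0


def implied_wh_per_query(daily_mwh: float, queries: int = QUERIES_PER_DAY) -> float:
    """Per-query energy in Wh implied by a daily total in MWh (1 MWh = 1,000,000 Wh)."""
    return daily_mwh * 1_000_000.0 / queries


def daily_water_megaliters(liters_per_query: float, queries: int = QUERIES_PER_DAY) -> float:
    """Daily water use in megaliters (1 ML = 1,000,000 liters)."""
    return liters_per_query * queries / 1_000_000.0


if __name__ == "__main__":
    print(f"Energy: {daily_energy_mwh(QUERY_KWH_LOW):,.0f} to "
          f"{daily_energy_mwh(QUERY_KWH_HIGH):,.0f} MWh/day")
    print(f"Implied by {QUOTED_DAILY_MWH} MWh/day: "
          f"{implied_wh_per_query(QUOTED_DAILY_MWH):.2f} Wh per query")
    print(f"Water: {daily_water_megaliters(WATER_L_LOW):,.0f} to "
          f"{daily_water_megaliters(WATER_L_HIGH):,.0f} megaliters/day")
```

With these inputs the per-query range gives roughly 200 to 2,000 MWh per day, so the 621.4 MWh figure falls toward the lower end of that band. Changing any single assumption, especially the number of daily queries, moves the result proportionally.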

🔍 What Affects Resource Usage Per Prompt?

Several factors influence how much energy and resources are consumed:

- Prompt Complexity: More detailed prompts require more compute time.

- Model Size: Larger models like GPT-4 consume significantly more energy than lighter models.

- Server Efficiency: The data center's technology and cooling systems also impact resource use.

✅ Tips to Reduce Your Environmental Footprint

While AI usage inherently consumes resources, there are a few small steps users can take to help:

- Be Concise: Frame your queries clearly and efficiently.

- Limit Redundant Prompts: Avoid splitting queries unnecessarily.

- Use Off-Peak Hours: This reduces strain on the system and can improve server efficiency.

Final Thoughts

As AI becomes more integrated into our daily lives, it's essential to stay informed about its environmental costs. By understanding the energy, water, and computational power behind each ChatGPT prompt, we can all make more conscious decisions about how we use AI responsibly.

Top comments (9)

kinda wild seeing how much power and water this stuff actually takes - i always assumed it was just clicks and code but way more goes in under the hood tbh. you think people would change how they use it if they saw these numbers every time?

I made a quick bookmarklet based on the numbers in the post.

I can imagine how much consumption a platform like YouTube can have 👀

The problem there is that while video codecs are getting better at reducing image sizes, the resolution keeps getting higher because we want the best picture possible. It is like tilting at windmills.

It was not long ago that even TVs didn't mention the picture size. Once marketing took hold of the concept, the floodgates opened.

Thanks for sharing. It’s insightful. Here’s what ChatGPT itself has to say:

It references e.g. this paper from 2025 which states:

The resources to train GPTs are significant, that could be a separately interesting set of numbers to highlight perhaps.

And as an aside, from this I’ve also learned that data centers apparently tend to evaporate their water, i.e. it goes to the atmosphere. I had a misconception in my mind that data centers would usually recycle the water, but that’s not the case it seems.

I really appreciate how you explained the costs and energy consumption associated with each prompt.

I had always assumed that simply providing a prompt would automatically generate a response, but I now realize much more is happening behind the scenes.

We need to pay attention to the environmental costs as well.

Thank you for offering this valuable insight!

It always amazes me how our minds operate at just a fraction of the cost, far lower than GPT systems.

There's a TCO thing working here too, though. The water costs in the article are probably an average of prompt cost and training cost over the lifetime of the model, divided by number of prompts. That's a guess to explain why they're higher than other articles like this.

If you did some similar calculations for Bert sitting in a booth answering questions at a carnival, you'd need to include the water used by all his ancestors in the process of evolving his mind, and the schools in training it.

That number of 621.4 megawatt hours sounds like a lot, but I don’t really know. How does it compare to the daily power usage from a data center? An average household?
