CI/CD and DevOps in Snowflake (Part 1): A Comprehensive Overview of Features and Tools

- Date

Tomáš SobotíkSenior Data Engineer & Snowflake SME at Norlys

Tomáš SobotíkSenior Data Engineer & Snowflake SME at Norlys

Building a data warehouse—or more generally, various data products—is becoming increasingly similar to standard software development. This shift means that applying principles from the software development lifecycle is not only possible, but in many cases necessary for data projects, just as it has been essential in software engineering for years. This blog post focuses on DevOps in Snowflake. Snowflake has made tremendous progress in this area in recent years, introducing more and more features that enable teams to manage data projects following DevOps principles—or, more precisely, DataOps principles.

In this first part, we'll explore the DevOps-related capabilities Snowflake offers. The next part will dive into practical implementation, showing how to build CI/CD pipelines and deploy Snowflake infrastructure. But first, let’s start with a brief introduction to the key concepts: DevOps and DataOps.

What is DevOps?

DevOps is all about bringing development and operations together to build, test, and release software faster and more reliably. It centers around automation, collaboration, and minimizing the risk of failures when software reaches production. It’s a methodology that combines best practices from multiple areas with a clear goal: automate everything possible and cover all parts of the process. Code should be built, deployed, and tested as often as needed. DataOps applies that same mindset to data teams. Instead of just managing application code, DataOps is about treating data pipelines, transformations, and analytics like software—versioned, tested, and deployed automatically. In short, it’s DevOps for the data world. Snowflake already offers a number of features that can be combined to build automated data pipelines and manage infrastructure deployment. Let’s take a closer look at these features in detail.

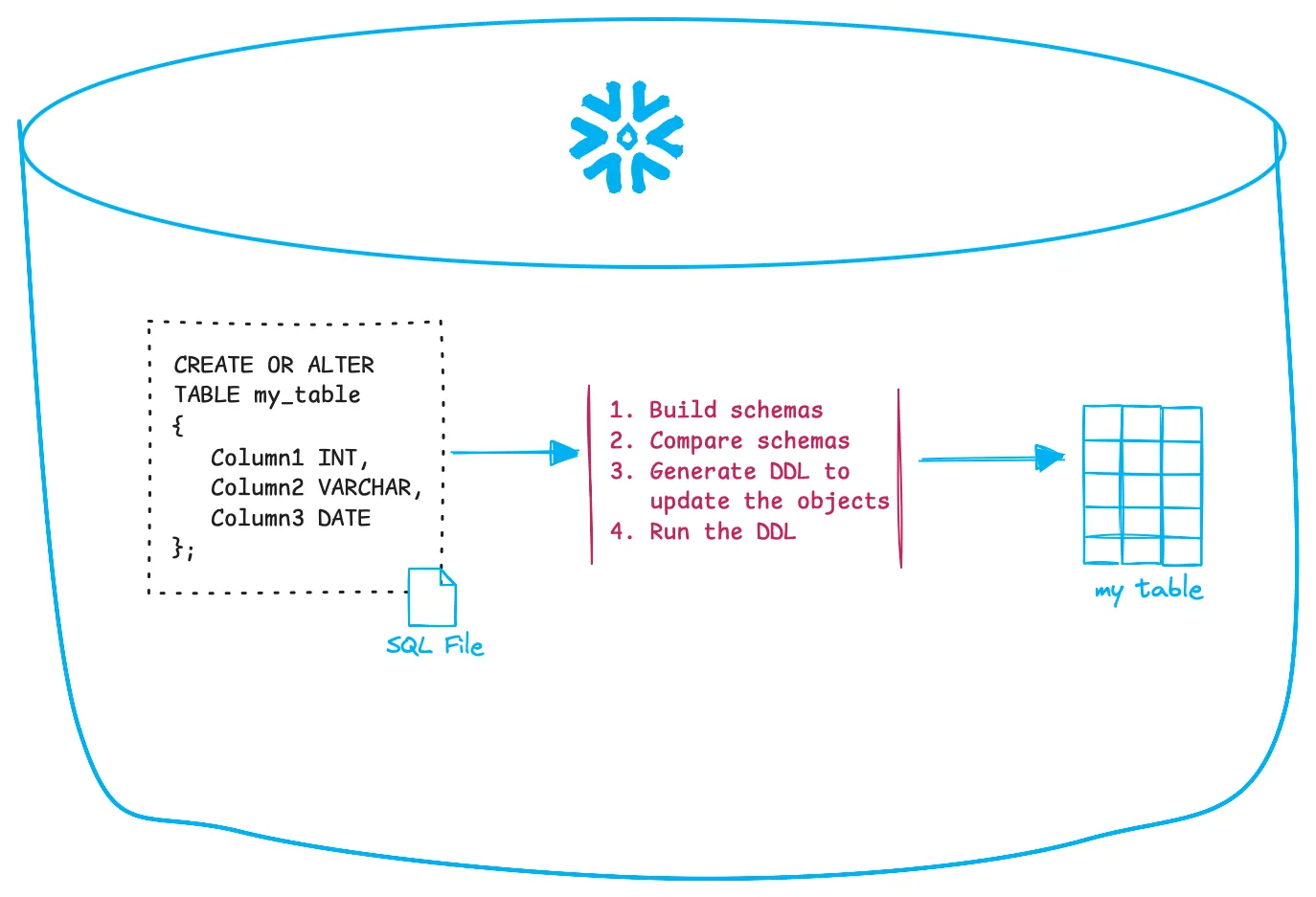

Declarative Change Management

One of the first things you need to address is defining your Snowflake or database infrastructure using code. Snowflake provides a feature called CREATE OR ALTER for this purpose. It allows you to define Snowflake objects declaratively. Declarative means you don’t need to manage versioning or apply changes incrementally—you simply define the desired final state. For example, you specify how a table schema, task, or view should look, and Snowflake takes care of the rest. It automatically compares the current state with your definition and applies only the necessary changes behind the scenes.

CREATE OR ALTER

With CREATE OR ALTER, you simply write a DDL script using this keyword, and Snowflake ensures that after execution, your object matches the defined state—without needing to recreate it. This is especially important for objects like tables, where dropping and recreating them to change the schema (e.g., adding or removing a column) could result in data loss. Instead, CREATE OR ALTER preserves the existing state and applies only the necessary changes.

At a high level, CREATE OR ALTER does the following:

- Compares the script with the current state in the database

- Generates the necessary DDL statements to update the object

- Executes those statements

Snowflake already supports a wide range of database and account-level objects that can be defined using CREATE OR ALTER, including:

- Warehouse

- Database

- Schema

- Table

- View

- Stage

- Role

- Database Role

- Application

- Function

- External Function

- Procedure

⠀...and more are being added regularly!

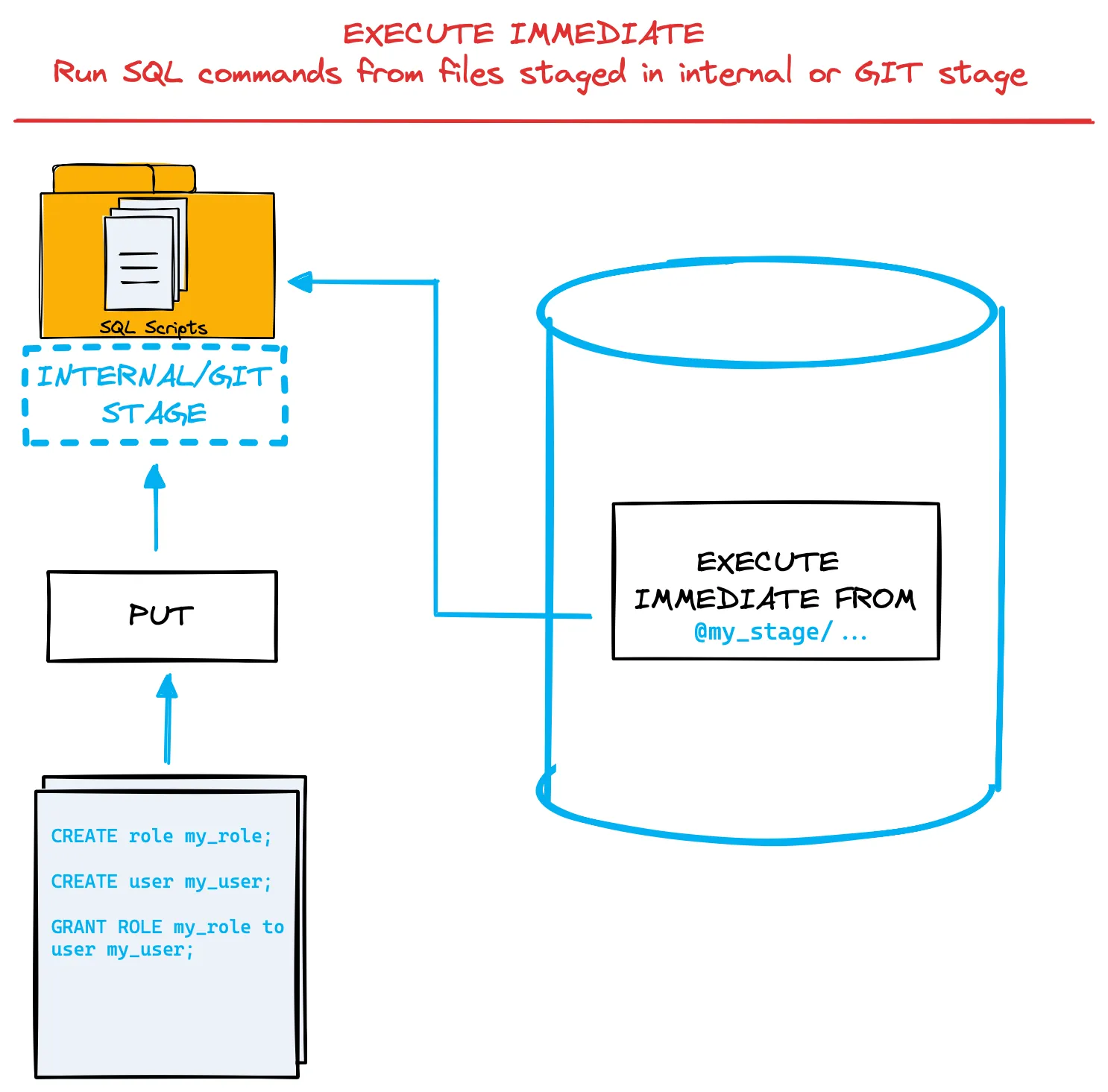

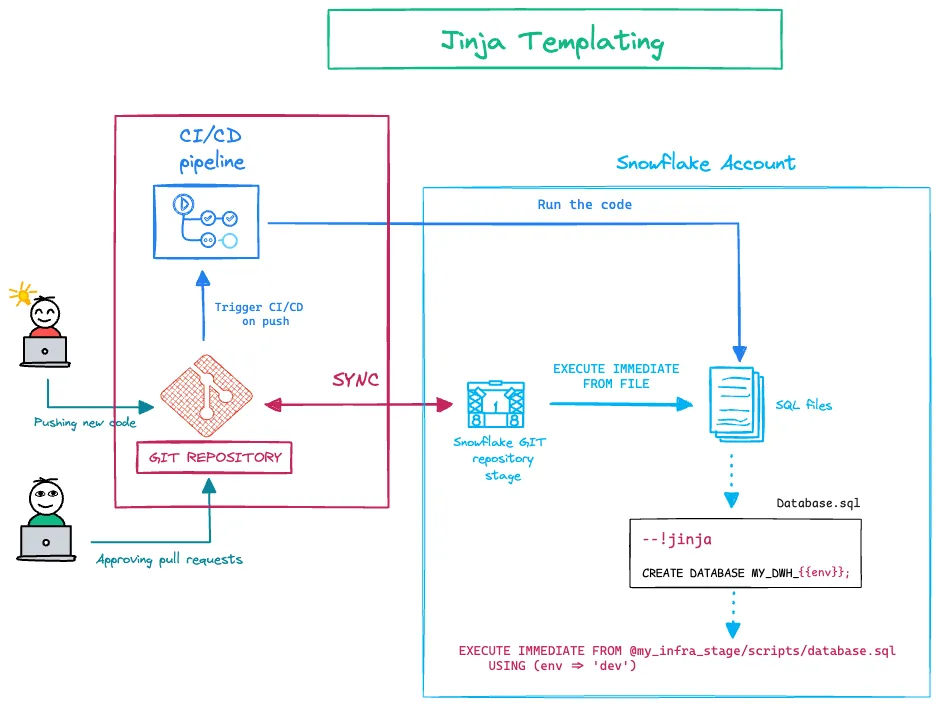

EXECUTE IMMEDIATE FROM

Another powerful DevOps feature in Snowflake is EXECUTE IMMEDIATE FROM. This allows you to run SQL commands directly from files stored in an internal stage or GitHub repository. These files can contain standard SQL statements or Snowflake Scripting blocks.

This functionality is exactly what we need for deploying objects into Snowflake. Instead of relying on complex import mechanisms, you can store your DDL scripts with object definitions and execute them directly from the stage—simple and efficient. A recent enhancement to this feature is support for Jinja templating. With Jinja templates, you can make your SQL scripts and DDL definitions far more dynamic by incorporating:

- Variables

- Loops

- Conditions

- Macros, and more

For example, environment variables let you parameterize deployments and dynamically choose the target environment. Loops allow you to iterate over users, warehouses, or any other defined objects, making it easier to create and maintain them. You can even pull in content from other files in stages inside your templates. This opens up even more possibilities.

EXECUTE IMMEDIATE adds a lot of value when you're setting up automated deployments. So, what do we need next? We definitely want to have our DDL scripts under version control. You never know what might happen—you need the ability to revert changes, track what was modified, and see who made each change. Version control provides transparency, accountability, and safety for your deployments.

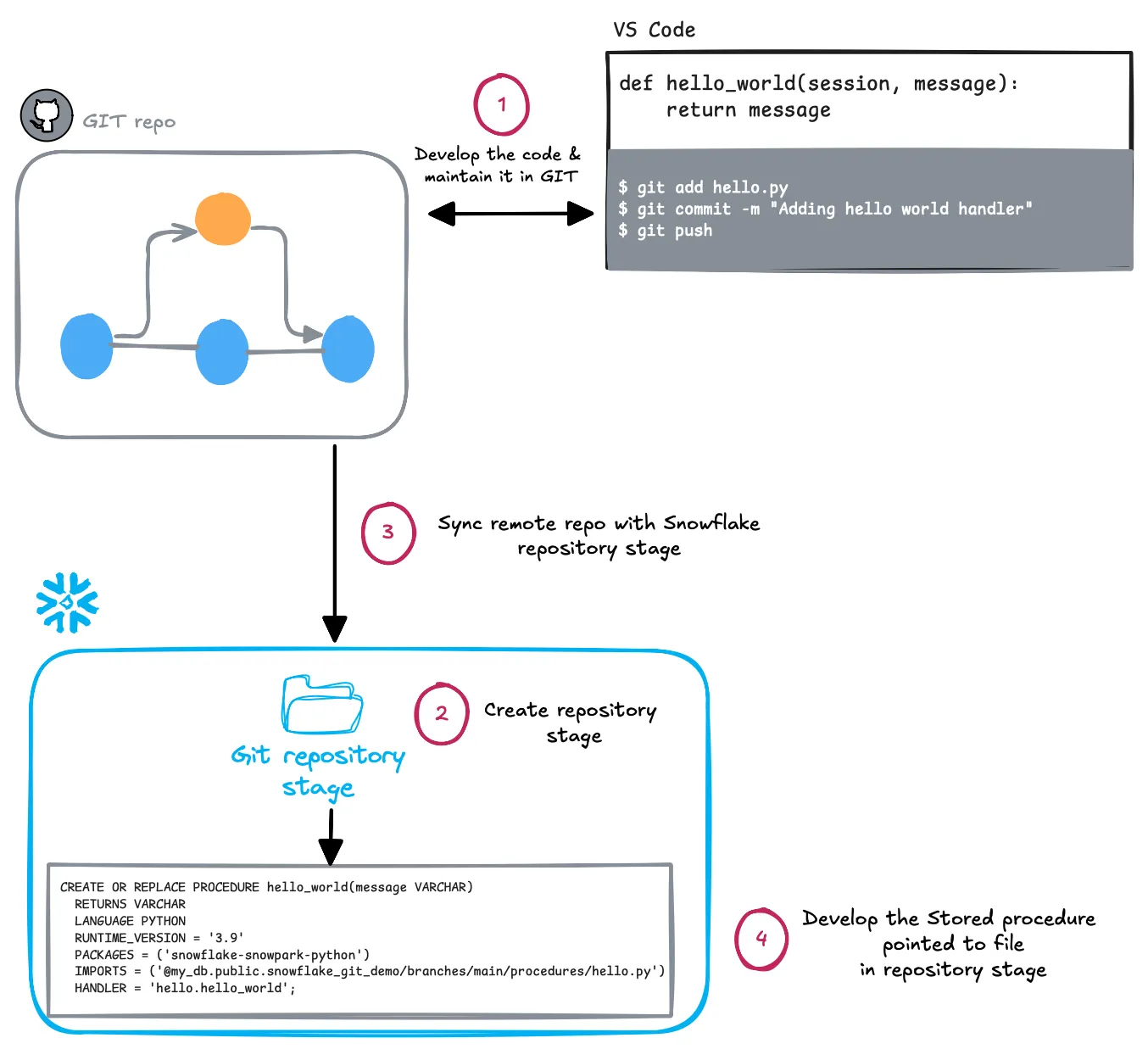

GIT integration

Snowflake also offers native Git integration, allowing you to store your code in a remote repository and synchronize it with an internal stage. This makes all your files available for execution directly within Snowflake. While Git integration is currently read-only (with a few exceptions), it fills another gap in building a complete and automated deployment pipeline.

Let’s try to use GIT integration with EXECUTE IMMEDIATE to run user creation script from the repository:

1: Create users.sql file

CREATE USER joe;

GRANT ROLE developer TO USER joe;

2: Commit changes to repository:

git add users.sql

git commit -m "Adding new user"

git push

3: Fetch the updates from remote repository into Snowflake repository stage

ALTER GIT REPOSITORY snowflake_git_demo FETCH;

4: Execute the code from the file in Snowflake

EXECUTE IMMEDIATE FROM @snowflake_git_demo/branches/main/sql/users.sql;

We’ve covered this in detail in a separate blog post, which provides a comprehensive overview of Snowflake’s Git integration capabilities, along with a step-by-step guide. You’ll learn:

- What Snowflake’s Git Integration is

- Why it’s such an exciting feature

- How to use it for easy deployment of a stored procedure handler

- What operations are supported within Snowflake

- Current limitations

- Various use cases for Git integration

Now that we know how to define our infrastructure as code, run it, and version it, what’s still missing? The final piece is orchestration — and it has two key perspectives:

1. The Snowflake perspective – how to connect, select the right files, and execute the queries 2. The process perspective – how to wrap everything into a standalone pipeline that can be triggered by different events

Let’s focus on the Snowflake perspective for now. We’ll cover the process side in the next part, where we’ll build a complete pipeline from scratch.

SnowSQL

That is original Snowflake CLI client that allows you to do many of the tasks available in the UI - running queries, managing the objects, importing/exporting data, etc. It supports all major operating systems and offers various authentication methods. SnowSQL has been a go-to tool for many years, especially among admins, but it's time to move on — Snowflake CLI is the new standard and should be the preferred choice going forward as there might be functionalities which won’t be added into SnowSQL.

Snowflake CLI

This is an open-source project, initially developed by the community but now fully maintained by Snowflake. The CLI primarily serves as a developer interface for managing different types of code in Snowflake — including stored procedures, functions, native and Streamlit apps, Snowpark, Snowpark Container Services, Git repositories, and more. It supports a wide range of use cases aimed at simplifying code and infrastructure management within Snowflake. From a DevOps perspective, both CLI tools allow you to run queries in Snowflake, so the choice often comes down to personal preference. In our case, we'll use the CLI client to connect to Snowflake and perform the following actions:

- Sync the Git stage with the remote repository

- Deploy the code by running EXECUTE IMMEDIATE commands to create infrastructure objects such as roles, warehouses, databases, and more

Infrastructure as Code: Alternatives to pure Snowflake SQL

We’ve covered the essential building blocks that Snowflake provides in the DevOps space. By combining these features, you can build robust, automated pipelines to manage your Snowflake infrastructure. However, Snowflake's native capabilities aren't the only option — there are many other powerful alternatives. Let’s take a look at the most popular ones to provide a more complete picture.

Terraform

Terraform is arguably the most widely adopted tool for this purpose. It allows you to define your Snowflake objects as code and manage them in the same way as any other cloud infrastructure. If you're already familiar with Infrastructure as Code (IaC), this will feel like a natural extension. For many teams, Terraform is the first choice — especially if you're already using it to manage your cloud infrastructure. Extending its use to Snowflake just makes sense. The official Snowflake provider has matured significantly over the years, and it recently reached General Availability. Support for new objects is continuously being added. If you'd like to dive deeper into using Terraform with Snowflake, check out our dedicated blog post on the topic.

Permifrost

Permifrost is an open-source tool specifically designed to manage permissions in Snowflake using code. This Python-based tool allows you to define roles, grants, and ownership in YAML files. Managing privileges through code offers a more scalable and controlled approach than manually configuring them in the UI or writing raw SQL grants. Permifrost uses a declarative model for managing permissions in Snowflake. However, it is limited in scope, as it only handles permissions and roles. It does not manage object creation or deletion, making it less comprehensive compared to other alternatives.

Titan

Titan is another open-source tool for deploying Snowflake infrastructure as code. Written in Python, it aims to address some of the drawbacks of Terraform. For instance, it allows dynamic switching between roles (e.g., SECURITYADMIN vs SYSADMIN), uses Python-based definitions (so there's no need to learn a new language or syntax), and supports SQL. Additionally, it doesn't rely on state files like Terraform.

However, Titan uses names as unique identifiers, so renaming a resource will result in the creation of a new one. Since Titan is still under active development, support for some resources may be limited.

Plus a quick note — the project is primarily maintained by one person who’s currently focused on other business. So keep that in mind when validating the options.

Schema change

Last one might be the oldest one. It's a Python-based, imperative tool, meaning you deploy changes as a series of modifications (ALTER statements) to the original objects. You must maintain versioned scripts and track the history of how changes have been applied. Schema Change then applies only the new changes compared to the history. For example, if you create a table with Schema Change and later need to add/remove a column or make other modifications, you’ll need to write an ALTER script with a new version number.

Schema Change works with two types of scripts: versioned and repeatable. Repeatable scripts are deployed every time the tool runs (if the change is detected). This approach is useful for views, where recreating the view is not considered a destructive change. Another key concept in Schema Change is the history of applied scripts, which is stored in a database table.

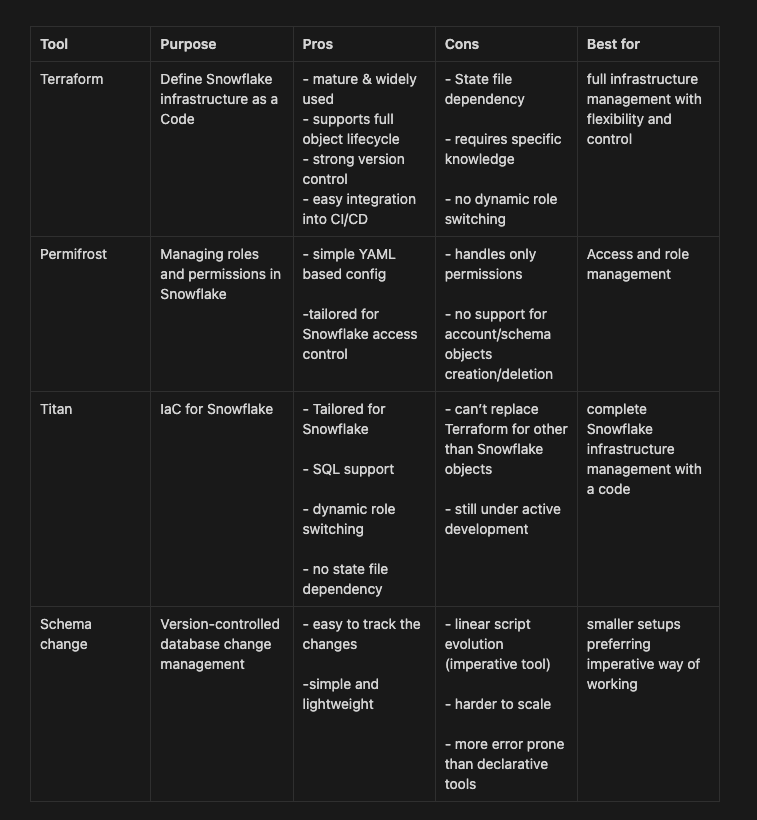

Let’s try to summarize pros and cons of these alternative tools in table.

As the table shows, the right tool selection may depend on various factors, such as the aspects you want to automate (infrastructure, permissions, or just SQL scripts), your current knowledge, or platform requirements. Each tool can be a good fit for different scenarios.

Wrap up

To wrap up, we’ve covered Snowflake's native features for DevOps, along with other alternatives available in the market, providing a comprehensive overview of DevOps activities in Snowflake. In the next post, we’ll dive into a step-by-step implementation guide!

Tomas is a longstanding Snowflake Data SuperHero and general Snowflake subject matter expert. His extensive experience in the data world spans over a decade, during which he has served as a Snowflake data engineer, architect, and admin on various projects across diverse industries and technologies. Tomas is a core community member, actively sharing his expertise and inspiring others. He's also an O'Reilly instructor, leading live online training sessions.

Get up and running with SELECT in 15 minutes.

Snowflake optimization & cost management platform

Gain visibility into Snowflake usage, optimize performance and automate savings with the click of a button.