The Need for Speed in Data Science

Python's versatility has made it the language of choice for data science. However, as datasets grow in size and complexity, the performance limitations of Python's built-in data structures (lists, dictionaries, and tuples) become apparent. While excellent for general-purpose programming, they are not optimized for large-scale numerical operations. A Python list, for instance, can hold elements of different types because it stores references to separately allocated objects rather than the raw values themselves. Every operation on an element therefore involves following a reference and checking the object's type at runtime, and this per-element overhead significantly slows down computations on large amounts of data.
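
To make the overhead concrete, here is a minimal sketch comparing the approximate memory footprint of a list of integers with a NumPy array holding the same values. The variable names are illustrative, and the list figure is only an estimate (it adds the size of each integer object to the size of the list's reference array); exact numbers vary by Python version.

```python
import sys

import numpy as np

# A list can mix types because it only stores references to objects.
mixed = [1, "two", 3.0]
element_types = [type(x).__name__ for x in mixed]
print(element_types)

n = 1000
py_list = list(range(n))
np_array = np.arange(n, dtype=np.int64)

# Rough list footprint: the list's reference array plus one int object per element.
list_bytes = sys.getsizeof(py_list) + sum(sys.getsizeof(x) for x in py_list)
# The array stores raw 8-byte integers in a single buffer.
array_bytes = np_array.nbytes

print(f"list:  ~{list_bytes} bytes")
print(f"array:  {array_bytes} bytes")
```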

To overcome these bottlenecks, the data science community embraced "vectorized operations." This paradigm shifts from explicit looping over individual elements to applying operations on entire arrays or columns of data at once. This approach leverages highly optimized, often compiled, underlying implementations, leading to dramatic performance improvements.

NumPy Arrays: The Foundation of Numerical Computing

At the heart of high-performance numerical computing in Python lies NumPy, and its fundamental data structure, the ndarray (N-dimensional array). Unlike Python lists, NumPy arrays store homogeneous data (all elements are of the same type) contiguously in memory. This contiguous storage is crucial because it allows CPUs to perform operations on chunks of data efficiently, leveraging modern processor architectures and SIMD (Single Instruction, Multiple Data) instructions.
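
The properties described above can be inspected directly on an array. The following is a small sketch, using an arbitrary 2x3 example array, that shows the single element type, the fixed element size, and the contiguous row-major layout.

```python
import numpy as np

# A small 2x3 array of 64-bit integers (example values only).
arr = np.arange(6, dtype=np.int64).reshape(2, 3)

print(arr.dtype)                  # one dtype shared by every element
print(arr.itemsize)               # bytes per element
print(arr.flags["C_CONTIGUOUS"])  # True when rows are laid out back to back
print(arr.strides)                # bytes to step to the next row / next column

element_size = arr.itemsize
row_stride, col_stride = arr.strides
```

Because every element has the same size, moving to the next column is a fixed 8-byte step and moving to the next row is a fixed 24-byte step here, which is what lets compiled loops walk the buffer without per-element type checks.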

Consider a simple arithmetic operation on a large dataset:

```python
import time

import numpy as np

# Generate a large dataset
data_size = 10**7
python_list = list(range(data_size))
numpy_array = np.arange(data_size)

# Python list benchmark: one interpreted multiplication per element
start_time = time.perf_counter()  # perf_counter is better suited to timing than time.time
result_list = [x * 2 for x in python_list]
end_time = time.perf_counter()
print(f"Python list multiplication time: {end_time - start_time:.4f} seconds")

# NumPy array benchmark: a single vectorized multiplication over the whole array
start_time = time.perf_counter()
result_numpy = numpy_array * 2
end_time = time.perf_counter()
print(f"NumPy array multiplication time: {end_time - start_time:.4f} seconds")
```

You'll observe that the NumPy operation completes significantly faster. This efficiency is why NumPy arrays are the bedrock for almost all numerical and scientific computing libraries in Python. For more details on NumPy, refer to the NumPy Documentation.

Pandas DataFrames and Series: Structured Data Powerhouse

Building directly on NumPy arrays, Pandas provides powerful, high-level data structures for structured data: the DataFrame and Series. A Pandas Series can be thought of as a single column of data, essentially an enhanced NumPy array with an associated label (index). A DataFrame, then, is a collection of Series objects, sharing a common index, forming a tabular data structure with labeled rows and columns.
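
As a minimal sketch of that relationship (the column names and values are made up for illustration), the following builds two Series with the same index, combines them into a DataFrame, and pulls a column back out as a Series.

```python
import pandas as pd

labels = ["a", "b", "c"]

# A Series is a one-dimensional array of values plus an index of labels.
price = pd.Series([10.5, 20.0, 7.25], index=labels, name="price")
qty = pd.Series([1, 2, 3], index=labels, name="qty")

# A DataFrame aligns several Series on a shared index.
df = pd.DataFrame({"price": price, "qty": qty})

# Selecting one column returns a Series again.
qty_column = df["qty"]
print(type(qty_column).__name__)

# Label-based access on the Series, and the underlying values as a NumPy array.
price_b = price["b"]
price_values = price.to_numpy()
print(df)
```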

Pandas DataFrames are not a columnar format in the same strict sense as some other systems (like Apache Arrow, which we'll discuss next), but they are column-oriented in practice. With the default backend, each column is typically backed by a NumPy array, which lets Pandas apply NumPy's vectorized operations to common tasks like filtering, aggregation, and transformation without explicit Python loops. This design is what makes Pandas efficient for data cleaning, transformation, and analysis.

Common operations in Pandas, such as df.groupby(), df.merge(), or df.loc[], are highly optimized under the hood, making complex data workflows surprisingly fast. The design allows for intuitive and readable code while maintaining strong performance for most data science tasks. Dive deeper into its capabilities with the Pandas Documentation.
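
The sketch below strings those three operations together on two tiny, made-up tables (`orders` and `customers` are hypothetical names), so the shape of a typical workflow is visible: filter with `loc`, aggregate with `groupby`, and join with `merge`.

```python
import pandas as pd

orders = pd.DataFrame({
    "customer_id": [1, 2, 1, 3],
    "amount": [20.0, 35.0, 15.0, 50.0],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "region": ["north", "south", "north"],
})

# Filter rows with a vectorized boolean mask.
large_orders = orders.loc[orders["amount"] > 18.0]

# Aggregate: total amount per customer.
totals = orders.groupby("customer_id")["amount"].sum()

# Join the two tables on their shared key, then aggregate per region.
joined = orders.merge(customers, on="customer_id", how="left")
region_totals = joined.groupby("region")["amount"].sum()

print(large_orders)
print(totals)
print(region_totals)
```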

Apache Arrow: The Game Changer for Interoperability and Performance

As data science workflows became more complex, involving multiple languages and systems (e.g., Python for analysis, Spark for big data processing, R for statistics), the need for an efficient and standardized in-memory data format emerged. This led to Apache Arrow.

Apache Arrow is not a data structure library in the traditional sense, but rather a language-agnostic, columnar memory format. It defines a standard way to represent tabular data in memory, enabling zero-copy data exchange between different systems and programming languages (Python, R, Java, C++, etc.). This eliminates the costly serialization/deserialization overhead that typically occurs when data moves between different environments.

Libraries like Pandas (with the optional PyArrow-backed data types introduced in pandas 2.0, often called the "Arrow backend") and Polars leverage Arrow to improve performance and reduce memory footprint, particularly for string-heavy or mixed-type data. Converting a Pandas DataFrame to an Arrow Table is also straightforward because both organize data by column, although the conversion may still copy data for some column types, such as strings stored as Python objects:

```python
import pandas as pd
import pyarrow as pa
import numpy as np

# Create a Pandas DataFrame
pdf = pd.DataFrame({
    'col1': np.random.randint(0, 100, 10),
    'col2': np.random.rand(10),
    'col3': ['text_' + str(i) for i in range(10)]
})
print("Pandas DataFrame:")
print(pdf)

# Convert Pandas DataFrame to an Arrow Table
arrow_table = pa.Table.from_pandas(pdf)
print("\nApache Arrow Table:")
print(arrow_table)

# Convert Arrow Table back to Pandas DataFrame
pdf_from_arrow = arrow_table.to_pandas()
print("\nPandas DataFrame from Arrow Table:")
print(pdf_from_arrow)
```

This seamless conversion highlights Arrow's role in facilitating high-performance data pipelines across disparate tools. Learn more about its capabilities at the Apache Arrow Documentation.

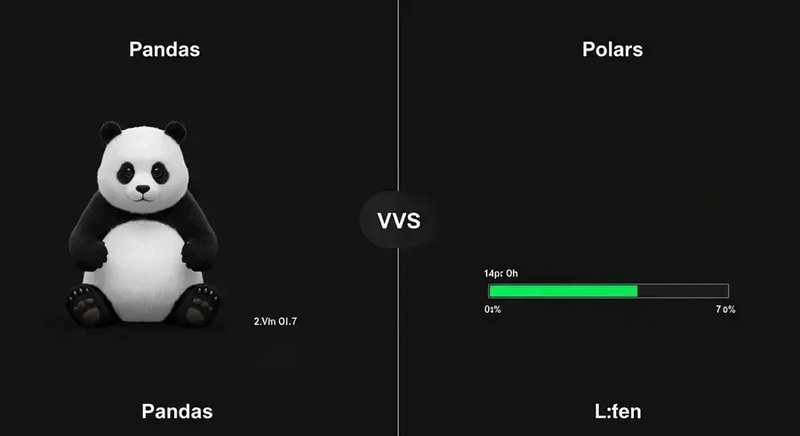

Polars: The Blazing-Fast DataFrame Library (Rust-powered)

Polars is a relatively new, yet incredibly powerful, DataFrame library that has gained significant traction for its blazing speed and memory efficiency. Written in Rust, it leverages the performance benefits of a compiled language while providing a Pythonic API. Polars is built natively on Apache Arrow, which is a key factor in its high performance.

Key features of Polars include:

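- Lazy Evaluation: In addition to an eager API, Polars offers a lazy API in which operations are not executed immediately but are collected into a query plan. Polars can then optimize that plan (for example, by applying filters as early as possible) and skip redundant computations before running it.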

- Expression-based API: Polars encourages an expressive, functional style of data manipulation, which can lead to more readable and performant code.

- Native Apache Arrow Integration: By using Arrow as its in-memory format, Polars benefits from efficient data storage and zero-copy operations.

Let's look at a simple comparative benchmark between Pandas and Polars: a group-by aggregation over one million rows, using each library's eager API:

```python
import time

import numpy as np
import pandas as pd
import polars as pl

# Generate a large dataset
data_size = 10**6
data = {
    'category': np.random.choice(['A', 'B', 'C', 'D'], size=data_size),
    'value': np.random.rand(data_size) * 100,
    'group_id': np.random.randint(0, 100, size=data_size)
}

# Pandas benchmark (DataFrame construction is excluded from the timing)
pdf = pd.DataFrame(data)
start_time = time.perf_counter()
pandas_result = pdf.groupby('category')['value'].mean()
end_time = time.perf_counter()
print(f"Pandas execution time: {end_time - start_time:.4f} seconds")

# Polars benchmark (eager API; DataFrame construction is excluded from the timing)
pldf = pl.DataFrame(data)
start_time = time.perf_counter()
polars_result = pldf.group_by('category').agg(pl.col('value').mean())
end_time = time.perf_counter()
print(f"Polars execution time: {end_time - start_time:.4f} seconds")

# Print results (optional, for verification)
# print("\nPandas Result:\n", pandas_result)
# print("\nPolars Result:\n", polars_result)
```

The performance difference, especially on larger datasets, can be substantial, making Polars an attractive option for data scientists dealing with performance-critical applications. Explore its capabilities further in the Polars Documentation.

Narwhals: Unifying DataFrame APIs (Future Outlook)

The proliferation of DataFrame libraries like Pandas and Polars, while good for performance, can fragment the Python data ecosystem, since each library exposes a different API. This is where Narwhals comes in. Narwhals is an emerging project that aims to provide a unified API across different DataFrame libraries. Its goal is to allow developers to write code that is agnostic to the underlying DataFrame implementation, making it easier to switch between backends (e.g., Pandas, Polars, Modin, cuDF) based on specific performance needs or deployment environments without rewriting significant portions of the codebase.

By offering a common interface, Narwhals simplifies the development of libraries and applications that need to be compatible with various DataFrame frameworks, fostering greater interoperability and reducing the learning curve for users transitioning between them. You can follow its progress on the Narwhals GitHub repository.

Conclusion: Choosing the Right Tool for the Job

The evolution of data structures in Python, from fundamental built-in types to highly optimized libraries like NumPy, Pandas, Apache Arrow, and Polars, reflects the increasing demand for efficient data processing in modern data science. Each of these tools offers distinct advantages and caters to specific use cases:

- Standard Python Data Structures (lists, dictionaries): Ideal for general-purpose programming, small datasets, and when data heterogeneity is a requirement. They offer flexibility but lack the performance for large-scale numerical computations.

- NumPy Arrays: The fundamental building block for numerical computing. Essential for any task involving large, homogeneous numerical arrays where vectorized operations are key to performance.

- Pandas DataFrames and Series: Your go-to for structured data manipulation, cleaning, and analysis. They provide a rich, intuitive API built on top of NumPy's efficiency, suitable for most medium to large datasets.

- Apache Arrow: Crucial for interoperability and efficient data exchange between different systems and languages, especially in big data ecosystems. It underpins many modern high-performance libraries.

- Polars: An excellent choice when raw speed and memory efficiency are paramount, particularly for very large datasets or complex transformations. Its Rust backend and lazy evaluation offer significant performance gains over traditional Pandas for certain workloads.

Understanding these specialized data structures and their underlying mechanisms is vital for any Python developer looking to optimize their data processing workflows and stay at the forefront of the data science ecosystem. The right tool, applied judiciously, can unlock significant performance improvements and enable the tackling of increasingly complex data challenges. For a deeper dive into the foundational concepts, explore more about data structures explained in Python.
