Artificial Intelligence (AI) and Machine Learning (ML) are now integrated into nearly every facet of modern life, bringing substantial innovation and efficiency. This widespread adoption also exposes critical vulnerabilities, particularly in the security and privacy of the underlying data and models. AI/ML workloads handle vast amounts of sensitive information, ranging from proprietary business data and intellectual property embedded in models to highly personal user data used for training and inference.

Attack vectors on AI systems are diverse and sophisticated. During training, attackers may try to exfiltrate the sensitive datasets used to build a model, which compromises privacy and competitive position, or tamper with those datasets to inject biased or harmful data (data poisoning). Model theft is another critical vulnerability: proprietary AI models, representing years of research and significant investment, can be stolen and reverse-engineered, leading to intellectual property loss. Once deployed, models are also susceptible to adversarial attacks during inference, where subtly manipulated input causes a model to misclassify or behave unexpectedly, potentially leading to incorrect decisions in critical applications like autonomous vehicles or medical diagnostics. The traditional cloud computing model exacerbates these risks because data is processed in memory that the infrastructure provider and hypervisor can access, and that other tenants may reach if isolation fails, which adds further points of potential compromise.

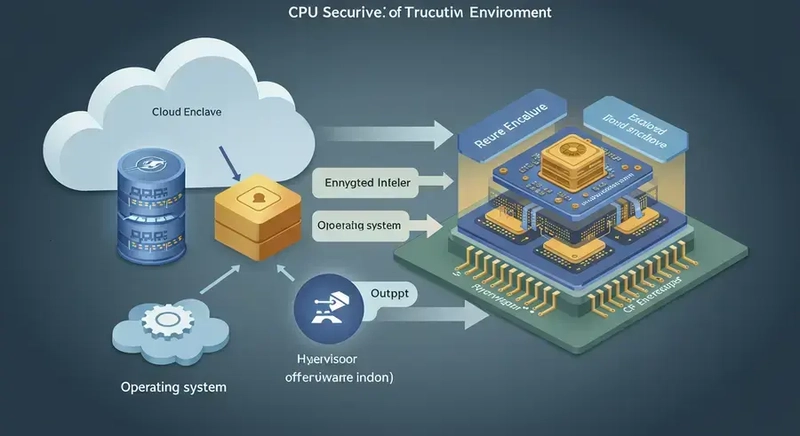

Confidential Computing emerges as a powerful solution to these pressing security challenges. At its core, confidential computing utilizes hardware-based Trusted Execution Environments (TEEs), often referred to as secure enclaves, to create isolated environments. Within a TEE, AI models and data can be processed with strong assurances that they are protected from unauthorized access and tampering, even from the cloud provider, hypervisor, or other virtual machines on the same physical hardware. This isolation is achieved through hardware-level encryption of data in use, memory isolation, and cryptographic attestation, which allows users to verify that their workload is running in a legitimate TEE without alteration. This means that sensitive data remains encrypted in memory until it is processed inside the TEE, and even then, it is only exposed to authorized code within that secure boundary. This fundamental shift in security posture allows organizations to confidently migrate their most sensitive AI workloads to untrusted cloud environments, significantly reducing the attack surface and enhancing data privacy.
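The attestation step described above can be sketched in a few lines. This is a minimal simulation, not a real attestation protocol: `HARDWARE_KEY`, `measure`, `generate_report`, and `verify_report` are hypothetical names, and the shared-secret HMAC stands in for the vendor-certified asymmetric signatures that real TEEs use.

```python
import hashlib
import hmac
import secrets

# Hypothetical stand-in for a hardware-rooted signing key. Real TEEs sign
# attestation reports with vendor-certified asymmetric keys, not a shared secret.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(workload: bytes) -> str:
    """Return a SHA-256 'measurement' of the code loaded into the enclave."""
    return hashlib.sha256(workload).hexdigest()


def generate_report(workload: bytes, nonce: bytes) -> dict:
    """Simulate the TEE producing a signed report over measurement and nonce."""
    measurement = measure(workload)
    signature = hmac.new(
        HARDWARE_KEY, measurement.encode() + nonce, hashlib.sha256
    ).hexdigest()
    return {"measurement": measurement, "nonce": nonce, "signature": signature}


def verify_report(report: dict, expected_measurement: str, nonce: bytes) -> bool:
    """Accept the report only if it is authentic, fresh, and matches the expected code."""
    expected_sig = hmac.new(
        HARDWARE_KEY, report["measurement"].encode() + nonce, hashlib.sha256
    ).hexdigest()
    return (
        hmac.compare_digest(report["signature"], expected_sig)
        and report["nonce"] == nonce
        and report["measurement"] == expected_measurement
    )


trusted_code = b"def infer(x): return model(x)"
nonce = secrets.token_bytes(16)  # fresh challenge chosen by the verifier

report = generate_report(trusted_code, nonce)
print(verify_report(report, measure(trusted_code), nonce))  # genuine workload

tampered = generate_report(b"def infer(x): leak(x)", nonce)
print(verify_report(tampered, measure(trusted_code), nonce))  # altered workload
```

The verifier checks three things: the signature (the report came from the hardware), the nonce (the report is fresh rather than replayed), and the measurement (the code inside the TEE is the code the user expects).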

Key Use Cases

Confidential computing unlocks a new era of secure AI applications, addressing long-standing privacy and security concerns across various industries.

Secure Federated Learning: Federated learning allows multiple parties to collaboratively train AI models on their private datasets without sharing the raw data. This is particularly vital in sectors like healthcare or finance, where data privacy regulations are stringent. Confidential computing enhances federated learning by providing a secure enclave for the aggregation of model updates or the training of a global model. Each participant's model updates can be processed within a TEE, ensuring that individual data remains private while contributing to a more robust, collectively trained model. This mitigates the risk of data leakage or inference attacks on individual datasets during the collaborative training process. For instance, hospitals can contribute to a shared diagnostic AI model without ever exposing sensitive patient records.
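As a rough illustration of aggregation inside an enclave, the sketch below averages per-party model updates and releases only the mean. The `AggregationEnclave` class, its method names, and the minimum-participant rule are assumptions made for this example; a real deployment would receive encrypted updates and run inside attested hardware.

```python
from typing import Dict, List


class AggregationEnclave:
    """Simulated TEE that collects per-party updates and releases only their mean."""

    def __init__(self, min_participants: int = 2):
        self._updates: Dict[str, List[float]] = {}  # never returned to callers
        self._min_participants = min_participants

    def submit_update(self, party_id: str, update: List[float]) -> None:
        # In a real deployment the update would arrive encrypted and be
        # decrypted only inside the enclave; here it is stored as-is.
        self._updates[party_id] = list(update)

    def aggregate(self) -> List[float]:
        # Refuse to aggregate when the mean would reveal a single party's update.
        if len(self._updates) < self._min_participants:
            raise ValueError("not enough participants to hide individual updates")
        count = len(self._updates)
        columns = zip(*self._updates.values())
        return [sum(column) / count for column in columns]


aggregator = AggregationEnclave(min_participants=2)
aggregator.submit_update("hospital_a", [1.0, 2.0, 3.0])
aggregator.submit_update("hospital_b", [3.0, 4.0, 5.0])

global_update = aggregator.aggregate()
print(global_update)  # element-wise mean of the two updates
```

Only `global_update` crosses the enclave boundary. The individual updates stay in the private `_updates` dictionary, which mirrors the property that no participant or host sees another party's contribution.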

Protecting Proprietary Models: Trained AI models are often the intellectual property of organizations, representing significant investment and competitive advantage. Deploying these models for inference in cloud environments carries the risk of model theft or reverse engineering. Confidential computing safeguards this intellectual property by ensuring that the model weights and logic remain encrypted and are only decrypted and processed within the secure confines of a TEE. This protection extends to the entire lifecycle of the model, from deployment to execution, making it significantly harder for unauthorized parties, including the cloud provider, to access or copy the model.
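One common way to keep weights encrypted outside the TEE is to gate the decryption key on the enclave's identity. The sketch below assumes a hypothetical `ModelKeyBroker` that the model owner controls; it skips signature verification and assumes the measurement it receives has already been authenticated through attestation.

```python
import hashlib
import secrets
from typing import Optional, Set


class ModelKeyBroker:
    """Hypothetical key service, run by the model owner, that guards the model key."""

    def __init__(self, approved_measurements: Set[str]):
        self._model_key = secrets.token_bytes(32)
        self._approved = set(approved_measurements)

    def release_key(self, enclave_measurement: str) -> Optional[bytes]:
        # A real broker would first verify a signed attestation report; this
        # sketch assumes the measurement has already been authenticated.
        if enclave_measurement in self._approved:
            return self._model_key
        return None


# Illustrative measurements: hashes of two made-up runtime images.
approved_runtime = hashlib.sha256(b"inference-runtime-v1").hexdigest()
unknown_runtime = hashlib.sha256(b"modified-runtime").hexdigest()

broker = ModelKeyBroker({approved_runtime})
print(broker.release_key(approved_runtime) is not None)  # key released
print(broker.release_key(unknown_runtime) is None)       # key withheld
```

Because the key never reaches an unapproved environment, a copy of the encrypted weights on the host's disk is of little use to anyone who cannot run the approved code inside a TEE.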

Confidential Inference: Ensuring the privacy of input data during real-time AI predictions is crucial for applications dealing with sensitive user information, such as financial transactions, medical diagnoses, or personal recommendations. With confidential inference, input data remains encrypted as it enters the TEE, is decrypted and processed by the AI model within the secure enclave, and the results are re-encrypted before being sent back. The sensitive input is therefore never exposed in plaintext to the underlying infrastructure, which gives users a strong privacy guarantee. Google Cloud, for example, offers Confidential VMs and GKE nodes with NVIDIA H100 GPUs, extending hardware-based data protection from the CPU to the GPU for confidential AI/ML workloads. It also offers Confidential Vertex AI Workbench to improve data privacy for machine learning developers, as described in its blog post on how confidential computing lays the foundation for trusted AI.

Data Clean Rooms for AI: Data clean rooms provide a secure environment for combining sensitive datasets from different sources for joint AI analytics, without any single party gaining access to the others' raw data. Confidential computing provides the ideal foundation for these clean rooms, creating a secure enclave where data from multiple contributors can be brought together, decrypted within the TEE, and used for AI training or analysis. The results of the analysis can then be shared with the authorized parties, while the individual raw datasets remain private and protected from all other participants and the cloud provider. This enables collaborative insights and innovation while adhering to strict privacy regulations. Examples include anti-money laundering efforts or drug development, where multiple entities can contribute data for analysis without exposing sensitive information, as detailed in Microsoft Azure's confidential computing use cases.
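A clean room's core rule, that raw records go in and only approved aggregates come out, might look like the following sketch. `CleanRoom`, the shared customer IDs, and the minimum overlap threshold are all illustrative assumptions rather than any vendor's API.

```python
from typing import Dict


class CleanRoom:
    """Simulated TEE that joins two parties' records and releases only aggregates."""

    def __init__(self, min_group_size: int = 3):
        self._datasets: Dict[str, Dict[str, float]] = {}  # raw data stays private
        self._min_group_size = min_group_size

    def contribute(self, party: str, records: Dict[str, float]) -> None:
        # records maps a shared (hypothetical) customer ID to a numeric value
        self._datasets[party] = dict(records)

    def average_over_overlap(self, party_a: str, party_b: str) -> float:
        a, b = self._datasets[party_a], self._datasets[party_b]
        shared_ids = sorted(a.keys() & b.keys())
        # Small overlaps could reveal individual records, so refuse to answer.
        if len(shared_ids) < self._min_group_size:
            raise ValueError("overlap too small to release an aggregate")
        return sum(a[i] + b[i] for i in shared_ids) / len(shared_ids)


room = CleanRoom(min_group_size=3)
room.contribute("bank_a", {"c1": 100.0, "c2": 200.0, "c3": 300.0, "c4": 50.0})
room.contribute("bank_b", {"c1": 10.0, "c2": 20.0, "c3": 30.0, "c9": 5.0})

# Average combined value across the customers both banks have in common.
print(room.average_over_overlap("bank_a", "bank_b"))
```

Neither bank can call a method that returns the other's records. The only output is a single number computed over customers they share, and only when that group is large enough.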

Technical Deep Dive (Conceptual Code Example)

To illustrate how an AI inference request might flow through a confidential environment, consider the following conceptual Python example. This code simulates the core principle of a Trusted Execution Environment (TEE) where sensitive data and model weights are protected from the outside world.

```python
# Conceptual example: AI inference in a confidential computing environment

class ConfidentialAIEnclave:
    def __init__(self, model_weights):
        # In a real TEE, model_weights would be loaded securely and never exposed
        self._model = self._load_secure_model(model_weights)

    def _load_secure_model(self, weights):
        # Simulate secure loading within the TEE
        print("Model loaded securely within the TEE.")
        return f"SecureModel({weights})"  # Represents a loaded AI model

    def process_data_securely(self, encrypted_input_data):
        print(f"Received encrypted data: {encrypted_input_data}")

        # In a real TEE, data would be decrypted *inside* the TEE
        decrypted_data = f"Decrypted({encrypted_input_data})"
        print(f"Data decrypted within TEE: {decrypted_data}")

        # Perform inference using the securely loaded model
        inference_result = f"InferenceResult({self._model}, {decrypted_data})"
        print(f"Inference performed within TEE: {inference_result}")

        # Encrypt the result before sending it out
        encrypted_result = f"Encrypted({inference_result})"
        print(f"Result encrypted within TEE: {encrypted_result}")

        return encrypted_result


# --- Outside the confidential environment ---
# This part represents the untrusted host or client
print("--- Simulating interaction with a Confidential AI Enclave ---")

# Imagine your sensitive AI model weights
my_ai_model_weights = "SuperSecretModelWeights123"
enclave = ConfidentialAIEnclave(my_ai_model_weights)

# Your sensitive input data (e.g., patient records, financial data)
sensitive_user_data = "EncryptedPatientDataXYZ"

# Send encrypted data to the confidential enclave for processing
secure_output = enclave.process_data_securely(sensitive_user_data)

print(f"\nReceived encrypted output from enclave: {secure_output}")
print("The raw data and model weights were never exposed outside the simulated TEE.")
```

In this conceptual example, the ConfidentialAIEnclave class simulates the behavior of a TEE. When it is initialized, the _load_secure_model method stands in for loading model weights inside the TEE so that they are not visible outside it. The process_data_securely method shows the rest of the flow: encrypted input is received, decrypted inside the simulated TEE, processed with the loaded model, and the result is re-encrypted before being returned. No real cryptography or hardware isolation takes place here. The strings only label each stage, and the weights are passed in as an ordinary Python value, which a real deployment would avoid by delivering them encrypted. The key takeaway is the data flow: in a real TEE, both the sensitive input and the proprietary model weights exist in plaintext only inside the enclave and are never exposed to the untrusted host.

Challenges and Future Outlook

While confidential computing offers a transformative approach to AI security, it has limitations. TEEs add overhead, for example from memory encryption and from transitions into and out of the protected environment, which can reduce performance compared to conventional environments. Developing and deploying applications within TEEs is also more complex and presents a learning curve for developers. The ecosystem, while growing rapidly, is still maturing and depends on continued collaboration among hardware manufacturers, cloud providers, and software developers. The Confidential Computing Consortium (CCC), a project of The Linux Foundation, is working to define industry-wide standards and promote open-source tools that address these challenges, and it is a useful starting point for following the development and adoption of confidential computing.

Despite these challenges, confidential AI has considerable potential. As hardware advances improve TEE performance and ease of use, and as the software ecosystem matures, confidential computing is likely to become a standard component of secure AI deployments. This will let organizations apply AI to their most sensitive data, fostering innovation in regulated industries and enabling new forms of secure collaboration, from privacy-preserving healthcare AI to secure financial analytics and the protection of critical national infrastructure. Use cases published by the Confidential Computing Consortium, such as those highlighted by Intel, describe organizations in healthcare, finance, and other industries that report improved security and compliance without compromising performance. As IBM notes, confidential computing helps eliminate the largest barrier to moving sensitive or highly regulated data sets and application workloads to the public cloud by protecting data even while in use.
