Letting a local LLM (such as Llama 3 running under Ollama) work out what a user wants and call Python functions on demand is one of the most useful capabilities you can give it.

The foundation of this interaction is the Model Context Protocol (MCP), an open protocol that standardizes how applications expose tools and context to a model.

In this blog, I'll show you a simple working example of an MCP Client and MCP Server communicating locally using pure stdio — no networking needed!

🔹 How It Works

✅ MCP Server

The MCP Server acts as a toolbox:

- Exposes Python functions (add, multiply, etc.)

- Waits silently for requests to execute tools

- Executes the requested function and returns the result

Example:

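```python
from mcp.server.fastmcp import FastMCP

# Name the server; clients see this name during initialization.
mcp = FastMCP("calculator")

@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b

@mcp.tool()
def multiply(a: int, b: int) -> int:
    """Multiply two integers."""
    return a * b

if __name__ == "__main__":
    # Serve requests over stdin/stdout until the client disconnects.
    mcp.run(transport="stdio")
```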

The Server registers its tools and then waits for client requests. Save it as math_server.py, the filename the client example launches.
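If the decorator feels like magic, here is a rough mental model of what "registering a tool" means. This is a minimal sketch in plain Python, not how FastMCP is actually implemented: the `TOOLS` dict, the `tool` decorator, and `handle_call` are hypothetical names I made up for illustration.

```python
from typing import Any, Callable

# Hypothetical stand-in for a tool registry; NOT the FastMCP implementation.
TOOLS: dict[str, Callable[..., Any]] = {}

def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function under its own name and return it unchanged."""
    TOOLS[func.__name__] = func
    return func

@tool
def add(a: int, b: int) -> int:
    return a + b

@tool
def multiply(a: int, b: int) -> int:
    return a * b

def handle_call(name: str, arguments: dict[str, Any]) -> Any:
    """Look up a registered tool by name and run it with keyword arguments."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**arguments)

print(handle_call("add", {"a": 5, "b": 8}))  # 13
```

The real server does the same kind of name-based lookup, plus schema generation from the type hints and the protocol handling around it.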

✅ MCP Client

The MCP Client is the messenger:

- Lists available tools

- Passes the tool descriptions to the LLM

- Forwards tool call requests to the Server

- Collects and returns results

The Client is like a translator and dispatcher — handling everything between the model and the tools.

Example:

```python
import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

async def main():
    # Launch the server as a subprocess and talk to it over stdin/stdout.
    server_params = StdioServerParameters(command="python", args=["math_server.py"])

    # stdio_client is an async context manager that yields (read, write) streams.
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()

            tools = await session.list_tools()
            print("Available tools:", [tool.name for tool in tools.tools])

            result = await session.call_tool("add", {"a": 5, "b": 8})
            print("Result of add(5,8):", result.content[0].text)

if __name__ == "__main__":
    asyncio.run(main())
```

The Client manages the conversation and tool execution.
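The example above stops short of the "hand the tools to the LLM" step, so here is a hedged sketch of it. I'm assuming the tool objects returned by `list_tools()` carry `name`, `description`, and `inputSchema` attributes, and that the model side accepts the OpenAI-style function schema that Ollama's chat API uses for tools. `to_ollama_tools` is a hypothetical helper, and the `SimpleNamespace` object just stands in for a real MCP tool so the snippet runs without a server.

```python
from types import SimpleNamespace
from typing import Any

def to_ollama_tools(mcp_tools: list[Any]) -> list[dict[str, Any]]:
    """Map MCP tool descriptions to a function-calling tool list (assumed Ollama format)."""
    return [
        {
            "type": "function",
            "function": {
                "name": t.name,
                "description": t.description or "",
                "parameters": t.inputSchema,  # already a JSON Schema object
            },
        }
        for t in mcp_tools
    ]

# Stand-in for one entry of `(await session.list_tools()).tools`.
add_tool = SimpleNamespace(
    name="add",
    description="Add two integers.",
    inputSchema={
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
)

ollama_tools = to_ollama_tools([add_tool])
print(ollama_tools[0]["function"]["name"])  # add
```

In a real client you would pass `tools.tools` from the session into this helper and hand the result to the chat call as its tool list.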

🔹 Communication: stdio

Instead of HTTP or network APIs, the Client and Server communicate directly over stdin/stdout:

- Client ➡️ Server: Requests like list_tools and call_tool

- Server ➡️ Client: Replies with tools and results

✅ Fast, lightweight, private communication

✅ Perfect for local LLM setups
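To see what actually travels over the pipe: MCP messages are JSON-RPC 2.0, and on the stdio transport each message is one line of JSON. The SDK calls `list_tools()` and `call_tool()` correspond to the wire methods `tools/list` and `tools/call`. The sketch below only builds and prints those request lines (the initialization handshake that comes first is left out), and `jsonrpc_request` is a hypothetical helper, not part of the SDK.

```python
import json
from typing import Any, Optional

def jsonrpc_request(
    request_id: int, method: str, params: Optional[dict[str, Any]] = None
) -> str:
    """Serialize one JSON-RPC 2.0 request as a single newline-terminated line."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message) + "\n"

# What session.list_tools() sends, roughly.
list_request = jsonrpc_request(1, "tools/list")

# What session.call_tool("add", {"a": 5, "b": 8}) sends, roughly.
call_request = jsonrpc_request(
    2, "tools/call", {"name": "add", "arguments": {"a": 5, "b": 8}}
)

print(list_request, end="")
print(call_request, end="")
```

The server answers each request with a response line carrying the same `id`, which is how the client matches replies to requests.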

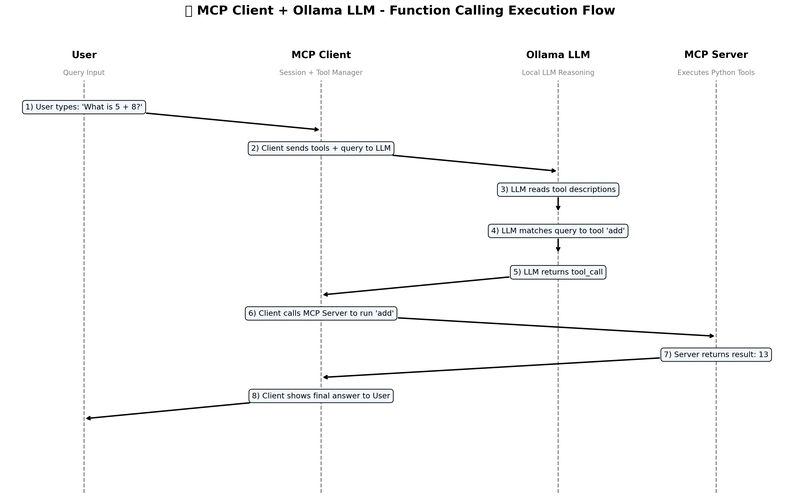

📈 Visual Flow

Why This Pattern Matters

- No API servers, no networking complexity

- Fast, local communication: messages travel over pipes between two processes on the same machine

- Easily extendable: add new tools, no need to rebuild the architecture

- Foundation for building smart autonomous agents with local LLMs
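The agent part of that last point boils down to one step repeated in a loop: the model replies with tool calls, the client runs them and feeds the results back. Here is a minimal sketch of that dispatch step. I'm assuming the model's message follows the shape Ollama uses for tool calls (`tool_calls` entries with a `function` holding `name` and `arguments`). `dispatch_tool_calls` and `fake_call_tool` are hypothetical names, and the fake stands in for `session.call_tool` so the snippet runs on its own.

```python
import asyncio
from typing import Any, Awaitable, Callable

async def dispatch_tool_calls(
    message: dict[str, Any],
    call_tool: Callable[[str, dict[str, Any]], Awaitable[Any]],
) -> list[dict[str, Any]]:
    """Run every tool call the model asked for and return tool-role messages."""
    replies = []
    for call in message.get("tool_calls") or []:
        name = call["function"]["name"]
        arguments = call["function"]["arguments"]
        result = await call_tool(name, arguments)
        replies.append({"role": "tool", "content": str(result)})
    return replies

# Fake stand-in for the MCP session so the sketch runs without a server.
async def fake_call_tool(name: str, arguments: dict[str, Any]) -> Any:
    return {"add": lambda a, b: a + b}[name](**arguments)

# Assumed shape of an assistant message that requests one tool call.
model_message = {
    "role": "assistant",
    "content": "",
    "tool_calls": [{"function": {"name": "add", "arguments": {"a": 5, "b": 8}}}],
}

replies = asyncio.run(dispatch_tool_calls(model_message, fake_call_tool))
print(replies)
```

With a real session you would pass `session.call_tool` instead of the fake and pull the text out of the result (for example `result.content[0].text`) before appending it to the conversation.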

You can easily extend this pattern to:

- Add more Python tools

- Connect Streamlit or FastAPI frontends

- Dockerize the full stack

📚 Full GitHub Project

👉 https://github.com/rajeevchandra/mcp-client-server-example

✅ MCP Server & MCP Client Code

✅ Local LLM setup with Ollama

✅ Full README + Diagrams

🚀 Final Thought

"Smarter agents don’t know everything — they know how to use the right tool at the right time."

Would love to hear your thoughts if you check it out or build something on top of it! 🚀
