Empowering developers to monitor, debug, and optimize Large Language Model (LLM) applications with ease.

Why I Built OpenLLM Monitor

As LLMs like GPT-4, Llama, and Mistral become the engines behind more products and research, managing and debugging these complex systems has become a new challenge. Existing monitoring tools are often built for traditional applications, so they don't offer the LLM-specific insights developers need.

OpenLLM Monitor was born from my own pain points:

- Tracking LLM requests and responses for debugging

- Detecting hallucinations and prompt drift

- Understanding performance bottlenecks and latency

- Auditing and improving user experience with LLMs

What is OpenLLM Monitor?

OpenLLM Monitor is an open-source, plug-and-play toolkit that helps you:

- Monitor every prompt, completion, error, and latency metric in real time

- Debug user sessions and LLM behavior with rich, contextual logs

- Analyze trends, usage, and anomalies to optimize your LLM apps

Whether you're an indie hacker, a startup, or an enterprise ML team, OpenLLM Monitor makes observability for LLMs as frictionless as possible.
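To make "monitor every prompt, completion, error, and latency metric" concrete, here is a minimal sketch in plain Python. It is not the OpenLLM Monitor SDK: `LLMRecord`, `monitored_call`, and the `fake_llm` stand-in are hypothetical names I'm using only to illustrate the kind of data such a tool captures per call.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class LLMRecord:
    """One logged LLM call (hypothetical schema, not OpenLLM Monitor's)."""
    prompt: str
    completion: Optional[str]
    error: Optional[str]
    latency_ms: float


def monitored_call(
    llm: Callable[[str], str], prompt: str, log: List[LLMRecord]
) -> Optional[str]:
    """Call `llm(prompt)` and append a record of the outcome to `log`."""
    start = time.perf_counter()
    completion, error = None, None
    try:
        completion = llm(prompt)
    except Exception as exc:  # record the failure instead of losing it
        error = f"{type(exc).__name__}: {exc}"
    latency_ms = (time.perf_counter() - start) * 1000.0
    log.append(LLMRecord(prompt, completion, error, latency_ms))
    return completion


def fake_llm(prompt: str) -> str:
    """Stand-in for a real provider call; raises on an empty prompt."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()


log: List[LLMRecord] = []
monitored_call(fake_llm, "hello", log)  # successful call
monitored_call(fake_llm, "", log)       # failing call, still logged
```

After these two calls, `log` holds one record with a completion and one with an error message, each with its own latency. A real setup would ship these records to a backend rather than keep them in a list.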

Key Features

- Plug-and-Play SDK – Integrate into any Python, Node, or REST-based LLM pipeline in minutes

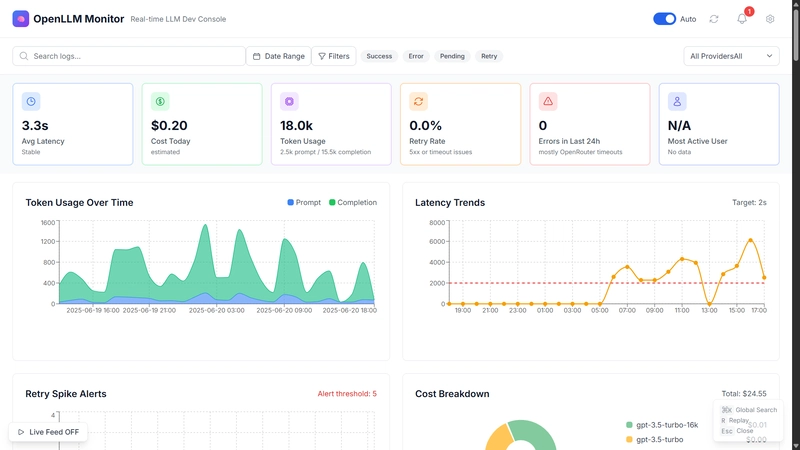

- Real-Time Dashboard – Visualize prompt/response flows, error rates, and KPIs

- Traceable Sessions – Drill down from a user session to individual API calls

- Anomaly Detection – Get alerted for outlier responses, hallucinations, and failures

- Open Source – Free, MIT-licensed, and extensible. Your data, your rules.
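This post doesn't describe how the anomaly detection works internally, so the following is only an illustration of the general idea, not the project's method. It flags latency outliers using the median absolute deviation (MAD); the function name `latency_outliers` and the threshold `k` are assumptions chosen for the example.

```python
from statistics import median
from typing import List


def latency_outliers(latencies_ms: List[float], k: float = 5.0) -> List[int]:
    """Return indices of latencies more than k MADs above the median."""
    if not latencies_ms:
        return []
    med = median(latencies_ms)
    # Median absolute deviation: a spread estimate that outliers barely move.
    mad = median([abs(x - med) for x in latencies_ms])
    if mad == 0:
        # At least half the samples equal the median; flag anything slower.
        return [i for i, x in enumerate(latencies_ms) if x > med]
    return [i for i, x in enumerate(latencies_ms) if (x - med) / mad > k]


samples = [120.0, 130.0, 125.0, 118.0, 2400.0, 122.0]
slow = latency_outliers(samples)  # indices of unusually slow calls
```

A median-based threshold is used here instead of mean and standard deviation because a single very slow request would inflate both and could hide itself. Detecting hallucinations or outlier responses needs content-level checks that this sketch does not attempt.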

📊 Dashboard Screenshots

Real-time monitoring of all your LLM requests with comprehensive analytics

Detailed logging of all LLM API calls with filtering and search

Test and compare prompts across different providers and models

Why Open Source?

I believe the future of AI should be transparent, trustworthy, and collaborative. By making OpenLLM Monitor open source, I invite you to:

- Contribute: Suggest features, file issues, or submit PRs!

- Self-host: Deploy it on your own infra — no vendor lock-in

- Shape the roadmap: Let's build what the community needs most

Get Started

GitHub: https://github.com/prajeesh-chavan/OpenLLM-Monitor

Let’s Build Together!

If you're building with LLMs, try out OpenLLM Monitor and let me know what you think. I'm eager for your feedback, feature requests, and collaborations.

Star ⭐ the repo, share with your network, and help spread the word!

Follow me on Medium and GitHub for more AI dev tools and open source projects.
