MCP (Model Context Protocol) has gained popularity due to its ease of use, standardization, and efficiency in integrating AI models with external systems. MCP is particularly useful in AI-driven automation, agent-based systems, and LLM-powered applications, making it a go-to choice for developers looking to enhance AI interactions.

In this post, we'll take an in-depth look at running an AWS Labs MCP server inside a Docker container. We'll use a large language model (LLM), DeepSeek in this case, to send prompts that execute actions through that server.

If you're already familiar with AWS and want to see how MCP executes specific actions in real time, the AWS Labs MCP servers are a great starting point for hands-on experimentation.

I will cover MCP architecture, and how an AWS MCP server interacts with the LLM, in more detail in a separate post. Here I will focus on getting things working quickly so you can see MCP in action.

Steps

1. Clone the AWS MCP git repo

```shell
git clone https://github.com/awslabs/mcp.git
```

Navigate to the directory of the MCP server you want to run as a Docker container. In this post we will generate some AWS architecture diagrams, so I will use aws-diagram-mcp-server:

```shell
cd mcp/src/aws-diagram-mcp-server/
```

2. Build the Docker image

Build a Docker image from the server's Dockerfile:

```shell
docker build -t awslabs/aws-diagram-mcp-server .
```
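Before wiring the image into an MCP client, you may want to confirm that the tag actually exists locally. Here is a minimal sketch that does this by running `docker image inspect`, which exits with a non-zero status when no local image matches the tag. The `image_exists` helper and the injectable `run` parameter (used so the check can be tested without Docker) are illustrative assumptions, not part of the AWS Labs repo.

```python
import subprocess
from typing import Callable

# Tag used in the docker build command above; change it if you tagged differently.
IMAGE = "awslabs/aws-diagram-mcp-server"


def image_exists(
    tag: str,
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Return True if `docker image inspect <tag>` succeeds, i.e. the image is present locally.

    `run` defaults to subprocess.run; it is a parameter only so the check can be faked in tests.
    """
    result = run(
        ["docker", "image", "inspect", tag],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    # docker image inspect exits non-zero when no local image matches the tag.
    return result.returncode == 0
```

Calling `image_exists(IMAGE)` on a machine with Docker installed returns `False` if the build failed or used a different tag. In that case the MCP client will not be able to start the server.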

Once the image builds successfully, we can connect to this MCP server from an LLM agent. I will use the Cline extension for VS Code, which I have found easy to configure and convenient for running MCP servers.

3. Configure the LLM API in VS Code

- Install the Cline extension in VS Code.
- Add the LLM API.

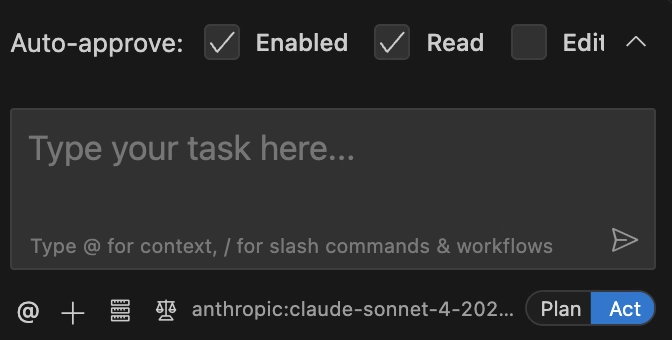

Open the Cline extension and configure the LLM, which is the model API that Cline calls and that decides when to use the MCP server's tools. By default, Cline uses Anthropic's Claude Sonnet 4 model. You can change this with the API Provider option.

I selected DeepSeek, which is my personal favourite. Enter your API key and click Done. The screenshots below show how to change the API provider and add the key.

*Screenshots: LLM API provider; Update API Key*

Once the LLM API is configured, send a quick "hello" to confirm that the model responds.

4. Add the MCP server in Cline

With the LLM API working, we can add the MCP server to Cline. Select the MCP Servers icon in Cline and click Manage MCP Servers.

Click Configure MCP Servers. This opens the `cline_mcp_settings.json` file in VS Code.

Add the following entry for the AWS diagram MCP server. The Docker-based JSON configuration for each MCP server is listed in that server's documentation.

```json
"awslabs.aws-diagram-mcp-server": {
  "command": "docker",
  "args": [
    "run",
    "--rm",
    "--interactive",
    "--env",
    "FASTMCP_LOG_LEVEL=ERROR",
    "awslabs/aws-diagram-mcp-server"
  ],
  "env": {},
  "disabled": false,
  "autoApprove": []
}
```

Place this entry inside the top-level `mcpServers` object of `cline_mcp_settings.json`, then save the file with Cmd+S / Ctrl+S.

Saving the file spins up a container for the MCP server from the Docker image you built. Make sure the image name in `cline_mcp_settings.json` matches the image you built.

In my case, it is `awslabs/aws-diagram-mcp-server`.
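To show what a client does with this entry, here is a simplified illustration. It is not Cline's actual code. It joins `command` and `args` into the command line that gets spawned, and it builds the `initialize` request that an MCP client sends first. With the MCP stdio transport, that request goes over the server's stdin as newline-delimited JSON-RPC. The `mcpServers` wrapper, the client name and version, and the `2024-11-05` protocol version string are assumptions chosen for illustration.

```python
import json
from typing import Any

# Assumed shape of cline_mcp_settings.json: server entries live under a
# top-level "mcpServers" object (the entry mirrors the one added above).
SETTINGS: dict[str, Any] = {
    "mcpServers": {
        "awslabs.aws-diagram-mcp-server": {
            "command": "docker",
            "args": [
                "run", "--rm", "--interactive",
                "--env", "FASTMCP_LOG_LEVEL=ERROR",
                "awslabs/aws-diagram-mcp-server",
            ],
            "env": {},
            "disabled": False,
            "autoApprove": [],
        }
    }
}


def launch_argv(settings: dict[str, Any], server: str) -> list[str]:
    """Join "command" and "args" into the argv a client would spawn for `server`."""
    entry = settings["mcpServers"][server]
    if entry.get("disabled", False):
        raise ValueError(f"{server} is disabled")
    return [entry["command"], *entry["args"]]


def initialize_message(client_name: str, client_version: str) -> str:
    """Build an MCP initialize request framed for stdio: one JSON object per line."""
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",  # assumed protocol revision
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": client_version},
        },
    }
    # json.dumps without indent emits no newlines, so "\n" safely ends the message.
    return json.dumps(request) + "\n"
```

To start the server, the argv could be passed to `subprocess.Popen(argv, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True)`, and the message written to the process's stdin. `--interactive` keeps the container's stdin open for this exchange, and `--rm` removes the container once the server exits.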

5. Run and test your MCP server to see it in action

Now let's generate some AWS diagrams and see our MCP server in action.

Here is the prompt I sent to the LLM:

> generate an AWS diagram
>
> an ASG and an RDS in private subnet. an ALB in front of the ASG as its target group and Route53 DNS to ALB as backend. Route53 with https://example.com

While the LLM makes its API requests, Cline shows the running cost of the prompt, the token counts, and cache reads/writes. That is one of the things I like most about Cline.

Depending on the operations it performs, Cline may ask you to approve actions several times. Click Approve each time.
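If the repeated approval prompts get in the way, use the `autoApprove` array in the server entry. Cline runs the tools listed there without asking first. The minimal sketch below adds tool names to that array, assuming the entry sits under a top-level `mcpServers` object. The tool name `generate_diagram` is hypothetical, so check the tool names Cline shows for the server before using it. Auto-approving a tool means you give up the chance to review each call.

```python
import json
from pathlib import Path
from typing import Any, Sequence

SERVER = "awslabs.aws-diagram-mcp-server"
# Hypothetical tool name; replace with the tool names Cline lists for the server.
TOOLS: tuple[str, ...] = ("generate_diagram",)


def add_auto_approve(settings: dict[str, Any], server: str, tools: Sequence[str]) -> list[str]:
    """Add tool names to a server's "autoApprove" list in place, skipping duplicates.

    Assumes server entries live under a top-level "mcpServers" object.
    """
    approved = settings["mcpServers"][server].setdefault("autoApprove", [])
    for tool in tools:
        if tool not in approved:
            approved.append(tool)
    return approved


def update_settings_file(path: Path, server: str = SERVER, tools: Sequence[str] = TOOLS) -> None:
    """Read the settings file, update the server's autoApprove list, and write it back."""
    settings = json.loads(path.read_text())
    add_auto_approve(settings, server, tools)
    path.write_text(json.dumps(settings, indent=2) + "\n")
```

Editing the dictionary in a separate function keeps the logic testable without touching the real settings file.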

When the task completes, Cline shows a completion message with the details. In my case, the request to generate the diagram cost a total of $0.0056.

Note

Because the MCP server runs inside a Docker container, the files it generates are written to the container's file system, not to your local one. To view them, copy them from the container to your machine. In this case the diagrams are generated in /tmp/generated-diagrams/, so copy them with:

```shell
docker cp <container_name>:/tmp/generated-diagrams generated-diagrams/
```
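The config above does not pass `--name`, so `<container_name>` is the random name Docker assigned. Because of `--rm`, the container and its files disappear once the server exits, so copy them while Cline keeps the server running. The sketch below finds the name with `docker ps --filter ancestor=<image>` and then runs the same `docker cp`. The helper names are hypothetical. It refuses to guess when zero or several matching containers are running.

```python
import subprocess

IMAGE = "awslabs/aws-diagram-mcp-server"
CONTAINER_DIR = "/tmp/generated-diagrams"


def find_containers_cmd(image: str) -> list[str]:
    """docker ps command that prints only the names of running containers started from `image`."""
    return ["docker", "ps", "--filter", f"ancestor={image}", "--format", "{{.Names}}"]


def parse_names(output: str) -> list[str]:
    """Split `docker ps --format {{.Names}}` output into container names, ignoring blank lines."""
    return [line.strip() for line in output.splitlines() if line.strip()]


def copy_cmd(container: str, src: str = CONTAINER_DIR, dest: str = "generated-diagrams/") -> list[str]:
    """docker cp command matching the one above, for a specific container name."""
    return ["docker", "cp", f"{container}:{src}", dest]


def copy_diagrams(image: str = IMAGE) -> str:
    """Copy the diagrams out of the single running container started from `image`."""
    out = subprocess.run(
        find_containers_cmd(image), capture_output=True, text=True, check=True
    ).stdout
    names = parse_names(out)
    if len(names) != 1:
        raise RuntimeError(f"expected one running {image} container, found {names}")
    subprocess.run(copy_cmd(names[0]), check=True)
    return names[0]
```
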
