Are you looking to integrate artificial intelligence into your .NET APIs without a complete overhaul? Consider Semantic Kernel, a library that connects AI prompts to your existing application logic, adding intelligence and automation with minimal changes.

Semantic Kernel is an open-source AI orchestration library from Microsoft. It is model-agnostic, so you can use the models you prefer, whether self-hosted or from a third-party provider. It also supports observability and provides security building blocks such as filters, which you can use to guard against prompt injection attacks. Together, these help you build reliable, enterprise-grade applications.

In this post, you'll learn how to:

- Set up Semantic Kernel in an ASP.NET Core Web API

- Configure the model for the application

- Describe your existing code to the AI model

- Add a simple prompt endpoint that accepts user requests

- Use filters to implement logging that tracks the end-to-end request trail

Step-by-step instructions

Step 1: Install Semantic Kernel

Add the NuGet package to your existing Web API project:

```bash
dotnet add package Microsoft.SemanticKernel
```

Step 2: Add Semantic Kernel to your web application builder

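```csharp
using Microsoft.SemanticKernel;

public static void Main(string[] args)
{
    var builder = WebApplication.CreateBuilder(args);

    // ...

    // Add Kernel
    builder.Services.AddKernel();

    // ... (registrations from the following steps, then builder.Build() and app.Run())
}
```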

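Step 3: Add the model. The code below uses a local model served by Ollama through its OpenAI-compatible endpoint. If you are using a third-party provider, set modelId and apiKey; you don't need the endpoint parameter.

```csharp
// The Uri-endpoint overload is marked experimental (SKEXP0010) in some Semantic Kernel versions.
#pragma warning disable SKEXP0010

// Add LLM: a local Llama 3.2 model served by Ollama's OpenAI-compatible API
builder.Services.AddOpenAIChatCompletion(
    modelId: "llama3.2:latest",
    endpoint: new Uri("http://localhost:11434/v1"),
    apiKey: ""); // Ollama does not check the API key
```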
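For a hosted provider such as OpenAI, the same registration without `endpoint` might look like the sketch below. The model ID and the `OpenAI:ApiKey` configuration key are assumptions, so substitute your own. Keep the key out of source control, for example in user secrets or an environment variable.

```csharp
// Add LLM: a hosted OpenAI model (model ID and configuration key are placeholders).
builder.Services.AddOpenAIChatCompletion(
    modelId: "gpt-4o-mini",
    apiKey: builder.Configuration["OpenAI:ApiKey"]
        ?? throw new InvalidOperationException("Missing configuration value 'OpenAI:ApiKey'."));
```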

Step 4: Describe your existing code to the AI model using function descriptors, that is, the `[KernelFunction]` and `[Description]` attributes. Make sure each description matches the intent of the method; this is what lets the model identify the right functions to call.

Because LLMs are trained predominantly on Python code, use snake_case for kernel function names and parameters.
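Here is a minimal sketch of such a class. It assumes a hypothetical in-memory product service: `Product`, `IProductService`, and `ProductService` are illustrative names, not taken from a real project. Only `[KernelFunction]` (Semantic Kernel) and `[Description]` (System.ComponentModel) are library attributes.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Linq;
using Microsoft.SemanticKernel;

// Hypothetical product model used for illustration.
public record Product(int Id, string Name, decimal Price);

// Hypothetical service interface, resolved from DI when the plugin is registered.
public interface IProductService
{
    Product CreateProduct(string name, decimal price);
    Product? GetProduct(string name);
}

// In-memory implementation; the attributes tell the model what each method does.
public class ProductService : IProductService
{
    private readonly List<Product> _products = new();
    private readonly object _gate = new(); // guards _products across concurrent requests
    private int _nextId = 1;

    [KernelFunction("create_product")]
    [Description("Creates a new product with the given name and price, and returns the created product.")]
    public Product CreateProduct(
        [Description("The name of the product")] string name,
        [Description("The price of the product in US dollars")] decimal price)
    {
        lock (_gate)
        {
            var product = new Product(_nextId++, name, price);
            _products.Add(product);
            return product;
        }
    }

    [KernelFunction("get_product")]
    [Description("Gets an existing product by its name. Returns null if no product with that name exists.")]
    public Product? GetProduct([Description("The name of the product")] string name)
    {
        lock (_gate)
        {
            // Case-insensitive match, so "Tablecloth" finds "tablecloth".
            return _products.FirstOrDefault(p => string.Equals(p.Name, name, StringComparison.OrdinalIgnoreCase));
        }
    }
}
```

Because the list lives in memory, register the service as a singleton, for example `builder.Services.AddSingleton<IProductService, ProductService>();`. That way, products created by one request are visible to the next.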

Step 5: Register the class from Step 4 as a kernel plugin. You can also expose a class that uses HttpClient as a plugin, for example when your API calls another API to fulfill a request.

```csharp
// Add your existing services as Kernel plugins.
// IProductService must already be registered with the DI container.
builder.Services.AddTransient(serviceProvider =>
{
    Kernel k = new(serviceProvider);

    var productRepository = serviceProvider.GetRequiredService<IProductService>();
    k.Plugins.AddFromObject(productRepository);

    return k;
});
```
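Here is a sketch of the HttpClient case: a plugin class that wraps calls to a downstream API. `InventoryApiPlugin`, the `inventory/{name}` route, and the base address are hypothetical placeholders. `AddHttpClient<T>` is the standard typed-client registration from IHttpClientFactory.

```csharp
using System;
using System.ComponentModel;
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.SemanticKernel;

// Hypothetical plugin that wraps a downstream inventory API.
public class InventoryApiPlugin
{
    private readonly HttpClient _httpClient;

    // Typed HttpClient supplied by IHttpClientFactory (see the registration below).
    public InventoryApiPlugin(HttpClient httpClient) => _httpClient = httpClient;

    [KernelFunction("get_stock_level")]
    [Description("Gets the current stock level for a product from the inventory service.")]
    public async Task<string> GetStockLevelAsync(
        [Description("The name of the product")] string name)
    {
        // The route is an assumption; the raw response body is returned for the model to read.
        return await _httpClient.GetStringAsync($"inventory/{Uri.EscapeDataString(name)}");
    }
}

// Registration in Program.cs (base address is a placeholder):
// builder.Services.AddHttpClient<InventoryApiPlugin>(client =>
//     client.BaseAddress = new Uri("https://inventory.example.com/"));
// ...and inside the Kernel factory above:
// k.Plugins.AddFromObject(serviceProvider.GetRequiredService<InventoryApiPlugin>());
```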

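Step 6: Add a sample prompt endpoint and invoke the kernel with the prompt.

```csharp
using Microsoft.AspNetCore.Mvc;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Connectors.OpenAI;

// Attribute routing makes the action reachable when the app uses app.MapControllers().
[ApiController]
[Route("api/[controller]")]
public class PromptController : ControllerBase
{
    private readonly Kernel _kernel;

    public PromptController(Kernel kernel)
    {
        _kernel = kernel;
    }

    [HttpGet]
    public async Task<IActionResult> HandlePromptAsync(string prompt)
    {
        // Let the model call the registered kernel functions automatically.
        OpenAIPromptExecutionSettings settings = new() { ToolCallBehavior = ToolCallBehavior.AutoInvokeKernelFunctions };

        var result = await _kernel.InvokePromptAsync<string>(prompt, new(settings));

        return Ok(result);
    }
}
```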
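`InvokePromptAsync` treats the incoming text as a prompt template, so any `{{...}}` syntax in user input is processed by the template engine. To pass user text through verbatim, one alternative is to call the chat completion service directly with a `ChatHistory`. The sketch below assumes the same kernel registration; the `ChatController` name and `api/chat` route are hypothetical.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;

[ApiController]
[Route("api/chat")]
public class ChatController : ControllerBase
{
    private readonly Kernel _kernel;

    public ChatController(Kernel kernel) => _kernel = kernel;

    [HttpGet]
    public async Task<IActionResult> HandleChatAsync([FromQuery] string prompt)
    {
        // Same auto-invocation behavior as PromptController.
        OpenAIPromptExecutionSettings settings = new() { ToolCallBehavior = ToolCallBehavior.AutoInvokeKernelFunctions };

        // The user's text is added as a plain chat message, not parsed as a template.
        var history = new ChatHistory();
        history.AddUserMessage(prompt);

        var chat = _kernel.GetRequiredService<IChatCompletionService>();

        // Passing the kernel lets the connector call the registered plugin functions.
        var reply = await chat.GetChatMessageContentAsync(history, settings, _kernel);

        return Ok(reply.Content);
    }
}
```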

Step 7: Add a function invocation filter to log the request trail.

```csharp
using Microsoft.SemanticKernel;

// Logs each function the model calls, before and after it runs.
public class FunctionFilter : IAutoFunctionInvocationFilter
{
    public async Task OnAutoFunctionInvocationAsync(AutoFunctionInvocationContext context, Func<AutoFunctionInvocationContext, Task> next)
    {
        Console.WriteLine($"{nameof(FunctionFilter)}.FunctionInvoking - {context.Function.PluginName}.{context.Function.Name}");

        // Run the next filter in the pipeline, or the function itself.
        await next(context);

        Console.WriteLine($"{nameof(FunctionFilter)}.FunctionInvoked - {context.Function.PluginName}.{context.Function.Name}");
    }
}
```
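The filter above writes to the console. In an ASP.NET Core app you may prefer `ILogger`, which sends the trail to whatever logging providers are configured. Below is a sketch of that variant, which also records the arguments the model supplied and the function result. `LoggingFunctionFilter` is a hypothetical name.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel;

// Variant of FunctionFilter that logs through ILogger and includes arguments and results.
public class LoggingFunctionFilter : IAutoFunctionInvocationFilter
{
    private readonly ILogger<LoggingFunctionFilter> _logger;

    public LoggingFunctionFilter(ILogger<LoggingFunctionFilter> logger) => _logger = logger;

    public async Task OnAutoFunctionInvocationAsync(
        AutoFunctionInvocationContext context,
        Func<AutoFunctionInvocationContext, Task> next)
    {
        string functionName = $"{context.Function.PluginName}.{context.Function.Name}";

        // Flatten the arguments the model chose into "key=value" pairs.
        string arguments = context.Arguments is null
            ? "(none)"
            : string.Join(", ", context.Arguments.Select(a => $"{a.Key}={a.Value}"));

        _logger.LogInformation("Invoking {Function} with {Arguments}", functionName, arguments);

        await next(context);

        _logger.LogInformation("Invoked {Function}, result: {Result}", functionName, context.Result);
    }
}
```

Register it the same way as `FunctionFilter`, with `AddSingleton<IAutoFunctionInvocationFilter, LoggingFunctionFilter>()`. Arguments and results can contain user data, so consider log levels and retention before enabling this in production.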

Step 8: Register the filter with the DI container. A Kernel created from the service provider picks up the registered filters.

```csharp
builder.Services.AddSingleton<IAutoFunctionInvocationFilter, FunctionFilter>();
```
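Putting Steps 2, 3, 5, and 8 together, a minimal Program.cs might look like the sketch below. It assumes that `ProductService` is your implementation of `IProductService` (a hypothetical name) and that controllers are mapped with `MapControllers()`. The model settings are the Ollama ones from Step 3.

```csharp
using System;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.SemanticKernel;

public class Program
{
    public static void Main(string[] args)
    {
        var builder = WebApplication.CreateBuilder(args);

        builder.Services.AddControllers();

        // Your existing service (ProductService is an assumed implementation name).
        builder.Services.AddSingleton<IProductService, ProductService>();

        // Step 2: Semantic Kernel
        builder.Services.AddKernel();

        // Step 3: model served by a local Ollama instance
#pragma warning disable SKEXP0010
        builder.Services.AddOpenAIChatCompletion(
            modelId: "llama3.2:latest",
            endpoint: new Uri("http://localhost:11434/v1"),
            apiKey: "");
#pragma warning restore SKEXP0010

        // Step 5: kernel with existing services attached as plugins
        builder.Services.AddTransient(serviceProvider =>
        {
            Kernel k = new(serviceProvider);
            k.Plugins.AddFromObject(serviceProvider.GetRequiredService<IProductService>());
            return k;
        });

        // Step 8: request-trail filter
        builder.Services.AddSingleton<IAutoFunctionInvocationFilter, FunctionFilter>();

        var app = builder.Build();
        app.MapControllers();
        app.Run();
    }
}
```

`AddKernel()` also registers a `Kernel`. When a single `Kernel` is resolved, the DI container returns the last registration, which is the factory from Step 5. That is why the controller receives the kernel with plugins attached.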

Step 9: Let's test the endpoint. If you are using a local model, make sure it's up and running.

With the prompt "create a product tablecloth worth 100$", the endpoint returns 200, and a product named tablecloth is created with its price set to 100.

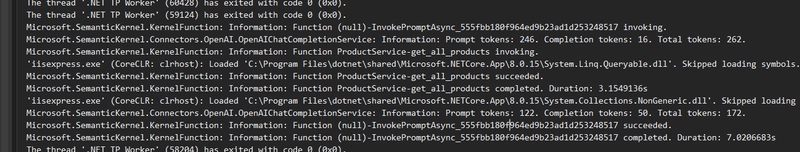

Here is the request audit trail logged by the filter.

Next, let's try retrieving the product

and check its request trail.
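To call the endpoint from code rather than a browser or HTTP tool, here is a minimal client sketch. It assumes the controller is routed at `api/prompt` and that you pass your app's base URL; `PromptClient` is a hypothetical helper, so adjust the route and port to your project.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper for sending prompts to the API.
public static class PromptClient
{
    // Builds the GET URL, escaping the prompt so spaces and symbols like "$" survive.
    public static string BuildPromptUrl(string baseUrl, string prompt) =>
        $"{baseUrl.TrimEnd('/')}/api/prompt?prompt={Uri.EscapeDataString(prompt)}";

    public static async Task<string> SendPromptAsync(HttpClient http, string baseUrl, string prompt)
    {
        using HttpResponseMessage response = await http.GetAsync(BuildPromptUrl(baseUrl, prompt));
        response.EnsureSuccessStatusCode(); // throws unless the status code is 2xx
        return await response.Content.ReadAsStringAsync();
    }
}
```

For example, `await PromptClient.SendPromptAsync(new HttpClient(), "http://localhost:5000", "create a product tablecloth worth 100$")`, where the port is a placeholder for the one your app listens on.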

Final Thoughts

That’s it! You’ve now extended your API with AI capabilities using Semantic Kernel. While AI can unlock powerful capabilities, it's important to remember that not every product needs AI. Use tools like Semantic Kernel when they genuinely help solve a customer problem or enhance the user experience. Start with the problem, not the technology, and let AI be a solution where it makes sense, not just a trend to follow.
