It's been three months since I released the Telltale GitHub Pages site, automating the creation and analysis of different Gradle build experiments. Previously, running experiments required manual analysis and a companion article. This automation has added both flexibility and speed, enabling us to run more experiments more easily.

As a quick reminder, Telltale is a framework that automates the infrastructure to run Gradle builds across different variants. It works alongside Build Experiment Results to aggregate the data published to Develocity. In the latest iteration, I’ve added integration with the OpenAI API to analyze and compare experiment results.

While many experiments didn’t yield meaningful insights, this article highlights a few that did.

Kotlin 2.1.20 vs 2.1.0

- Analysis: https://cdsap.github.io/Telltale/posts/2025-03-21-cdsap-150-experiment/#summary

- Results: https://cdsap.github.io/Telltale/reports/experiment_results_20250317175549.html

In this routine experiment, updating to a patch version of the Kotlin Gradle Plugin, I didn’t observe significant differences in build duration, configuration time, or Kotlin compilation duration. However, one metric did stand out:

The IR translation phase of the Kotlin compiler increased significantly in 2.1.20. Although the overall compilation time remained similar, we reported the behavior in JetBrains' YouTrack. The root cause remains unclear—it’s possible the compiler is shifting work between phases, keeping total time stable.

Comparing -Xms usage

- Analysis: https://cdsap.github.io/Telltale/posts/2025-03-21-cdsap-149-experiment/summary

- Results: https://cdsap.github.io/Telltale/reports/experiment_results_20250321002356.html

The -Xms JVM flag defines the initial memory allocation pool. In Android builds, I initially assumed it wouldn't matter much, until I saw Jason Pearson submit a PR to nowinandroid enabling this flag for Gradle and Kotlin processes. It seemed like the perfect candidate for analysis.
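As a rough sketch of what such a change can look like, the fragment below sets the initial heap equal to the maximum heap for both the Gradle daemon and the Kotlin daemon in `gradle.properties`. The 4g values are illustrative assumptions, not the sizes used in the PR or in this experiment; adjust them to the memory available on your machine or CI runner.

```properties
# gradle.properties (illustrative values, not taken from the experiment)
# JVM arguments for the Gradle daemon: -Xms sets the initial heap, -Xmx the maximum.
org.gradle.jvmargs=-Xmx4g -Xms4g
# JVM arguments for the Kotlin compile daemon.
kotlin.daemon.jvmargs=-Xmx4g -Xms4g
```

Starting the heap at its maximum size means the JVM does not have to grow it step by step during the build, which is one plausible reason for reduced GC activity.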

The result: a 3.5% reduction in build time by setting the initial heap size.

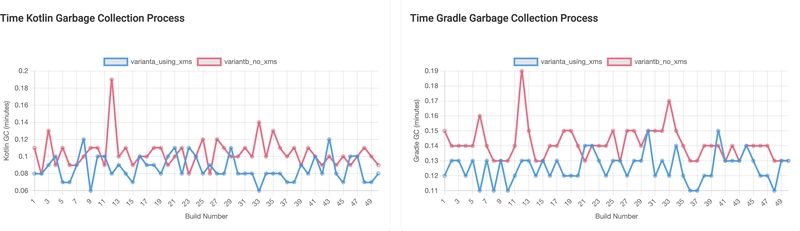

More interestingly, the number of garbage collection operations dropped significantly:

And naturally, GC duration also improved:

Bottom line: enabling -Xms led to more efficient memory usage and GC behavior. Thanks, Jason!

Reducing worker parallelization of the Kotlin compiler

- Analysis: https://cdsap.github.io/Telltale/posts/2025-03-18-cdsap-136-experiment/

- Results: https://cdsap.github.io/Telltale/reports/experiment_results_20250318005824.html

This experiment explored whether reducing Kotlin compiler parallelization, while keeping the overall Gradle worker pool unchanged, would impact build performance. In highly modularized projects, parallel compilation of many Kotlin modules can increase memory pressure on the system.

Since our experiments run on GitHub Actions runners with only 4 available workers, we’re constrained in testing more vertically scaled scenarios. Still, this setup allowed us to observe meaningful differences.

The overall build duration—in both mean and median—remained nearly identical between the two configurations. This indicated that reducing Kotlin parallelism did not negatively impact the total build time.

However, two key observations stood out:

- The aggregated Kotlin compiler duration remained the same, showing no major speed-up in the Kotlin phase itself.

- The memory usage of the Gradle process decreased slightly:

More notably, the task app:mergeExtDexDemoDebug, one of the most expensive in the build, improved by 10.3% when Kotlin compiler parallelization was reduced:

This suggests that reducing Kotlin parallelization can relieve memory pressure, enabling better performance for unrelated Gradle tasks. The Kotlin compiler might be competing for resources with other parts of the build, so reducing its concurrency can help other tasks run more efficiently.

Parallel vs G1

- Analysis: https://cdsap.github.io/Telltale/posts/2025-06-22-cdsap-194-experiment/

- Results: https://cdsap.github.io/Telltale/reports/experiment_results_20250622042015.html

In a previous experiment, we compared Parallel GC and G1 GC in the context of the nowinandroid project and observed that Parallel GC consistently outperformed G1. However, nowinandroid is a relatively lightweight project, and we wanted to validate whether those results held in a large build.

For this experiment, we used a project with 400 modules, which demands significantly more heap during the compilation phase. The results confirmed our hypothesis:

Parallel GC was still faster, with a ~6% reduction in build time (around 55 seconds) compared to G1 GC:

Tasks like Kotlin compilation and DEX merging saw consistent performance gains when using Parallel GC:

Even at the process level, the main Gradle process showed better performance and resource efficiency under Parallel GC:

This experiment reinforces that Parallel GC continues to perform better even in larger Android projects with high memory demands. However, it’s important to note that these results are specific to CI environments, where consistent throughput and short-lived processes are key.

If you're tuning a local development environment, G1 GC may still be preferable, as it tends to reclaim OS memory more efficiently, which can improve system responsiveness.

Ultimately, you should run these experiments in the context of your own project—the optimal GC strategy can vary based on project size, memory constraints, and whether you're building locally or in CI.
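If you want to try this comparison on your own build, one way to express the two variants is through the JVM arguments in `gradle.properties`. This is only a sketch: the 4g heap size is an assumption and not the value used in the experiment, and only one variant should be active at a time.

```properties
# gradle.properties (illustrative heap size, not taken from the experiment)
# Variant A: Parallel GC for the Gradle and Kotlin daemons
org.gradle.jvmargs=-Xmx4g -XX:+UseParallelGC
kotlin.daemon.jvmargs=-Xmx4g -XX:+UseParallelGC

# Variant B: G1 GC (uncomment these lines and comment out variant A)
# org.gradle.jvmargs=-Xmx4g -XX:+UseG1GC
# kotlin.daemon.jvmargs=-Xmx4g -XX:+UseG1GC
```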

Other experiments

As mentioned earlier, not every experiment reveals a regression or an improvement. Some simply serve as a safety net, verifying that build performance is not impacted by an update:

- AGP 8.9 vs 8.10.1: https://cdsap.github.io/Telltale/posts/2025-06-09-cdsap-189-experiment/

- Gradle 9.0.0-RC1 vs 8.14.2: https://cdsap.github.io/Telltale/posts/2025-06-19-cdsap-192-experiment/

Other experiments didn't confirm our initial hypothesis that a different configuration would reduce build duration:

- Using R8 in a separate process with 4 GB and G1: https://cdsap.github.io/Telltale/posts/2025-03-26-cdsap-152-experiment/

Final words

That wraps up the first review of experiments from these three months of automating execution and analysis with Telltale. We will publish another article in the coming months with the results of more experiments.

Happy Building
