The artificial intelligence landscape is undergoing a profound transformation as we move beyond the limitations of text-only systems. Multimodal AI—the technology that can seamlessly process, understand, and generate content across different data types—has emerged as one of the most significant developments in the field. Unlike traditional single-modality systems that operate within isolated data silos, multimodal AI integrates diverse information streams to develop a more comprehensive understanding of the world, much closer to how humans naturally perceive and interact with their environment.

This evolution represents a critical milestone in AI development, enabling systems to bridge the gap between different forms of information and create more natural, intuitive, and powerful applications. As we navigate through 2025, multimodal AI has moved from research laboratories into mainstream applications, fundamentally changing how we interact with technology.

Understanding Multimodal Data Types

Multimodal AI systems work with diverse data types, each bringing unique challenges and opportunities:

Text, Image, Audio, Video, and Sensor Data

- Text: Natural language in various forms (documents, conversations, social media)

- Images: Static visual information from photos, diagrams, charts, and other graphical representations

- Audio: Speech, music, environmental sounds, and acoustic patterns

- Video: Sequences of image frames over time, often paired with an audio track

- Sensor data: Information from IoT devices, wearables, environmental monitors, and more

Each modality contains unique information that, when combined, provides a richer understanding than any single data type alone.

Challenges in Processing Heterogeneous Data Sources

Processing diverse data types presents several significant challenges:

- Representation disparities: Different modalities have very different natural representations (for example, pixel grids for images, token sequences for text, waveforms for audio)

- Temporal alignment: Synchronizing information across time-based modalities

- Scale variations: Managing different volumes and density of information across modalities

- Missing data handling: Accounting for incomplete information in some modalities

- Computational efficiency: Balancing resources across multiple processing streams

These challenges require specialized techniques to overcome, as traditional single-modality approaches often fail when applied to multimodal data.
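To make the temporal alignment challenge concrete, here is a minimal sketch that matches each video frame to the nearest audio feature by timestamp. The function name `align_nearest`, the `tolerance` parameter, and the sample timestamps are illustrative assumptions, not part of any particular library.

```python
from bisect import bisect_left

def align_nearest(ref_times, other_times, other_values, tolerance):
    """For each reference timestamp, return the value from the other stream
    whose timestamp is closest, or None if nothing lies within `tolerance`.
    `other_times` must be sorted in ascending order."""
    aligned = []
    for t in ref_times:
        i = bisect_left(other_times, t)
        # Only the neighbours just before and just after t can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_times)]
        best = min(candidates, key=lambda j: abs(other_times[j] - t), default=None)
        if best is not None and abs(other_times[best] - t) <= tolerance:
            aligned.append(other_values[best])
        else:
            aligned.append(None)  # treat as missing for this frame
    return aligned

# Hypothetical data: video frames every 0.5 s, audio features at irregular times.
frame_times = [0.0, 0.5, 1.0]
audio_times = [0.02, 0.48, 1.6]
audio_feats = ["a0", "a1", "a2"]
aligned = align_nearest(frame_times, audio_times, audio_feats, tolerance=0.1)
print(aligned)
```

Frames with no audio feature inside the tolerance window come back as `None`, which ties alignment directly to the missing data problem: the downstream model has to decide what to do with those gaps.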

Cross-modal Relationships and Integration Approaches

The true power of multimodal AI emerges through understanding relationships between modalities:

- Complementary information: Different modalities providing supporting details (e.g., image captions adding context to visual content)

- Redundant information: The same concept represented across multiple modalities, enhancing reliability

- Conflicting information: Contradictions between modalities requiring reconciliation

- Emergent patterns: Insights visible only when considering multiple modalities together

Successful multimodal systems must identify and leverage these cross-modal relationships to build a coherent understanding that transcends individual data types.
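One simple way to handle redundant and conflicting signals is a confidence-weighted vote across modalities. The sketch below is only an illustration under that assumption; the `reconcile` function, the labels, and the confidence values are all hypothetical, and real systems usually learn this weighting rather than hard-coding it.

```python
from collections import defaultdict

def reconcile(predictions):
    """Combine per-modality predictions of the form {modality: (label, confidence)}.
    Returns the label with the highest summed confidence and a flag that is
    True when the modalities disagreed."""
    totals = defaultdict(float)
    for label, confidence in predictions.values():
        totals[label] += confidence
    winner = max(totals, key=totals.get)
    conflicting = len(totals) > 1
    return winner, conflicting

# Hypothetical sarcasm case: the words look positive, the tone and face do not.
predictions = {
    "text": ("positive", 0.6),
    "audio": ("negative", 0.9),
    "video": ("negative", 0.5),
}
label, conflicting = reconcile(predictions)
print(label, conflicting)
```

When all modalities agree, the vote just reinforces the shared label (the redundant case). When they disagree, the flag gives the application a hook to fall back to a more careful path.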

Architectural Innovations in Multimodal AI

Recent architectural innovations have dramatically improved multimodal AI capabilities:

Fusion Techniques (Early, Late, and Hybrid)

Modern systems employ several approaches to combining information across modalities:

- Early fusion: Combining raw or lightly processed inputs before main processing

  - Advantages: Captures low-level interactions, simpler architecture

  - Limitations: May be dominated by one modality, higher computational demands

- Late fusion: Processing modalities separately and combining only at decision level

  - Advantages: Modality-specific optimization, computational efficiency

  - Limitations: Misses cross-modal interactions, requires separate expertise

- Hybrid fusion: Multiple integration points throughout the processing pipeline

  - Advantages: Balances cross-modal interaction with computational efficiency

  - Limitations: More complex to design and train, requires careful architecture planning
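The difference between early and late fusion is easiest to see side by side. The following is a minimal sketch using tiny hand-set linear models in place of real encoders; the feature vectors, weights, and function names are assumptions chosen for illustration only.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def linear(weights, bias, features):
    return sum(w * f for w, f in zip(weights, features)) + bias

def early_fusion(image_feat, audio_feat, weights, bias):
    """Concatenate the modality features first, then apply one joint model."""
    joint = image_feat + audio_feat  # list concatenation
    return sigmoid(linear(weights, bias, joint))

def late_fusion(image_feat, audio_feat, image_model, audio_model):
    """Score each modality with its own model, then average the decisions."""
    p_image = sigmoid(linear(*image_model, image_feat))
    p_audio = sigmoid(linear(*audio_model, audio_feat))
    return 0.5 * (p_image + p_audio)

# Hypothetical two-dimensional features per modality.
image_feat = [1.0, 0.0]
audio_feat = [0.0, 1.0]

# Early fusion: one weight vector spans the concatenated features.
early_p = early_fusion(image_feat, audio_feat, [1.0, 0.0, 0.0, 1.0], -2.0)

# Late fusion: each modality has its own (weights, bias) pair.
late_p = late_fusion(image_feat, audio_feat, ([3.0, 0.0], 0.0), ([0.0, -1.0], 0.0))
print(early_p, late_p)
```

In the early variant a single model sees both modalities at once, so it could in principle learn interactions between an image feature and an audio feature. In the late variant the two models never see each other's inputs, which is why cross-modal interactions are lost. A hybrid design would combine both, for example by exchanging intermediate features between the two branches before the final decision.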

Cross-Attention Mechanisms

Cross-attention has emerged as a powerful technique for multimodal integration:

- Bidirectional attention flows: Allowing each modality to query and attend to others

- Modality-specific attention heads: Specialized attention mechanisms for different data types

- Multi-head cross-modal attention: Capturing different relationship types simultaneously

- Hierarchical attention structures: Processing at multiple levels of abstraction

These mechanisms enable models to dynamically focus on relevant information across modalities, significantly enhancing their ability to understand complex relationships.
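As a rough illustration of the core operation, the sketch below implements single-head scaled dot-product cross-attention in plain Python, with text tokens as queries and image patches as keys and values. Learned projection matrices and multiple heads are deliberately left out, and the toy embeddings are made up for the example.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention where queries come from one modality
    and keys/values come from another. Returns (outputs, attention weights)."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted mix of the value vectors.
        out = [sum(w * v[c] for w, v in zip(weights, values))
               for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings: two text tokens attend over three image patches.
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_patches = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
outputs, attn = cross_attention(text_tokens, image_patches, image_patches)
for row in attn:
    print([round(w, 3) for w in row])
```

Swapping the roles (image patches as queries, text tokens as keys and values) gives the other direction of a bidirectional attention flow.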

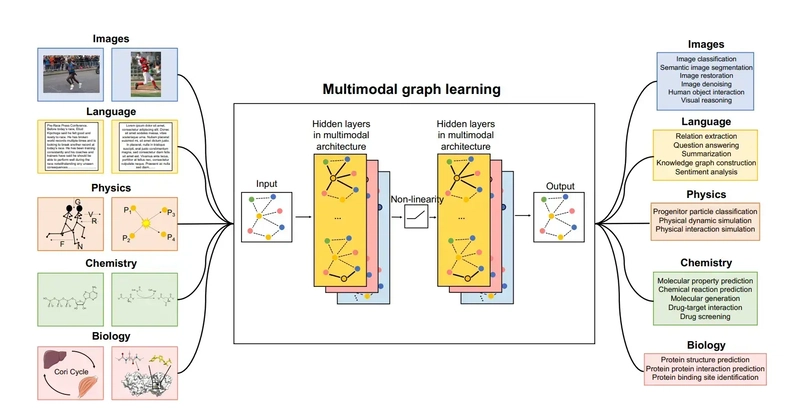

Graph-Based Multimodal Representations

Graph structures have proven particularly effective for representing multimodal data:

- Heterogeneous graphs: Different node and edge types representing various modalities

- Cross-modal edges: Explicit representation of relationships between modalities

- Attention-weighted graphs: Dynamic adjustment of edge importance based on context

- Hierarchical graph structures: Representing information at multiple levels of abstraction

Graph-based approaches naturally accommodate the complex, non-sequential relationships present in multimodal data, enabling more sophisticated reasoning and understanding.
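A heterogeneous graph with cross-modal edges can be sketched with nothing more than dictionaries. The class below is a hypothetical, minimal structure for illustration; the node identifiers, relation names, and weights are invented, and a production system would more likely use a dedicated graph library.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class MultimodalGraph:
    # node id -> modality name (the node "type")
    node_types: dict = field(default_factory=dict)
    # node id -> list of (neighbor id, relation, weight)
    edges: dict = field(default_factory=lambda: defaultdict(list))

    def add_node(self, node_id, modality):
        self.node_types[node_id] = modality

    def add_edge(self, src, dst, relation, weight=1.0):
        # Store the edge in both directions (undirected graph).
        self.edges[src].append((dst, relation, weight))
        self.edges[dst].append((src, relation, weight))

    def cross_modal_neighbors(self, node_id):
        """Neighbors from a different modality, strongest edge first."""
        own = self.node_types[node_id]
        hits = [(n, rel, w) for n, rel, w in self.edges[node_id]
                if self.node_types[n] != own]
        return sorted(hits, key=lambda e: e[2], reverse=True)

graph = MultimodalGraph()
graph.add_node("caption:dog", "text")
graph.add_node("caption:park", "text")
graph.add_node("region:1", "image")
graph.add_node("clip:bark", "audio")
graph.add_edge("caption:dog", "region:1", "describes", 0.9)
graph.add_edge("caption:dog", "clip:bark", "co-occurs", 0.6)
graph.add_edge("caption:dog", "caption:park", "same_sentence", 1.0)

neighbors = graph.cross_modal_neighbors("caption:dog")
print(neighbors)
```

The edge weights here are static. In an attention-weighted graph they would be recomputed from context instead of being fixed at construction time.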

Leading Multimodal Models in 2025

Several flagship models have defined the state of multimodal AI in 2025:

GPT-4o and Beyond

OpenAI's GPT-4o represents a significant advancement in multimodal capabilities:

- Seamless processing of text, images, and audio in a unified framework

- Enhanced reasoning capabilities across modalities

- Real-time audio processing enabling natural conversational interfaces

- Sophisticated visual reasoning and analysis

- The recently released GPT-4o Advanced extends these capabilities with enhanced resolution processing and temporal understanding

Claude 3.5 Sonnet

Anthropic's Claude 3.5 Sonnet has established new benchmarks for multimodal systems:

- Industry-leading visual understanding and reasoning

- Advanced document analysis with spatial awareness

- Nuanced understanding of charts, graphs, and technical diagrams

- Strong performance on multimodal reasoning benchmarks

- Enhanced context window allowing for processing of lengthy multimodal documents

Gemini Pro/Ultra Enhancements

Google's Gemini family has evolved with significant multimodal improvements:

- Native video understanding capabilities

- Real-time multimodal processing for interactive applications

- Enhanced scientific and mathematical reasoning across modalities

- Integration with specialized tools and APIs for expanded capabilities

- Domain-specific optimizations for enterprise applications

Emerging Open-Source Alternatives

The open-source ecosystem has made remarkable progress in multimodal AI:

- LLaVA-NeXT: Combining powerful open-source language capabilities with advanced vision features

- ImageBind-LLM: A novel approach to binding multiple modalities in a unified representation space

- MultiModal-GPT: An extensible framework for building custom multimodal applications

- OmniLLM: Focusing on efficiency and performance for edge deployment of multimodal capabilities

Real-World Applications Transforming Industries

Multimodal AI is driving transformation across numerous industries:

Healthcare Diagnostics and Monitoring

The medical field has seen particularly impactful applications:

- Multimodal diagnostic systems integrating medical images, patient records, genomic data, and clinical notes

- Remote patient monitoring combining visual observations, vital sign data, and patient-reported symptoms

- Surgical assistance tools processing visual data and instrument telemetry in real-time

- Mental health assessment analyzing speech patterns, facial expressions, and linguistic content

These applications are enhancing diagnostic accuracy, improving patient outcomes, and expanding healthcare access.

Autonomous Vehicles and Robotics

Multimodal perception is essential for physical systems navigating the real world:

- Sensor fusion combining camera, lidar, radar, and ultrasonic data for comprehensive environmental awareness

- Multimodal safety systems integrating visual scene understanding with audio detection of emergency vehicles

- Human-robot interaction processing verbal commands, gestures, and environmental context

- Anomaly detection identifying unusual patterns across multiple sensor streams

These capabilities enable more reliable, safe, and intuitive autonomous systems.

Content Creation and Analysis

Creative industries have been revolutionized by multimodal AI:

- Automated content production generating cohesive text, images, and design elements

- Multimodal search and discovery finding content based on complex queries spanning different media types

- Content moderation identifying problematic material across text, images, audio, and video

- Audience engagement analysis correlating content characteristics with user responses across platforms

These tools are enhancing creative workflows and enabling new forms of media production and consumption.

Enhanced Customer Experiences

Businesses are leveraging multimodal AI to transform customer interactions:

- Omnichannel customer service maintaining context across text, voice, and visual communication

- Interactive shopping experiences combining visual product recognition with conversational interfaces

- Personalized recommendations based on multimodal preference signals and behavior patterns

- Accessibility enhancements translating between modalities for users with different needs and preferences

These applications are creating more natural, intuitive, and effective customer experiences across industries.

Development and Implementation Considerations

Implementing multimodal AI systems presents unique challenges:

Data Preparation and Preprocessing Challenges

Effective multimodal systems require careful data management:

- Alignment and synchronization across time-based modalities

- Standardization of diverse data formats and scales

- Missing data handling strategies for incomplete multimodal datasets

- Annotation complexity for establishing ground truth across modalities

- Dataset bias mitigation across different data types

These challenges require specialized pipelines and tools adapted to multimodal data characteristics.
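Two of the items above, standardization and missing data handling, can be sketched in a few lines. The helpers `zscore` and `fill_missing` and the sample values are illustrative assumptions; zero-filling with a presence mask is only one of several possible strategies.

```python
import statistics

def zscore(values):
    """Standardize one feature column to zero mean and unit variance."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std if std > 0 else 0.0 for v in values]

def fill_missing(sample, dims):
    """Build one flat feature vector from a {modality: vector or None} sample.
    Absent modalities are zero-filled, and a 0/1 mask records which ones
    were actually present so a model can ignore the padding."""
    features, mask = [], []
    for name, dim in dims.items():
        vec = sample.get(name)
        if vec is None:
            features.extend([0.0] * dim)
            mask.append(0)
        else:
            features.extend(vec)
            mask.append(1)
    return features, mask

# Hypothetical sensor readings on an arbitrary scale.
scaled = zscore([1.0, 2.0, 3.0])

# Hypothetical sample where the audio stream was not recorded.
dims = {"text": 2, "audio": 3}
features, mask = fill_missing({"text": [0.1, 0.2], "audio": None}, dims)
print(scaled, features, mask)
```

Keeping the mask alongside the features matters: without it, a zero-filled audio vector is indistinguishable from a genuinely silent recording.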

Computational Requirements and Optimization

Multimodal systems typically demand significant computational resources:

- Hardware requirements often exceed those of single-modality systems

- Memory management becomes critical with multiple data streams

- Inference optimization techniques, such as modality-specific quantization, reduce cost at serving time

- Selective processing activates only the modalities relevant to a given input

- Tiered architectures balance performance and efficiency

Effective optimization strategies are essential for practical deployment of multimodal systems.
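One reading of per-modality quantization is that each modality gets its own scale factor, since image and audio features can live in very different numeric ranges. The sketch below shows symmetric int8 quantization under that assumption; the function names and feature values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric int8 quantization: map floats into [-127, 127] using a
    single scale derived from the largest absolute value."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    return [q * scale for q in quantized]

# Hypothetical features with very different ranges per modality.
features = {
    "image": [0.0, 254.0, -128.0],
    "audio": [0.013, -0.02, 0.004],
}

# Quantize each modality separately so each keeps its own scale.
quantized = {name: quantize_int8(vals) for name, vals in features.items()}
for name, (q, scale) in quantized.items():
    print(name, q, scale)
```

With a single shared scale, the small audio values would all round to zero. Separate scales keep the reconstruction error of each modality bounded by half of its own scale.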

Evaluation Metrics for Multimodal Systems

Assessing multimodal AI performance requires specialized evaluation approaches:

- Cross-modal coherence measuring consistency between modalities

- Task-specific benchmarks for multimodal capabilities

- Human evaluation protocols for assessing natural interaction quality

- Robustness testing across varying modal quality and availability

- Fairness assessment across demographically diverse data

Comprehensive evaluation frameworks must address both individual modal performance and integrated capabilities.
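A common proxy for cross-modal coherence is retrieval accuracy in a shared embedding space: given a text embedding, is its paired image the most similar one? The sketch below computes recall@1 with cosine similarity. The embeddings are made-up toy vectors, and pairing by list index is an assumption of this example.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def recall_at_1(text_embs, image_embs):
    """Fraction of texts whose most similar image is the paired one.
    Text i is assumed to be paired with image i."""
    hits = 0
    for i, text in enumerate(text_embs):
        sims = [cosine(text, image) for image in image_embs]
        best = max(range(len(sims)), key=sims.__getitem__)
        if best == i:
            hits += 1
    return hits / len(text_embs)

# Hypothetical paired embeddings (text i belongs with image i).
text_embs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
image_embs = [[0.9, 0.1], [0.2, 0.8], [1.0, 0.0]]
score = recall_at_1(text_embs, image_embs)
print(round(score, 3))
```

Running the same metric after degrading or removing one modality's inputs gives a simple robustness check to sit alongside the task-specific benchmarks.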

Future Directions

Several emerging developments are shaping the future of multimodal AI:

Research Breakthroughs on the Horizon

Key research directions include:

- Multimodal few-shot learning reducing data requirements for new tasks

- Cross-modal knowledge transfer leveraging information from data-rich modalities to enhance understanding in others

- Compositional multimodal reasoning building complex understanding from primitive elements across modalities

- Interactive multimodal learning incorporating real-time human feedback across different communication channels

- Self-supervised multimodal pretraining reducing dependence on labeled data

These advancements promise to address current limitations and expand multimodal capabilities.

Ethical Considerations in Multimodal AI

Important ethical challenges include:

- Deepfake proliferation as generation capabilities improve

- Privacy implications of processing multiple data streams

- Accessibility and inclusion ensuring benefits across diverse user populations

- Transparency and explainability for complex multimodal decision processes

- Bias amplification across interconnected modalities

Addressing these concerns requires both technical solutions and governance frameworks.

The Path Toward Truly Integrated Understanding

The ultimate goal of multimodal AI research is systems that:

- Process information holistically rather than as separate streams

- Build unified conceptual representations spanning all modalities

- Reason fluidly across different types of information

- Generate coherent outputs integrating multiple modalities

- Interact naturally with humans through their preferred communication channels

Progress toward this goal continues to accelerate as architectural innovations and computational capabilities advance.

Conclusion

Multimodal AI represents one of the most significant developments in artificial intelligence, moving systems closer to human-like perception and understanding of the world. By breaking down the silos between different data types, these systems can develop richer, more contextual understanding and generate more natural, effective outputs.

For developers, embracing multimodal AI opens new possibilities for creating applications that interact more naturally with users and process information more comprehensively. The challenges are substantial, but the potential rewards—in terms of enhanced capabilities, improved user experiences, and new application domains—are transformative.

As we look ahead, multimodal AI will continue to evolve, with systems becoming increasingly adept at integrating diverse information streams and reasoning across modalities. This evolution will enable new classes of applications that blend seamlessly into how humans naturally communicate and understand the world.
