Alex: I am planning a date with my girlfriend. Could you give me some advice?

AstroGPT: To sprinkle some starry magic on your plans, I need to know your girlfriend's zodiac sign or birth date. This will help me craft the perfect romantic advice just for you two! ✨

Alex: She is an Aries.

AstroGPT: For your date with your adventurous Aries girlfriend, I suggest planning something that combines excitement and spontaneity! How about a surprise picnic in a scenic spot or an exhilarating outdoor activity? This will definitely spark that fiery Aries enthusiasm! Enjoy the cosmic vibes of your date! 🌟✨

Telegram bots are among the lowest-friction apps you can build today. Type /start and the bot is ready - no extra install, no sign-up forms. This zero-friction setup, together with Telegram’s rich features, makes bots handy for both users and developers.

Bots can handle many tasks: quick support chats for shops and clinics, daily weather or news updates, games, finance trackers. They suit almost anything you can pack into a chat. Some bots are private corporate tools, while others are public and serve thousands of users every day.

How a bot really works

Put simply, a Telegram bot is a web service that listens to the Bot API. When a user sends a message:

- Telegram posts the update to the bot’s server.

- The server processes the input and replies to Telegram.

- Telegram delivers the response to the user.
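
The three steps above can be sketched as one function. This is only an illustration: `handle_update` is a hypothetical name and the reply logic is a placeholder. The field names (`message`, `chat`, `id`, `text`) and the `sendMessage` method come from the Telegram Bot API; with webhooks, a bot may answer by returning the method call as the JSON body of its HTTP response.

```python
from typing import Optional


def handle_update(update: dict) -> Optional[dict]:
    """Turn one incoming Telegram update into a sendMessage call (or None)."""
    message = update.get("message")
    if not message or "text" not in message:
        # Not a text message (for example a photo): nothing to reply to.
        return None

    text = message["text"].strip()
    if text == "/start":
        reply = "Hi! I'm ready."  # placeholder greeting
    else:
        reply = f"You said: {text}"  # placeholder business logic

    # Returned as the webhook response body, this asks Telegram to deliver the reply.
    return {
        "method": "sendMessage",
        "chat_id": message["chat"]["id"],
        "text": reply,
    }
```

A web framework of your choice would decode the POST body, pass it to `handle_update`, and send the returned dictionary back as JSON.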

In this post, I'll walk you through the main parts of a typical Telegram bot backend, using my latest project as a reference, and show how large language models (LLMs) can help build better chatbots.

Bot business logic

Classic Telegram bots rely on strict string matching:

- A predefined list of commands maps to handler functions.

- Regular expressions extract structured data such as dates, names, or email addresses from user input.

Bots can also add on-screen buttons to simplify input, but users still have to send the exact text: a single wrong character can break the logic.
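
To make the brittleness concrete, here is a minimal sketch of the classic approach. The command names, handlers, and the DD.MM.YYYY date format are all hypothetical and chosen only for illustration.

```python
import re
from typing import Callable, Dict

# Strict pattern: only DD.MM.YYYY is accepted.
DATE_RE = re.compile(r"(\d{2})\.(\d{2})\.(\d{4})")


def start(args: str) -> str:
    return "Welcome!"


def set_birthday(args: str) -> str:
    match = DATE_RE.fullmatch(args.strip())
    if match is None:
        return "Please send the date as DD.MM.YYYY"
    day, month, year = (int(part) for part in match.groups())
    return f"Saved: {year:04d}-{month:02d}-{day:02d}"


# Predefined commands mapped to handler functions.
COMMANDS: Dict[str, Callable[[str], str]] = {
    "/start": start,
    "/birthday": set_birthday,
}


def dispatch(text: str) -> str:
    """Split the message into command and arguments, then call the handler."""
    command, _, args = text.partition(" ")
    handler = COMMANDS.get(command)
    if handler is None:
        return "Unknown command"
    return handler(args)
```

Here `dispatch("/birthday 05.04.1990")` succeeds, while `dispatch("/birthday April 5, 1990")` and `dispatch("/Birthday 05.04.1990")` are both rejected even though a human would understand them.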

Large bots are usually split into small, separate “dialog flows” connected to a central router. Each flow is a mini state machine: it knows the user’s current step, what data has been collected, and which messages or commands are valid next. The router inspects every update (text, button press, or command), chooses the right dialog-flow and state, and forwards the update to the correct handler. State data typically lives in a database such as Redis, MongoDB, or SQL.
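
A rough sketch of this router-plus-flows layout is shown below. Everything in it is an assumption made for illustration: the flow names, the step names, and the in-memory `STATE` dictionary that stands in for Redis, MongoDB, or SQL.

```python
from typing import Callable, Dict

# In-memory stand-in for the state store: user_id -> current flow, step, data.
STATE: Dict[int, dict] = {}


def registration_flow(state: dict, text: str) -> str:
    """Mini state machine: ask_birthday -> ask_consent -> hand over to main flow."""
    if state["step"] == "ask_birthday":
        state["data"]["birthday"] = text
        state["step"] = "ask_consent"
        return "Do you agree to receive daily messages? (yes/no)"
    if state["step"] == "ask_consent":
        if text.lower() not in ("yes", "no"):
            return "Please answer yes or no."
        state["data"]["consent"] = text.lower() == "yes"
        state["flow"], state["step"] = "main", "idle"
        return "Registration complete."
    return "Unexpected step."


def main_flow(state: dict, text: str) -> str:
    return "Main chat: " + text


FLOWS: Dict[str, Callable[[dict, str], str]] = {
    "registration": registration_flow,
    "main": main_flow,
}


def route(user_id: int, text: str) -> str:
    """Central router: load the user's state and forward the update to its flow."""
    state = STATE.setdefault(
        user_id, {"flow": "registration", "step": "ask_birthday", "data": {}}
    )
    return FLOWS[state["flow"]](state, text)
```

Each flow only needs to know its own steps; the router decides which flow sees the update.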

Improve input with LLMs

In the past, you had to train a custom NLP model for intent recognition. Now large language models (LLMs) can infer intent directly from free-form text with a simple prompt. An LLM can parse a message and return structured data in JSON, making it easy to interpret free-form user input.

My sandbox project: AstroGPT

While experimenting with LLM-powered apps, I needed a safe way to practise building production-ready Python applications. I decided to build a (pseudo)astrology Telegram bot. It’s simple (and honestly pretty useless) but perfect for testing architecture ideas.

The bot has only two dialogs:

- Registration – collects birth date and consent.

- Main chat – lets the user update their data or ask, “What do the stars say today?”

After a user finishes registration and subscribes, the bot uses an LLM to generate a short horoscope and sends it automatically.

Initial implementation

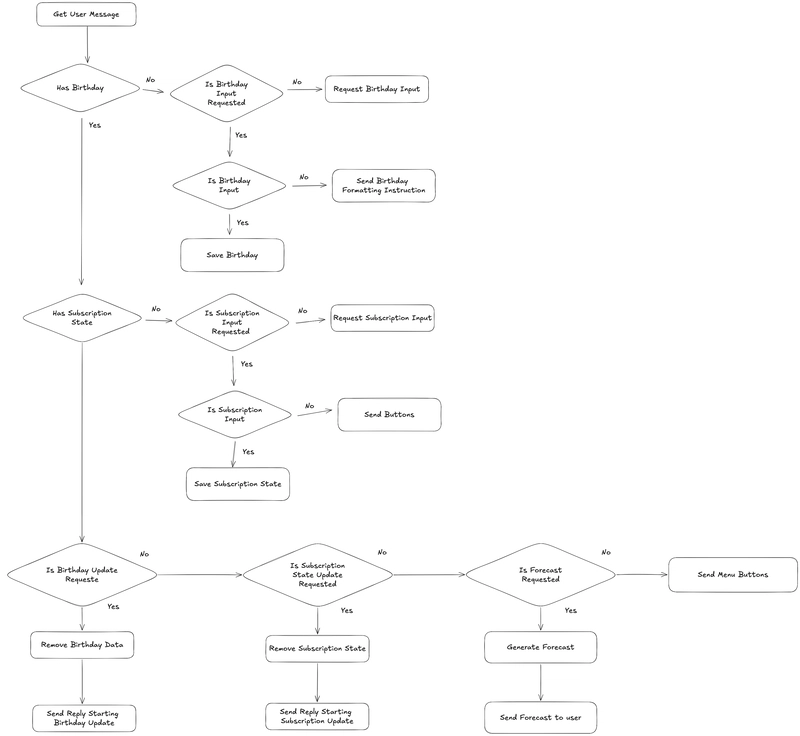

Because I wanted human-like free-form interactions, the usual telegram libraries mapping input-to-handlers didn’t work. I implemented the flow myself with plain if-else logic. The diagram below shows the initial dialog flowchart.

The Is Birthday Input step uses an LLM to extract the user’s birth date from a message. The birthday parser has two parts: a parsing prompt and a schema that describes the output JSON.

# Imports assumed; the original snippet omits them.
from typing import Optional

from pydantic import BaseModel, Field


class BirthdayValidation(BaseModel):
    # Schema of the JSON object the LLM must return.
    birthday_text: str = Field(description="The birthday of the user in text format")
    extraction_error: Optional[str] = Field(
        description="Message to display if the birthday could not be extracted"
    )


parse_date_of_birth_prompt = """
<instruction>
Please, analyze the following text inside of text_input tag and extract birthday information in text format.
Format output according to instructions in output_formatting_guidelines tag.
</instruction>
<text_input>
{user_input}
</text_input>
<output_formatting_guidelines>
Format your reply according to {format_instructions}
If you do not find readable birthday information, please provide a message in extraction_error which should ask user to input birthday in readable format.
</output_formatting_guidelines>
"""

The schema is defined with LangChain’s Pydantic extension. Each field gets a name, type, and short description. LangChain turns this schema into formatting instructions, injected into the prompt as {format_instructions}. The raw {user_input} is added as well. I wrap both parts in simple XML-style tags so my instructions stay clearly separated from the user’s text.
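
The mechanics of filling the template and reading the structured reply can be sketched with the standard library alone. This is not how LangChain does it internally: `FORMAT_INSTRUCTIONS` is a hand-written stand-in for the generated instructions, the prompt is shortened, a dataclass replaces the Pydantic model, and `fake_llm` is a stub so the example runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable, Optional

PROMPT = """<instruction>
Extract the birthday from the text inside the text_input tag.
</instruction>
<text_input>
{user_input}
</text_input>
<output_formatting_guidelines>
{format_instructions}
</output_formatting_guidelines>"""

# Hand-written stand-in for the instructions derived from the schema.
FORMAT_INSTRUCTIONS = (
    'Reply with a JSON object with the keys "birthday_text" (string) '
    'and "extraction_error" (string or null).'
)


@dataclass
class BirthdayValidation:
    birthday_text: str
    extraction_error: Optional[str] = None


def parse_birthday(user_input: str, llm: Callable[[str], str]) -> BirthdayValidation:
    """Fill the template, call the model, and load its JSON reply."""
    prompt = PROMPT.format(
        user_input=user_input, format_instructions=FORMAT_INSTRUCTIONS
    )
    raw_reply = llm(prompt)
    return BirthdayValidation(**json.loads(raw_reply))


def fake_llm(prompt: str) -> str:
    # Stub: a real model call would go here.
    return '{"birthday_text": "5 April 1990", "extraction_error": null}'


result = parse_birthday("I was born on 5 April 1990", fake_llm)
print(result.birthday_text, result.extraction_error)
```

Because the user's text is passed as a value to `str.format`, braces inside it are not interpreted as placeholders.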

The next diagram shows the logic of the feature-complete application.

More advanced prompts

While testing parsing prompts, I realised I could merge extraction of several values into one request and cut the total number of LLM calls. In normal conversation, users often provide all the details in a single message anyway. This change both simplifies the code and makes the chatbot feel more natural.

After some experiments I settled on the following JSON schema and prompt:

class CollectDataParser(BaseModel):
    # All values are extracted from a single user message in one LLM call.
    user_birthday_text: Optional[str] = Field(
        description="The birthday of the user in text format, birthday should be in free-form format, but it should be a reasonable date of birth."
    )
    language: Optional[str] = Field(
        description="Desired language option of the user. Do not consider language already available in user information. Convert it to single English word like in list of supported languages"
    )
    is_data_about_user: bool = Field(
        description="Based on input and context of previous conversation, is the data about the user or about someone else?"
    )
    parsing_feedback: str = Field(
        description="Explanation of data extraction result with clarification of errors if needed"
    )


collect_data_prompt = """
<instruction>
You are a part of logic in personal astrologist bot.
You should collect information from user to provide astrologic forecast.
You have to be completely sure that it is information about the user, since user can also mention other people in the conversation.
You do not support any other actions and do not engage conversation beyond collecting necessary information.
Provide user with kind and clear feedback on any issues with the data provided.
Ask user for input if some information is missing or unclear.
If user asks something beyond the data collection, just ignore it.
previous_conversation tag contains log of previous conversation with user.
user_information tag contains user information.
Do not extract information if it exactly matches the information already available in user_information.
last_user_input tag contains last user input.
output_formatting_guidelines tag contains instructions on how to format your reply.
</instruction>
<user_information>
Name: {user_name}
Birthday: {user_birthday}
Subscription: {user_subscription}
Language: {user_language}
</user_information>
<previous_conversation>
{previous_conversation}
</previous_conversation>
<last_user_input>
{user_input}
</last_user_input>
<output_formatting_guidelines>
Please, extract user information from the user_input.
Provide parsing feedback if any issues with the data extraction.
Do not extract information if it exactly matches the information already available in user_information.
Format your reply according to {format_instructions}
</output_formatting_guidelines>
"""

Leveraging LLM reasoning

As the number of dialogs grew, the application logic expanded quickly and adding new features became hard. The user experience still felt robotic—more like an upgraded CLI than a chat.

To cut down the prompt count, I let the LLM handle the reasoning instead of using deep if-else blocks. The change worked surprisingly well: it simplified the design and made conversations flow more naturally. After a few iterations, I settled on the approach below.

Step 1

The “menu” prompt receives the latest user message, recent chat history, user data, a list of available actions, and a summary of the actions already taken for this input. The LLM only has to pick the next action.

# Import assumed; the original snippet omits it.
from enum import Enum


class MenuActions(str, Enum):
    # Actions the "menu" prompt can choose from.
    update_user_data = "update_user_data"
    subscribe = "subscribe"
    unsubscribe = "unsubscribe"
    request_input_clarification = "request_input_clarification"
    reply_to_user_with_result = "reply_to_user_with_result"
    ask_for_missing_user_data = "ask_for_missing_user_data"
    get_situational_advice = "get_situational_advice"
    get_joke_about_astrology = "get_joke_about_astrology"
    get_daily_forecast = "get_daily_forecast"


class SelectedAction(BaseModel):
    selected_action: MenuActions = Field(
        description="Decision for future action to take"
    )
    context_for_action_execution: str = Field(
        description="Action will be executed by specialized handler, provide just brief context necessary to execute it properly."
    )
    message_to_user: Optional[str] = Field(
        description="Only provide message to the user if it is terminal action which implies communication with the user, otherwise leave it empty."
    )

    def __str__(self) -> str:
        # Compact one-line form used in the log of processing steps.
        return f"Action: {self.selected_action}, Description: {self.context_for_action_execution} {self.message_to_user if self.message_to_user else ''}"


menu_prompt = """
<instruction>
You are a part of logic in personal astrologist bot.
You should handle a user input and make a decision on the next action to take.
You should not act on the decision, but provide a decision for the next action to take.
You can provide details to help future actions with more context.
You support following actions:
- Update user data, even if it is already provided e.g. name, birthday, language (update_user_data action), keep in mind you should be sure that the data belongs to the user, it should not be data of other people mentioned in the conversation.
- Subscribe to daily forecast, daily forecast is only available by subscription
- Unsubscribe from daily forecast
- Ask user for input if any action failed
- Ask user for input if any request is unclear
- Send message to user if user goal is achieved
- Supply user with an information regarding service you can provide
- You can also provide information about users state if it is requested
- Provide user with a short astrological advice if user asks about things like mood, outfit, love, work, food choices etc. (get_situational_advice action)
- Give user a short clarification of the latest daily forecast regarding certain situation or particular details (get_situational_advice action)
- If user specifically asks for forecast for today (call get_daily_forecast to leverage specific logic)
- Joke about astrology (finish conversation if joke is already generated)
You do not support any other actions.
Provide user with kind and clear feedback on service you can provide.
Clarify if user wants general daily forecast or specific advice on particular aspect of life.
processing_steps tag contains log of actions already taken during handling of current user input.
Information in the steps is not communicated to user, it is internal log of actions taken during handling of user input.
Reply user as soon as you have enough information to fulfill user request or if you need more information from user.
Ask user for input if any step in processing_steps failed.
Finish the conversation immediately if you have enough information to reply to user.
Do not select the same action if it was successfully executed.
previous_conversation tag contains log of previous conversation with user.
user_information tag contains user information.
One of your goals is to collect missing information in user_information.
Do not ask user for information already available in user_information.
last_user_input tag contains last user input.
output_formatting_guidelines tag contains instructions on how to format your reply.
</instruction>
<processing_steps>
{processing_steps}
</processing_steps>
<previous_conversation>
{previous_conversation}
</previous_conversation>
<user_information>
Name: {user_name}
Birthday: {user_birthday}
Subscription: {user_subscription}
Language: {user_language}
</user_information>
<last_user_input>
{user_input}
</last_user_input>
<output_formatting_guidelines>
Please, provide a decision for future action to take and necessary context for future action.
Format your reply according to {format_instructions}
</output_formatting_guidelines>
"""

Step 2

The chosen action is executed: it might call another LLM, hit a third-party API, or write to the database. When it finishes, it appends a description of the execution result to the log of executed actions.

# Import assumed; the original snippet omits it.
from langchain_core.output_parsers import PydanticOutputParser


class AdviceResult(str, Enum):
    advice = "advice"
    clarification = "clarification"


class AdviceParser(BaseModel):
    advice_result: AdviceResult = Field(
        description="If you have enough information to provide advice, select advice. If you need more information from the user, select clarification"
    )
    result_description: Optional[str] = Field(
        description="Description of astrological advice or clarification for the user. Do not provide advice if anything is unclear. Do not ask too many questions, you are astrological bot, stars should guide your decisions, so improvise more!"
    )
    situation: Optional[str] = Field(
        description="Description of the situation for which the advice is given"
    )


# Turns the schema into format instructions and parses the model's reply.
advice_parser = PydanticOutputParser(pydantic_object=AdviceParser)

advice_prompt = """
<instruction>
You are a personal astrologist bot.
You should provide user with an astrological advice based on the situation provided or clarify latest daily forecast.
Mention zodiacal signs of other people involved and provide user with a clear recommendation in relation to the situation provided and signs mentioned.
Be more descriptive in your advice, feel free to suggest exact activities or actions to take.
Tell user advice considering the situation provided and zodiacal signs of user and potentially other people involved in the situation.
Do not repeat daily forecast exactly, but provide clarification if needed.
Ask user for more details on situation if it helps to provide more accurate advice.
Ask zodiacs or birth dates of people involved in the situation.
Provide user with kind and clear recommendation.
Ask user for input if some information is missing or unclear.
Keep it short, concise and consistent with context of previous advices, forecast and messages.
previous_conversation tag contains log of previous conversation with user.
user_information tag contains user information.
previous_advice tag contains log of previous advices provided to user.
previous_forecast tag contains log of previous daily forecasts provided to user.
last_user_input tag contains last user input.
output_formatting_guidelines tag contains instructions on how to format your reply.
</instruction>
<user_information>
Name: {user_name}
Birthday: {user_birthday}
Subscription: {user_subscription}
Language: {user_language}
</user_information>
<previous_conversation>
{previous_conversation}
</previous_conversation>
<last_user_input>
{user_input}
</last_user_input>
<previous_forecast>
{previous_forecast}
</previous_forecast>
<previous_advice>
{previous_advice}
</previous_advice>
<output_formatting_guidelines>
It should be a short and concise advice. Max 2 sentences.
Format your reply according to {format_instructions}
</output_formatting_guidelines>
"""

Step 3

Steps 1 and 2 repeat until a threshold is reached or the “menu” prompt selects reply_to_user_with_result. A final prompt then processes the full action log, user data, and the original input to generate the reply, which is sent back to the user.

reply_user_prompt = """
<instruction>
You are a personal astrologist bot, your name is Zaira and you use cosmic energy to help people with their problems.
You should reply user on his latest message summarizing actions which were taken.
Please, be playful, witty and charming.
</instruction>
<user_information>
User name: {user_name}
</user_information>
<previous_conversation>
{previous_conversation}
</previous_conversation>
<last_user_input>
{user_input}
</last_user_input>
<actions_taken>
{actions_taken}
</actions_taken>
<output_formatting_guidelines>
Reply with a message that summarizes actions taken and results of those actions.
Omit intermediate steps and provide only final results.
Do not explain the actions taken, just provide the feedback in a clear and concise conversation manner.
Do not greet user, since the message is a part of ongoing conversation.
You should not provide user with any additional information or forecast beyond the actions taken.
Please, be brief, friendly, clear, supportive and use chat style language, avoid mentioning details of decision making process.
Never mention anything about Natal Charts, they are not supported by the bot.
Avoid usage of characters and brand names which could be subject to copyright.
Consider messages from previous_conversation tag in your reply, but do not repeat information from them again in your reply.
</output_formatting_guidelines>
"""

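Taken together, Steps 1 to 3 form a small loop. The sketch below shows only the control flow under stated assumptions: `MAX_STEPS`, the function names, and the handler registry are hypothetical, and plain stub functions stand in for the three LLM prompts.

```python
from typing import Callable, Dict, List, Tuple

MAX_STEPS = 5  # hypothetical threshold that stops runaway loops


def choose_action(user_input: str, steps: List[str]) -> Tuple[str, str]:
    """Stub for the "menu" prompt: returns (action name, context for the handler)."""
    if not steps:
        return "get_situational_advice", user_input
    return "reply_to_user_with_result", ""


def get_situational_advice(context: str) -> str:
    """Stub handler; a real one might call another LLM prompt."""
    return f"advice generated for: {context}"


# Adding a feature means registering one more handler here.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "get_situational_advice": get_situational_advice,
}


def compose_reply(user_input: str, steps: List[str]) -> str:
    """Stub for the final reply prompt."""
    if not steps:
        return "Could you tell me more?"
    return "Done: " + "; ".join(steps)


def handle_message(user_input: str, choose=choose_action) -> str:
    steps: List[str] = []  # internal log, never shown to the user directly
    for _ in range(MAX_STEPS):
        action, context = choose(user_input, steps)  # Step 1: pick an action
        if action == "reply_to_user_with_result":
            break
        handler = HANDLERS.get(action)
        if handler is None:
            steps.append(f"{action}: failed (no handler)")
            continue
        steps.append(f"{action}: {handler(context)}")  # Step 2: run and log
    return compose_reply(user_input, steps)  # Step 3: final reply


print(handle_message("What should I wear today?"))
```

The loop ends either when the chooser asks to reply or when `MAX_STEPS` is exhausted, so a confused model cannot keep the bot spinning.
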
Step 0

Before all business-logic steps, I added a safety step that spots abnormal behaviour. A prompt reviews recent messages and returns three fields:

- A confidence score

- A warning type

- A warning explanation

The score lets the main logic tune the detector’s sensitivity. Each warning type can trigger different follow-up actions, and repeated warnings can make the bot block certain features to prevent abuse. The explanation text is used when composing a reply to the user.

# WarningType is defined elsewhere in the project (not shown here).
class UnintendedBehaviorDetector(BaseModel):
    confidence: float = Field(
        description="Level of warning to be issued to the user. 0.0 means no warning, 1.0 means clear evil intent. Increase confidence for repeating behavior."
    )
    warning: Optional[WarningType] = Field(description="Type of warning to be issued")
    warning_explanation: Optional[str] = Field(
        description="Explanation of the warning to be issued"
    )

    def __str__(self) -> str:
        # One-line summary for logs and for composing the reply.
        return f"Warning: {self.warning}, Confidence: {self.confidence}, Explanation: {self.warning_explanation}"
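
How the main logic might consume these fields can be sketched as follows. The threshold, the warning limit, the `Detection` dataclass (a stand-in for the Pydantic model above), and the allow/warn/block outcomes are assumptions for illustration, not the project's actual policy.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

CONFIDENCE_THRESHOLD = 0.7  # hypothetical sensitivity setting
MAX_WARNINGS = 3  # hypothetical limit before features are blocked


@dataclass
class Detection:
    confidence: float
    warning: Optional[str] = None
    warning_explanation: Optional[str] = None


# Number of warnings issued so far, keyed by user id.
warning_counts: Counter = Counter()


def gate(user_id: int, detection: Detection) -> str:
    """Return "allow", "warn" or "block" for the current message."""
    if detection.warning is None or detection.confidence < CONFIDENCE_THRESHOLD:
        return "allow"
    warning_counts[user_id] += 1
    if warning_counts[user_id] >= MAX_WARNINGS:
        return "block"
    return "warn"
```

Raising or lowering `CONFIDENCE_THRESHOLD` tunes how sensitive the bot is without touching the detector prompt.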

Recap

Earlier, when we covered classic Telegram bots, we looked at the MVP flowchart. The diagram below shows the version based on LLM-reasoning, which adds features the MVP lacked. The new bot is more fluent: it answers general questions, cracks jokes, and even offers pseudo-astrological advice.

Despite these extras, the full flowchart is only about the size of the single “birthday input” branch in the old if-else design. The LLM-reasoning works surprisingly well—the bot handles complex queries and keeps the dialog human-like. Moreover, adding a feature means just writing one small action and tweaking the main menu prompt.

🚀 Code: GitHub repo
