Introduction

Machine learning models are rapidly moving from experimentation to real-world applications. The journey from a trained model to a functioning application involves several steps, the most significant being deployment. AWS SageMaker simplifies this process by offering a managed platform for building, training, and deploying models. It supports real-time predictions, handles dynamic workloads, and integrates with other AWS services.

This article guides you through deploying a machine learning model, specifically a .pkl (Pickle) file, on AWS SageMaker and connecting it to a frontend application for real-time predictions.

By the end, you'll understand how to deploy a trained model on SageMaker and integrate it with a frontend application.

Diagrammatic Representation

Prerequisites

AWS Management Console

A trained model

Before we dive into the deployment process, make sure your model is saved as a .pkl file and then compressed into a '.tar.gz' archive. If you don’t have your model in this format yet, use the following code snippet in your Jupyter notebook to save and compress it:

import joblib
import tarfile

model = model_name  # Replace model_name with your trained model object

joblib.dump(model, 'model.pkl')  # Save the model as a .pkl file

# Compress the .pkl file into model.tar.gz
with tarfile.open('model.tar.gz', 'w:gz') as tar:
    tar.add('model.pkl')

# Download the archive locally (files.download is available only in Google Colab)
from google.colab import files
files.download('model.tar.gz')
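
SageMaker unpacks 'model.tar.gz' into the model directory, and the inference script later in this article loads 'model.pkl' from the top of that directory, so it can help to confirm the archive layout before uploading. The following is a minimal sketch: the helper name `list_archive_members` is hypothetical, and a small dictionary stands in for a trained model so the snippet runs on its own.

```python
import os
import pickle
import tarfile
import tempfile

def list_archive_members(archive_path):
    """Return the member names stored in a .tar.gz archive."""
    with tarfile.open(archive_path, "r:gz") as tar:
        return tar.getnames()

# Build a throwaway archive with a stand-in object instead of a real model
# (assumption: only the archive layout matters for this check).
workdir = tempfile.mkdtemp()
pkl_path = os.path.join(workdir, "model.pkl")
archive_path = os.path.join(workdir, "model.tar.gz")

with open(pkl_path, "wb") as f:
    pickle.dump({"stand_in": "model"}, f)

with tarfile.open(archive_path, "w:gz") as tar:
    # arcname keeps the file at the archive root, wherever it lives on disk
    tar.add(pkl_path, arcname="model.pkl")

members = list_archive_members(archive_path)
print(members)
```

If the list shows a nested path instead of a bare 'model.pkl', the file will not be where the loading code looks for it.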

Setup Steps

1. Sign in to the AWS Management Console and navigate to S3

2. Create an S3 bucket

a) Click 'Create bucket'

b) Enter a globally unique bucket name (e.g. 'mymlbucket') and create the bucket

c) Go to your bucket and upload your 'model.tar.gz' file
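
If you would rather upload from code than from the console, the same step can be sketched with boto3. This is only an illustration: the helper name `upload_model` is hypothetical, and the client is passed in as an argument so the function can be exercised without AWS credentials.

```python
def upload_model(s3_client, local_path, bucket, key="model.tar.gz"):
    """Upload the archive and return the S3 URI that SageMaker will read from."""
    # upload_file(Filename, Bucket, Key) is the boto3 S3 client call for local files
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"

# Usage with a real client (requires AWS credentials and an existing bucket):
#   import boto3
#   model_uri = upload_model(boto3.client("s3"), "model.tar.gz", "mymlbucket")
```

The returned URI has the same form as the model location used later when deploying.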

3. Go to SageMaker

Open the SageMaker console and set up a SageMaker domain.

Follow the steps below to create one:

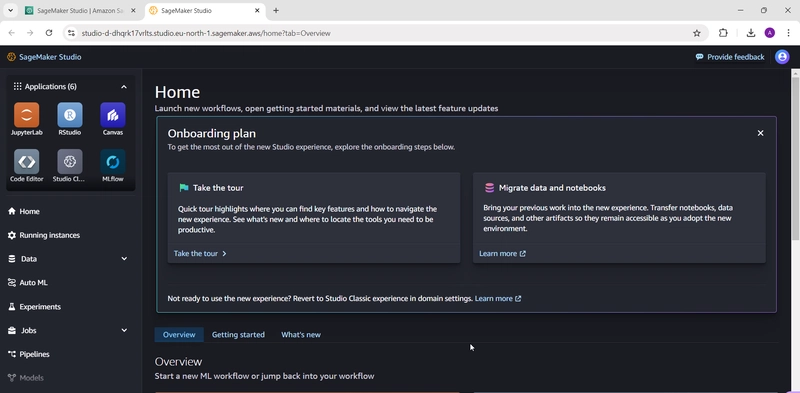

4. Launch SageMaker Studio

We'll use SageMaker Studio to create our own JupyterLab workspace.

Click on Studio and then click Open Studio.

This will take you to the SageMaker Studio console.

Navigate to JupyterLab and create your own workspace.

Now you're all set to begin the deployment process in SageMaker Studio!

Deploying the model

1. Install the SageMaker Python SDK

We start by installing the SageMaker Python SDK in our notebook. In the next step we configure it with an IAM role that can access S3.

!pip install sagemaker  # Installs the SageMaker Python SDK

2. Import SageMaker and Configure the Session

Next, import the SageMaker library and set up the session with an IAM role:

import sagemaker
from sagemaker.sklearn.model import SKLearnModel

sagemaker_session = sagemaker.Session()

# Replace account_id and the role name with the values from your own account
role = 'arn:aws:iam::account_id:role/service-role/AmazonSageMaker-ExecutionRole'
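
A mistyped role ARN (a stray space, or a placeholder left in place) only surfaces as an error at deployment time, so a quick format check can save a round trip. The sketch below assumes the common `arn:aws:iam::<12-digit account id>:role/<path/name>` shape in the standard `aws` partition; the name `looks_like_role_arn` is hypothetical, and the check says nothing about whether the role exists or has the right permissions.

```python
import re

# Assumed shape: arn:aws:iam::<12-digit account id>:role/<optional path/><role name>
ROLE_ARN_PATTERN = re.compile(r"^arn:aws:iam::\d{12}:role/[\w+=,.@/-]+$")

def looks_like_role_arn(arn):
    """Return True if the string has the general shape of an IAM role ARN."""
    return bool(ROLE_ARN_PATTERN.match(arn))

# 123456789012 is a made-up account id used only for illustration
good = looks_like_role_arn(
    "arn:aws:iam::123456789012:role/service-role/AmazonSageMaker-ExecutionRole"
)
bad = looks_like_role_arn(
    "arn:aws:iam::account_id:role/service-role/AmazonSageMaker- ExecutionRole"
)
print(good, bad)

# Inside SageMaker Studio, sagemaker.get_execution_role() returns the
# notebook's own role, which avoids hard-coding the ARN altogether.
```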

Now we come to one of the most essential parts: the 'inference.py' script. This script defines how input data is processed during inference. It has several key components: loading the model, preprocessing inputs (such as converting JSON into a DataFrame), running the prediction, formatting the output, and, most importantly, handling errors. Save it as 'inference.py' next to your notebook, since the deployment step refers to it by that name.

import json
import joblib
import os
import numpy as np
import pandas as pd

def model_fn(model_dir):
    # Load the model from the directory where SageMaker unpacks model.tar.gz
    model = joblib.load(os.path.join(model_dir, 'model.pkl'))
    return model

def input_fn(request_body, request_content_type):
    if request_content_type == 'application/json':
        input_data = json.loads(request_body)

        # Ensuring correct data types for the input
        input_data['Store'] = np.int64(input_data['Store'])
        input_data['Holiday_Flag'] = np.int64(input_data['Holiday_Flag'])
        input_data['Month'] = np.int32(input_data['Month'])
        input_data['DayOfWeek'] = np.int32(input_data['DayOfWeek'])
        input_data['Temperature'] = np.float64(input_data['Temperature'])
        input_data['Fuel_Price'] = np.float64(input_data['Fuel_Price'])
        input_data['CPI'] = np.float64(input_data['CPI'])
        input_data['Unemployment'] = np.float64(input_data['Unemployment'])

        input_df = pd.DataFrame([input_data])  # Converting input to DataFrame

        # Reorder the columns to match the order used during training
        expected_features = ['Store', 'Holiday_Flag', 'Temperature', 'Fuel_Price', 'CPI', 'Unemployment', 'Month', 'DayOfWeek']
        input_df = input_df[expected_features]
        return input_df
    else:
        # Reject unsupported content types instead of passing None to the model
        raise ValueError(f"Unsupported content type: {request_content_type}")

def predict_fn(input_data, model):
    prediction = model.predict(input_data)  # Making predictions
    return prediction

def output_fn(prediction, response_content_type):
    # Cast to a built-in float so the value is always JSON-serializable
    result = {'Predicted Weekly Sales': float(prediction[0])}
    return json.dumps(result)
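
Since error handling is called out as the most important part of the script, one small addition worth considering is a check for missing features before the DataFrame is built, so that a bad request produces a readable message rather than a `KeyError`. This is a sketch, not part of the original script; `validate_payload` is a hypothetical helper name, and the feature list is copied from the script above.

```python
EXPECTED_FEATURES = ['Store', 'Holiday_Flag', 'Temperature', 'Fuel_Price',
                     'CPI', 'Unemployment', 'Month', 'DayOfWeek']

def validate_payload(payload, expected=EXPECTED_FEATURES):
    """Raise ValueError listing any expected feature missing from the payload."""
    missing = [name for name in expected if name not in payload]
    if missing:
        raise ValueError(f"Missing features: {', '.join(missing)}")
    return payload

# A deliberately incomplete request to show the error message
try:
    validate_payload({'Store': 41, 'Month': 12})
except ValueError as err:
    message = str(err)
    print(message)
```

Calling such a helper right after `json.loads(request_body)` in `input_fn` would be one place to use it.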

3. The steps above set up the environment and configured the role. Now it is time to DEPLOY!

I am using the following code snippet for this. It points SageMaker to the model archive in S3 and specifies the entry point script for inference.

from sagemaker.sklearn.model import SKLearnModel

model_uri = 's3://bucket_name/model.tar.gz'  # S3 location of the model archive
entry_point = 'inference.py'  # Entry point script

model = SKLearnModel(
    model_data=model_uri,
    role=role,
    entry_point=entry_point,
    framework_version='1.2-1',  # scikit-learn version
    sagemaker_session=sagemaker_session
)

predictor = model.deploy(
    instance_count=1,
    instance_type='ml.m5.large'
)

4. After the deployment completes, the endpoint name and URL can be retrieved from the SageMaker Studio console (the name is also available in the notebook as predictor.endpoint_name). You need the endpoint to test your model and to integrate it with your frontend.

5. It's time to TEST! We'll use the endpoint name to test our model with the following code snippet. Make sure to change the input attributes to match your model.

import boto3
import json

# Initialize the SageMaker runtime client
sagemaker_runtime = boto3.client('sagemaker-runtime')

# Input data
input_data = {
    'Store': 41,
    'Month': 12,
    'DayOfWeek': 17,
    'Holiday_Flag': 0,
    'Temperature': 24.31,
    'Fuel_Price': 44.72,
    'CPI': 119.0964,
    'Unemployment': 5.106
}

# Convert input data to JSON string
payload = json.dumps(input_data)

# Invoke the SageMaker endpoint
response = sagemaker_runtime.invoke_endpoint(
    EndpointName='sagemaker-scikit-learn-2024-08-18-09',  # Replace with your actual endpoint name
    ContentType='application/json',
    Body=payload
)

# Parse and print the response
result = json.loads(response['Body'].read().decode())
print(f"Predicted Weekly Sales: {result['Predicted Weekly Sales']}")
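
The request-and-parse logic above can also be wrapped in a small function, which makes it easy to check without a live endpoint. The sketch below is illustrative only: `predict` and `StubRuntime` are hypothetical names, and the stub returns a fixed placeholder value, not a real prediction.

```python
import io
import json

def predict(runtime_client, endpoint_name, features):
    """Send one JSON record to the endpoint and return the parsed response."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType='application/json',
        Body=json.dumps(features),
    )
    return json.loads(response['Body'].read().decode())

class StubRuntime:
    """Stand-in for boto3.client('sagemaker-runtime') that needs no AWS access."""
    def invoke_endpoint(self, EndpointName, ContentType, Body):
        self.last_request = json.loads(Body)
        # Placeholder value, shaped like the output of output_fn
        body = json.dumps({'Predicted Weekly Sales': 1.0}).encode()
        return {'Body': io.BytesIO(body)}

stub = StubRuntime()
result = predict(stub, 'my-endpoint', {'Store': 41})
print(result)
```

With a real client, the call would be `predict(boto3.client('sagemaker-runtime'), '<your endpoint name>', input_data)`.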

6. And just like that, your model has been successfully deployed! Now we can take it further by connecting it to AWS Lambda and API Gateway to display predictions in a frontend application!

Connecting SageMaker to Lambda

1. Navigate to AWS Lambda

Click 'Create function' and choose Python as the runtime

2. Set up the IAM role:

Lambda needs the right set of permissions. These include access to AWS SageMaker (in particular, permission to invoke the endpoint, sagemaker:InvokeEndpoint) and API Gateway

3. After creating the function, use the following code snippet to connect it to your SageMaker endpoint

```python
import boto3
import json

def lambda_handler(event, context):
    print("Event:", event)

    if 'body' not in event:
        return {
            'statusCode': 400,
            'body': json.dumps({'error': "'body' missing in the event"})
        }

    sagemaker_runtime = boto3.client('sagemaker-runtime')

    try:
        # With proxy integration the body arrives as a JSON string;
        # console test events may pass a dict instead
        body = event['body']
        input_data = json.loads(body) if isinstance(body, str) else body

        response = sagemaker_runtime.invoke_endpoint(
            EndpointName='sagemaker-scikit-learn-2024-08-18-09',  # Replace with your actual endpoint name
            ContentType='application/json',
            Body=json.dumps(input_data)
        )

        result = json.loads(response['Body'].read().decode())

        return {
            'statusCode': 200,
            'headers': {'Content-Type': 'application/json'},
            'body': json.dumps({'predicted_sales': result})
        }

    except Exception as e:
        return {
            'statusCode': 500,
            'body': json.dumps({'error': str(e)})
        }
```
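
To try the handler from the Lambda console's Test tab, you need a sample event that carries a 'body' key. The sketch below builds one in the general shape that an API Gateway proxy integration sends; `make_proxy_event` is a hypothetical helper, and real events contain many more fields than shown here.

```python
import json

def make_proxy_event(features):
    """Build a minimal event resembling an API Gateway proxy request."""
    return {
        'httpMethod': 'POST',
        'headers': {'Content-Type': 'application/json'},
        'body': json.dumps(features),  # the body is a JSON string, not a dict
    }

event = make_proxy_event({
    'Store': 41, 'Month': 12, 'DayOfWeek': 17, 'Holiday_Flag': 0,
    'Temperature': 24.31, 'Fuel_Price': 44.72, 'CPI': 119.0964,
    'Unemployment': 5.106,
})

# The printed JSON can be pasted into the Lambda console as a test event
print(json.dumps(event, indent=2))
```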

4. Deploy the function! You have successfully connected AWS SageMaker and Lambda!

Now, with the connection established, the next steps are to connect the function to API Gateway and integrate it with your frontend application.
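
Once API Gateway is in front of the function, any HTTP client can request a prediction. As a rough sketch of what the frontend's call amounts to, here is the request built in Python with the standard library. The URL is hypothetical (API Gateway shows the real invoke URL after you deploy a stage), and `build_request` is an illustrative name.

```python
import json
import urllib.request

# Hypothetical invoke URL; replace it with the one API Gateway gives you
API_URL = "https://example.execute-api.us-east-1.amazonaws.com/prod/predict"

def build_request(features, url=API_URL):
    """Prepare a JSON POST request carrying the model's input features."""
    return urllib.request.Request(
        url,
        data=json.dumps(features).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

request = build_request({'Store': 41, 'Month': 12})
print(request.get_method(), request.full_url)

# Sending it requires a deployed API:
#   with urllib.request.urlopen(request) as resp:
#       print(json.loads(resp.read().decode()))
```

A browser-based frontend would send the same JSON body and headers using its own HTTP client.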

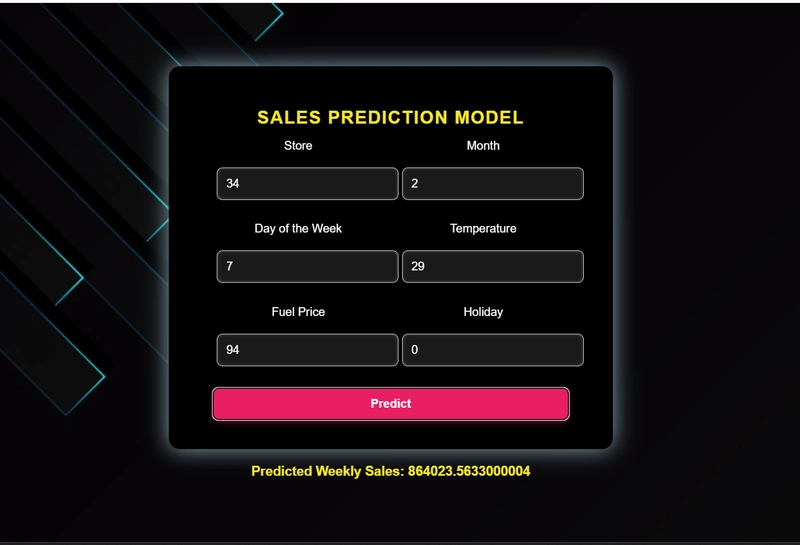

Frontend in Action

After a successful connection, you'll see your frontend receiving the predictions! Here's mine!

Conclusion

Deploying a machine learning model on AWS SageMaker bridges the gap between development and production. The steps above should help you navigate your way to deploying your first model!

If you encounter any issues or need additional insights during the deployment process, CloudWatch is your go-to tool for monitoring and troubleshooting!
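
When looking for logs in CloudWatch, it helps to know where each service writes them. The sketch below builds the default log group names for a SageMaker endpoint and a Lambda function. The helper names are hypothetical, and 'my-prediction-function' is a made-up function name.

```python
def endpoint_log_group(endpoint_name):
    """Default CloudWatch log group for a SageMaker endpoint's containers."""
    return f"/aws/sagemaker/Endpoints/{endpoint_name}"

def lambda_log_group(function_name):
    """Default CloudWatch log group for a Lambda function."""
    return f"/aws/lambda/{function_name}"

print(endpoint_log_group("sagemaker-scikit-learn-2024-08-18-09"))
print(lambda_log_group("my-prediction-function"))
```

Errors raised inside 'inference.py' show up in the endpoint's log group, while the handler's `print("Event:", event)` output lands in the Lambda one.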
