Cursor has shaken up its Pro plan recently. The new model—"unlimited-with-rate-limits"—sounds like a dream, but what does it actually mean for developers? Let’s delve into Cursor’s official explanation, user reactions, and how you can truly optimize your workflow.

Cursor Pro Plan Rate Limits: Everything You Need to Know

Understanding how rate limits work is key to getting the most out of your Cursor Pro Plan. Cursor meters rate limits based on underlying compute usage, and these limits reset every few hours. Here’s a clear breakdown of what that means for you.

What Are Cursor Rate Limits?

Cursor applies rate limits to Agent usage on all plans. These limits are designed to balance fair usage and system performance. There are two main types of rate limits:

1. Burst Rate Limits:

- Allow for short, high-activity sessions.

- Can be used at any time for particularly bursty work.

- Slow to refill after use.

2. Local Rate Limits:

- Refill fully every few hours.

- Designed for steady, ongoing usage.
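Cursor does not publish the real numbers, but the two limit types can be pictured as two buckets. The sketch below is purely illustrative. Every capacity, the four-hour window, the 24-hour burst refill, and the rule that the local limit is spent before the burst limit are assumptions made up for this example, not Cursor’s actual values or logic.

```python
class TwoBucketLimiter:
    """Illustrative model of burst + local limits. All numbers are hypothetical."""

    def __init__(
        self,
        local_capacity: float = 100.0,     # hypothetical compute units per window
        local_window_s: float = 4 * 3600,  # "every few hours": assumed 4 hours
        burst_capacity: float = 50.0,      # hypothetical burst reserve
        burst_refill_s: float = 24 * 3600, # assumed time to refill burst from empty
    ) -> None:
        self.local_capacity = local_capacity
        self.local_window_s = local_window_s
        self.burst_capacity = burst_capacity
        self.burst_rate = burst_capacity / burst_refill_s  # units regained per second
        self.local = local_capacity
        self.burst = burst_capacity
        self.window_start = 0.0
        self.last_t = 0.0

    def _refill(self, t: float) -> None:
        # Local limit: resets to full at the start of each new window.
        windows_passed = int((t - self.window_start) // self.local_window_s)
        if windows_passed > 0:
            self.local = self.local_capacity
            self.window_start += windows_passed * self.local_window_s
        # Burst limit: trickles back slowly and continuously, capped at capacity.
        self.burst = min(self.burst_capacity, self.burst + (t - self.last_t) * self.burst_rate)
        self.last_t = t

    def consume(self, t: float, cost: float) -> str:
        """Charge a request of `cost` units at time `t` (seconds); report which bucket paid."""
        self._refill(t)
        if self.local >= cost:  # assumption: the steady local allowance is spent first
            self.local -= cost
            return "local"
        if self.burst >= cost:  # then the burst reserve absorbs the overflow
            self.burst -= cost
            return "burst"
        return "limited"        # both exhausted: this is where Cursor would notify you
```

With these made-up settings, four requests costing 40 units each at the same moment come back as `local`, `local`, `burst`, `limited`, and a request made four hours later is served from a full local bucket again, while the burst bucket has only partly recovered.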

Both types of limits are based on the total compute you use during a session. This includes:

- The model you select

- The length of your messages

- The size of files you attach

- The length of your current conversation
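To see how these four factors add up, here is a rough cost estimate. It is a sketch under loud assumptions: the model weights are invented (only the ordering, Opus heavier than Sonnet, follows Cursor’s guidance), tokens are approximated as four characters each, and it assumes the whole conversation history is re-sent with every message, which is why long conversations get steadily more expensive.

```python
from typing import List

# Hypothetical relative weights per model; Cursor publishes no such numbers.
MODEL_WEIGHT = {"sonnet": 1.0, "opus": 5.0}


def estimate_tokens(text: str) -> float:
    # Rough rule of thumb: about 4 characters per token for English prose and code.
    return len(text) / 4


def estimate_request_cost(
    model: str,
    message: str,
    attached_files: List[str],
    history: List[str],
) -> float:
    """Relative compute for one Agent request under the assumptions stated above."""
    tokens = estimate_tokens(message)                           # length of your message
    tokens += sum(estimate_tokens(f) for f in attached_files)   # size of attached files
    tokens += sum(estimate_tokens(m) for m in history)          # earlier turns re-sent as context
    return MODEL_WEIGHT[model] * tokens                          # heavier model, higher cost
```

With these numbers, a 400-character message to `sonnet` costs 100 units, the same message to `opus` costs 500, and sending it to `sonnet` after three earlier 400-character messages costs 400.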

How Do Rate Limits Work?

- Agent usage is rate-limited on every plan.

- Limits reset every few hours, so you can resume work after a short break.

- Compute usage varies: Heavier models, longer messages, and larger files will use up your limits faster.

What Happens If You Hit a Limit?

If you use up both your local and burst limits, Cursor will notify you and present three options:

- Switch to models with higher rate limits (e.g., Sonnet has higher limits than Opus).

- Upgrade to a higher tier (such as the Ultra plan).

- Enable usage-based pricing to pay for requests that exceed your rate limits.

Can I Stick with the Old Cursor Pro Plan?

Yes! If you prefer a simple, fixed request allowance, you can keep the legacy Pro Plan. Go to Dashboard > Settings > Advanced to control this setting. For most users, though, the new Pro plan with rate limits will be preferable.

Quick Reference Table

| Limit Type | Description | Refill Behavior |
|---|---|---|
| Burst Rate Limit | For short, high-activity sessions | Refills slowly after use |
| Local Rate Limit | For steady, ongoing usage | Refills fully every few hours |

User Reactions: Confusion, Frustration, and Calls for Clarity

Cursor’s new pricing model has sparked a wave of discussion—and not all of it is positive. Here’s what users are saying:

- Ambiguity: Many users complain that the documentation is vague. Phrases like “burst rate limits” and “local rate limits” are unclear without concrete numbers.

- Lack of Transparency: Developers want to know exactly how many requests they can make, how compute is measured, and how often limits reset. The absence of specifics has led to frustration.

- Comparisons to Other Tools: Some users compare Cursor’s new model to other AI tools with similarly ambiguous rate limits, expressing concern that “unlimited” is just a marketing term.

Key Takeaway:

- The unlimited-with-rate-limits model gives average users more flexibility, but power users may still hit walls, and nearly everyone is asking for more transparency.

What Rate Limits Mean for Your Workflow: The Developer’s Dilemma

So, what does “unlimited-with-rate-limits” mean for your day-to-day coding?

- For most users: You’ll likely enjoy more freedom and fewer hard stops than before.

- For power users: You may still run into rate limits, especially during intense coding sessions or when using large models/files.

- For everyone: The lack of clear numbers makes it hard to plan or optimize your workflow.

If you hit a rate limit:

- You’ll see a message with options to switch models, upgrade, or pay for extra usage.

- You can always revert to the legacy plan for predictable quotas.

Rate Limit Scenarios

| Scenario | What Happens? |
|---|---|
| Light daily use | Rarely hit limits; smooth experience |
| Bursty coding sessions | May hit burst/local limits and need to wait |
| Heavy/enterprise use | May need the Ultra plan or usage-based pricing |

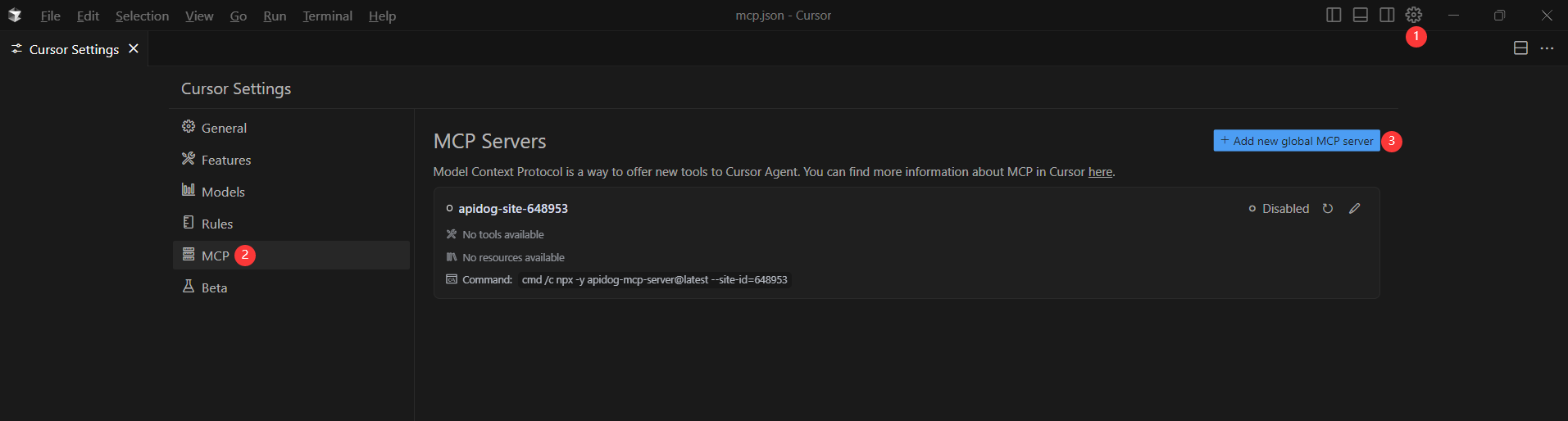

Pro Tip: If you want to get more out of your API workflow while reducing the uncertainty of rate limits, Apidog’s free MCP Server is worth a look. Read on to learn how to set it up!

Use Apidog MCP Server with Cursor to Avoid Rate Limits

Apidog MCP Server lets you connect your API specifications directly to Cursor, enabling smarter code generation, instant API documentation access, and seamless automation, all for free. Cursor’s Agent can then read and work with your API documentation directly instead of relying on specs you paste into the chat. Since message length and attached files count toward your compute usage, this can speed up development while helping you stay under Cursor’s rate limits.

Step 1: Prepare Your OpenAPI File

- Get your API definition as a URL (e.g., `https://petstore.swagger.io/v2/swagger.json`) or a local file path (e.g., `~/projects/api-docs/openapi.yaml`).

- Supported formats: `.json` or `.yaml` (OpenAPI 3.x recommended).
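A quick pre-flight check can catch two common problems before you touch Cursor: a file with an unsupported extension, or a document that is not an OpenAPI/Swagger spec at all. The sketch below uses hypothetical helpers (`has_supported_extension` and `spec_version` are our names, not part of Apidog). It follows the `.json`/`.yaml` rule above and, because Python’s standard library has no YAML parser, only inspects the version field of JSON specs.

```python
import json
from pathlib import PurePosixPath
from typing import Optional
from urllib.parse import urlparse

SUPPORTED_SUFFIXES = {".json", ".yaml"}  # formats listed in Step 1


def has_supported_extension(location: str) -> bool:
    """Accept a URL or a local path whose file name ends in .json or .yaml."""
    # For URLs, look only at the path part so query strings don't interfere.
    path = urlparse(location).path if "://" in location else location
    return PurePosixPath(path).suffix.lower() in SUPPORTED_SUFFIXES


def spec_version(json_text: str) -> Optional[str]:
    """Return e.g. 'openapi 3.0.3' or 'swagger 2.0' for a JSON spec, else None."""
    doc = json.loads(json_text)
    if isinstance(doc, dict):
        for key in ("openapi", "swagger"):
            if key in doc:
                return f"{key} {doc[key]}"
    return None
```

If `spec_version` reports `swagger 2.0`, the file predates the recommended OpenAPI 3.x, which is worth knowing before you debug anything else.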

Step 2: Add MCP Configuration to Cursor

- Open Cursor’s `mcp.json` file.

- Add the following configuration, replacing the Petstore URL in the `--oas=` argument with your own OpenAPI URL or file path:

For macOS/Linux:

```json
{
  "mcpServers": {
    "API specification": {
      "command": "npx",
      "args": [
        "-y",
        "apidog-mcp-server@latest",
        "--oas=https://petstore.swagger.io/v2/swagger.json"
      ]
    }
  }
}
```

For Windows:

```json
{
  "mcpServers": {
    "API specification": {
      "command": "cmd",
      "args": [
        "/c",
        "npx",
        "-y",
        "apidog-mcp-server@latest",
        "--oas=https://petstore.swagger.io/v2/swagger.json"
      ]
    }
  }
}
```

Step 3: Verify the Connection
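Before asking the Agent, it can help to rule out a malformed `mcp.json`, since a stray brace or a half-pasted second configuration is an easy way to break the file. The sketch below is a hypothetical helper (`check_mcp_config` is our name, not part of Cursor or Apidog) that parses the file with Python’s standard `json` module and checks only the structure shown in Step 2: an `mcpServers` object whose Apidog entry runs `npx` or `cmd` and passes an `--oas=` argument. It does not start the server or talk to Cursor.

```python
import json
from typing import List


def check_mcp_config(text: str) -> List[str]:
    """Return problems found in the text of an mcp.json file; empty means it looks OK."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg} (line {exc.lineno})"]
    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict):
        return ["no 'mcpServers' object at the top level"]

    problems: List[str] = []
    found = False
    for name, server in servers.items():
        args = server.get("args", []) if isinstance(server, dict) else []
        if not any(isinstance(a, str) and a.startswith("apidog-mcp-server") for a in args):
            continue  # some other MCP server; not checked here
        found = True
        if server.get("command") not in ("npx", "cmd"):
            problems.append(f"{name!r}: expected command 'npx' (macOS/Linux) or 'cmd' (Windows)")
        if not any(isinstance(a, str) and a.startswith("--oas=") for a in args):
            problems.append(f"{name!r}: missing --oas=<url-or-path> argument")
    if not found:
        problems.append("no server entry runs apidog-mcp-server")
    return problems
```

You would pass it the file’s contents, for example `check_mcp_config(open(path, encoding="utf-8").read())`, where `path` is wherever your `mcp.json` lives.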

- In Cursor, switch to Agent mode and type: “Please fetch API documentation via MCP and tell me how many endpoints exist in the project.”

- If successful, you’ll see a structured response listing your API endpoints.
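To sanity-check the Agent’s answer, you can count the endpoints in the spec yourself. This is a minimal sketch, assuming a JSON spec saved locally (YAML would need a third-party parser); `count_endpoints` and `count_endpoints_in_file` are hypothetical helpers. It returns both the number of paths and the number of operations (path plus HTTP method), because “endpoint” can reasonably mean either, so the Agent’s count may match one or the other.

```python
import json
from typing import Any, Dict, Tuple

# Operation keys allowed in a Path Item Object ("trace" exists only in OpenAPI 3.x).
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def count_endpoints(spec: Dict[str, Any]) -> Tuple[int, int]:
    """Return (number of paths, number of path + method operations) in a parsed spec."""
    paths = spec.get("paths") or {}
    operations = sum(
        1
        for path_item in paths.values()
        for key in path_item
        if key.lower() in HTTP_METHODS  # ignores shared keys such as "parameters"
    )
    return len(paths), operations


def count_endpoints_in_file(path: str) -> Tuple[int, int]:
    """Load a local JSON spec and count its paths and operations."""
    with open(path, encoding="utf-8") as fh:
        return count_endpoints(json.load(fh))
```

A spec with `/pets` (GET, POST) and `/pets/{id}` (GET, DELETE) yields `(2, 4)`: two paths, four operations.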

Conclusion: Don’t Let Rate Limits Hold You Back

Cursor’s shift to an “unlimited-with-rate-limits” model reflects a growing trend in AI tooling: offer flexibility without compromising infrastructure stability. For most developers, particularly those who don’t rely on high-volume interactions, this change provides more freedom to work dynamically throughout the day.

However, the lack of clear, quantifiable limits has created friction, especially among power users who need predictable performance. Terms like “burst” and “local” limits sound technical yet remain vague without concrete figures. Developers planning long, compute-heavy sessions or working on large files may find themselves unexpectedly throttled. And while options like upgrading or switching models are available, they still introduce an element of disruption to a smooth coding workflow.

The good news? You’re not locked in. Cursor lets you stick with the legacy Pro plan if the new system doesn’t suit your needs. And if you want to supercharge your AI-assisted coding even further, integrating Apidog’s free MCP Server can help you work around some of these limitations. With direct API access, instant documentation sync, and powerful automation tools, Apidog enhances your productivity while keeping you in control.

With Apidog MCP Server, you can:

- Connect your API documentation directly to Cursor and other AI-powered IDEs

- Automate code generation, documentation, and testing—right inside your workflow

- Reduce quota anxiety and rate limit surprises

- Enjoy a free, powerful tool that puts you in control